Unveiling the Vision: A Comprehensive Review of Computer Vision in AI and ML

A review of convolutional neural networks in computer vision

- Open access

- Published: 23 March 2024

- Volume 57, article number 99 (2024)

- Xia Zhao,

- Limin Wang,

- Yufei Zhang,

- Xuming Han,

- Muhammet Deveci &

- Milan Parmar

In computer vision, a series of exemplary advances have been made in several areas involving image classification, semantic segmentation, object detection, and image super-resolution reconstruction with the rapid development of deep convolutional neural network (CNN). The CNN has superior features for autonomous learning and expression, and feature extraction from original input data can be realized by means of training CNN models that match practical applications. Due to the rapid progress in deep learning technology, the structure of CNN is becoming more and more complex and diverse. Consequently, it gradually replaces the traditional machine learning methods. This paper presents an elementary understanding of CNN components and their functions, including input layers, convolution layers, pooling layers, activation functions, batch normalization, dropout, fully connected layers, and output layers. On this basis, this paper gives a comprehensive overview of the past and current research status of the applications of CNN models in computer vision fields, e.g., image classification, object detection, and video prediction. In addition, we summarize the challenges and solutions of the deep CNN, and future research directions are also discussed.

1 Introduction

Computer vision is gaining popularity as a buzzword in the field of image processing. Human activity recognition (HAR), an established trend with numerous real-life applications including elderly care monitoring, rehabilitation activity tracking, posture correction analysis, and intrusion detection in security, is a prominent area of research in the field of computer vision (Singh and Vishwakarma 2019 ). Over the years, deep learning advances in computer vision have attracted the attention of many scholars in the field of human action recognition (Vishwakarma and Singh 2019 ; Singh and Vishwakarma 2021 ; Dhiman and Vishwakarma 2020 ). The convolutional neural network (CNN) is used to construct the majority of computer vision algorithms. A convolutional neural network (Li et al. 2021 ), known for local connectivity of neurons, weight sharing, and down-sampling, is a deep feed-forward multilayered hierarchical network inspired by the receptive field mechanism in biology. As one of the deep learning models, a CNN can also achieve “end-to-end” learning. Through multiple layers of feature transformation, the underlying feature representation of the original data is gradually transformed into a higher-level feature representation, and the processed data is fed into a prediction function to settle the final classification or other tasks. The representation learned by the machine itself can generate good features, avoiding “feature engineering”.

In 2006, Hinton et al. proposed several perspectives in their article, which was published in Science (Hinton and Salakhutdinov 2006), including (1) that artificial neural networks with multiple hidden layers have a robust feature learning capability and (2) that the difficulty of training deep neural networks can be greatly reduced by the “layer-by-layer initialization” method. Since then, deep learning has become a hot topic in both academia and industry, and it has made a splash in computer vision, speech recognition, machine translation, and other fields. Meanwhile, another learning boom in artificial neural networks (Yu et al. 2013) has kicked off. As a typical neural network model of deep learning, the CNN has also gained wide attention. One of the most widely noted models is AlexNet (Alom et al. 2018), which won the ImageNet Large Scale Visual Recognition Competition (ILSVRC) in 2012 due to its excellent performance. Following AlexNet’s improved accuracy on computer vision tasks such as image classification, researchers started to remedy the defects of AlexNet-based network models in the expectation of further enhancing their performance. Significant advances have been made in model optimization, and some of the most representative neural network models are Visual Geometry Group (VGG) (Sengupta et al. 2019), GoogLeNet (Khan et al. 2019), Residual Network (ResNet) (Wightman et al. 2021), Squeeze and Excitation Network (SENet) (Jin et al. 2022), and MobileNet (Chen et al. 2022). With the development of these network architectures, neural network models tend to be deeper, wider, and more complex. Although this evolution can help the networks capture better feature representations, there is no guarantee that they operate efficiently in all cases. Models still suffer from disadvantages: the networks are more likely to overfit, and instead of decreasing, the error rate on the training set can increase as the networks become deeper and more complex. To remedy the shortcomings of these models, many scholars have come up with various techniques to optimize the structure of CNN, e.g., network pruning (Yang et al. 2023), knowledge distillation (Guo et al. 2023), and tensor decomposition (Fernandes et al. 2021).

Despite the significant achievements of CNN in computer vision and related applications such as image classification (Chandra and Bedi 2021), object detection (Ma et al. 2023), speech recognition (Li et al. 2022), sentiment analysis (Chan et al. 2023), and video recognition (Yan et al. 2022), the field continues to face various challenges and opportunities. As computer vision tasks become increasingly complex, there is a pressing need for CNN models and algorithms that offer higher performance and efficiency. Moreover, current research focuses on addressing key issues such as knowledge sharing across different tasks, domain adaptation, and interpretability. Taking these considerations into account, this paper aims to comprehensively summarize and analyze the applications of CNN in computer vision, with a particular emphasis on the latest advancements in tasks including image classification, object detection, and video prediction. The contributions of this survey paper are summarized below:

- A holistic literature review of CNN in computer vision, including image classification, object detection, and video prediction, is presented in this paper.

- A theoretical understanding of the CNN design principles and techniques, such as convolution, filter size, stride, down sampling, optimizer, etc., is explained in detail.

- The image classification and object detection performance of existing algorithms is compared on the datasets of their respective domains.

- Classical architectures for deep learning and CNN-based visual models are highlighted.

- The current challenges involved and future research directions for CNN are identified and presented.

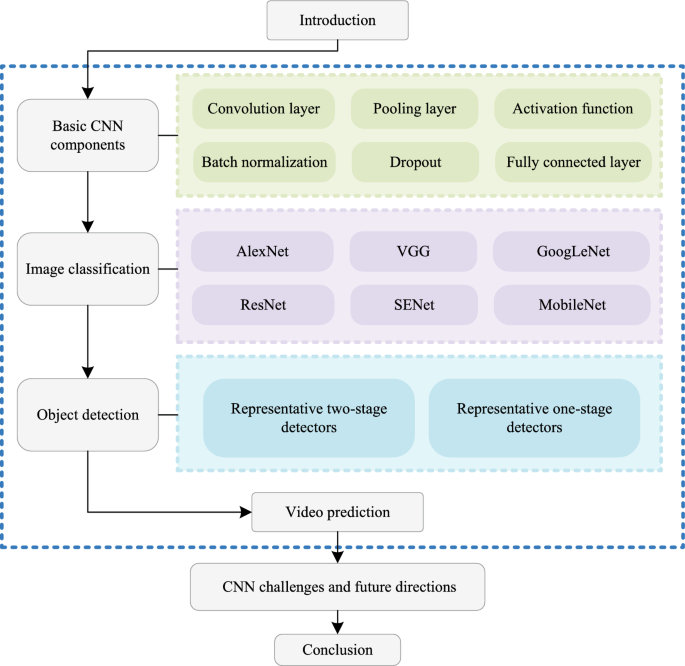

The remaining part of the paper proceeds as follows (shown in Fig. 1 ): Section 2 gives a basic introduction to the elementary components of CNN and their corresponding functions. Sections 3 , 4 , and 5 summarize the relevant research models and methods in three application directions, namely, image classification, object detection, and video prediction, respectively. In Sects. 6 and 7 , through synthesizing the current research status, the issues of CNN are analyzed and summarized. In addition, an outlook on future research trends is provided.

Layout of the paper illustrating the overall process (This paper is reviewed in the order of introduction, basic CNN components, image classification, object detection, video prediction, CNN challenges and future directions, and conclusion. Among them, the convolution layer, pooling layer, activation function, batch normalization, dropout, and fully connected layer are introduced in the basic CNN components. An introduction to image classification includes AlexNet, VGG, GoogLeNet, ResNet, SENet, and MobileNet. We overview object detection according to two-stage and one-stage. Video prediction is a popular area of research in the field of CNN. This part presents the state-of-the-art models in video prediction. The conclusion summarizes the challenges related to CNN and outlines future research directions.)

2 Basic CNN components

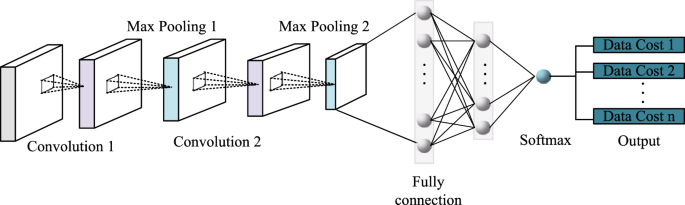

Although there are numerous variations of CNN models, the overall architecture is essentially the same and adheres to a fixed paradigm, consisting of an input layer, alternating convolution and pooling layers, one or more fully connected layers, activation functions, and an output layer at the end. The first half of the network comprises a number of convolution and pooling layers stacked alternately to form a feature extractor, which transforms the preprocessed raw input data into a more abstract and higher-level feature representation. Fully connected layers are used in combination with activation functions to execute tasks such as classification or regression on the extracted features. To maximize CNN performance, various regulatory units like batch normalization and dropout are also included in addition to various mapping functions (Bouvrie 2006). Fig. 2 shows various CNN components. The configuration of CNN components is essential to creating new architectures and, ultimately, to obtaining improved performance. It is crucial to comprehend various CNN components and their respective applications in order to learn about the developments in CNN architecture in computer vision (Bhatt et al. 2021). The role of these components in a CNN architecture is covered in brief in this section.

Structure of CNN (Suppose this is an n-classification problem. The original data is convolved twice (Convolution 1, Convolution 2), pooled twice (Max Pooling 1, Max Pooling 2), and output to the fully connected layer (Fully connection), and finally the Softmax activation function compresses the output vectors of the full connection layer into (0, 1) and outputs them in the output layer. The Data Cost 1 represents the probability of belonging to the n categories; the larger the value, the greater the possibility of belonging to the category.)

Before being input to a CNN, the raw data needs to be preprocessed. The common processing methods include homogenization (Stepanov et al. 2023), normalization (Huang et al. 2023), and principal component analysis (PCA) (Uddin et al. 2021). To achieve homogenization, the average value calculated across the complete training set is subtracted from the input so that each dimension is centered at zero. Normalization rescales the data so that all dimensions share the same range. Dimension reduction with PCA lessens the correlation between data dimensions by projecting the centered input onto its principal components.
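The three preprocessing steps can be sketched in NumPy as follows. This is a minimal illustration, not the procedure of any cited work: the function names, the min-max form of normalization, and the random data are assumptions made for the example.

```python
import numpy as np

def center(train, data):
    """Homogenization: subtract the per-dimension mean of the training set."""
    return data - train.mean(axis=0)

def min_max_normalize(train, data, eps=1e-12):
    """Normalization (min-max variant, assumed here): rescale each dimension to about [0, 1]."""
    lo, hi = train.min(axis=0), train.max(axis=0)
    return (data - lo) / (hi - lo + eps)

def pca_reduce(train, data, k):
    """PCA: project centered data onto the top-k principal directions of the training set."""
    mean = train.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(train - mean, full_matrices=False)
    return (data - mean) @ vt[:k].T

# Hypothetical data: 100 samples with 4 features each.
rng = np.random.default_rng(0)
x_train = rng.normal(loc=5.0, scale=2.0, size=(100, 4))

centered = center(x_train, x_train)
scaled = min_max_normalize(x_train, x_train)
reduced = pca_reduce(x_train, x_train, k=2)
```

Statistics are always computed on the training set and then reused for any other data, so that validation and test inputs are transformed in the same way.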

2.1 Convolution layer

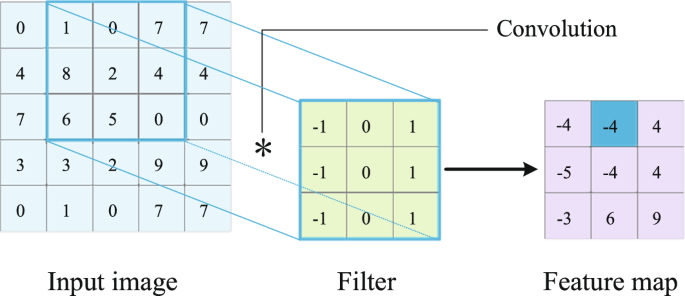

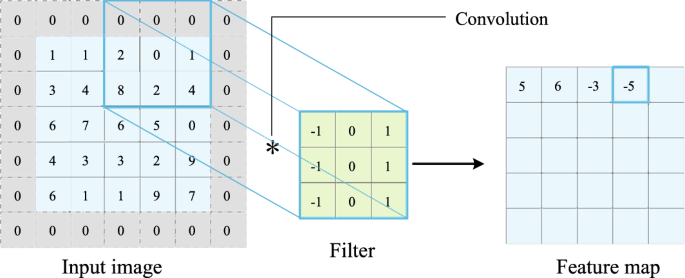

The convolution layer, which extracts various features from different local regions of the input data, is composed of a collection of convolution kernels, with each neuron acting as a kernel. Each convolution kernel has three dimensions: length ( L ), width ( W ), and depth ( D ). In the convolution layer of a CNN, the length and width of the convolution kernel are chosen by the designer; \(L \times W\) is also known as the size of the convolution kernel. Commonly used sizes are \(3 \times 3\) , \(5 \times 5\) , etc. The number of channels, also known as the depth or the number of feature maps, is the number of feature maps output from each layer in the CNN, and the depth of a convolution kernel equals the number of channels of the feature map it is applied to. The number of channels directly affects the feature extraction ability and computational complexity of the CNN. Increasing the number of channels enhances the feature extraction ability of the CNN, but it also increases the computational complexity. A convolution operation is the process of sliding a convolution kernel (filter) over the input image, multiplying the kernel weights by the pixel values at the corresponding positions of the input image, and summing the products to obtain a feature map. The convolution process is depicted in Fig. 3 using a single-channel \(5 \times 5\) original image and a \(3 \times 3\) convolution kernel. Each value of the feature map is obtained by multiplying and summing the pixel values of the original image covered by the convolution kernel at the corresponding position. In Fig. 3 , the − 4 on the blue background in the feature map is calculated as follows: \( - 4 = ( - 1) \times 1 + 0 \times 0 + 1 \times 7 + ( - 1) \times 8 + 0 \times 2 + 1 \times 4 + ( - 1) \times 6 + 0 \times 5 + 1 \times 0 \) .

Convolution procedure
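The multiply-and-sum procedure can be sketched in a few lines of NumPy. The kernel and the \(3 \times 3\) patch below are read off the arithmetic given for the − 4 entry; placing that patch in the top-left corner of a zero-filled \(5 \times 5\) image is an assumption made only for this illustration, as is the function name `conv2d`. Like most deep learning code, the sketch does not flip the kernel, which matches the calculation in the text.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide the kernel over the image with stride 1 and no padding."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Element-wise product of the kernel and the covered window, then sum.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]])
patch = np.array([[1, 0, 7],
                  [8, 2, 4],
                  [6, 5, 0]])

# Hypothetical 5 x 5 image: the patch in the top-left corner, zeros elsewhere.
image = np.zeros((5, 5))
image[:3, :3] = patch

feature_map = conv2d(image, kernel)
print(feature_map.shape)   # (3, 3)
print(feature_map[0, 0])   # -4.0
```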

By convolving the original image with the filters and applying a nonlinear activation function to obtain new feature mappings, each feature mapping can be used as a class of extracted image features. To extract higher-level and more complete feature representations, the network model can stack multiple convolution layers. The weight-sharing property of the convolution operation allows a feature to be detected anywhere in an image by sliding a kernel with the same set of weights over it, which makes a CNN far more parameter-efficient than a fully connected network. Furthermore, it also allows the network to have fewer neuron connections and a simpler network architecture, which facilitates the training of the network.

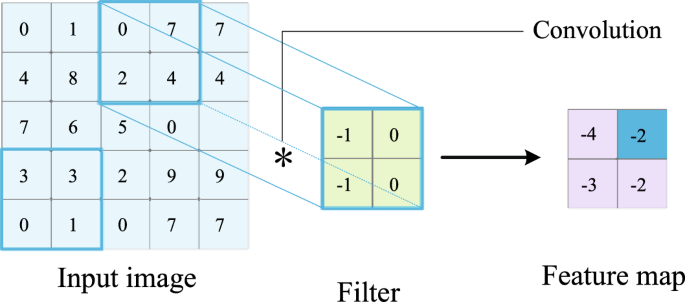

Stride is the number of rows and columns that the convolution kernel slides over the input matrix in order from left to right and top to bottom, starting from the top left of the input matrix. For example, in Fig. 3 , the stride is 1 in both the height and width directions. In addition, we can also use a larger stride. Fig. 4 illustrates a convolution operation with a stride of 3 in the vertical direction and 2 in the horizontal direction. At the output of the second element of the first column, the convolution window is slid down 3 rows, and the elements used for the calculation are: \(( - 1) \times 3 + 0 \times 3 + ( - 1) \times 0 + 0 \times 1 = - 3\) . The convolution window slides two columns to the right when the second element of the first row is output. The elements that were used in the calculation are: \(( - 1) \times 0 + 0 \times 7 + ( - 1) \times 2 + 0 \times 4 = - 2\) . Since the input elements cannot fill the convolution kernel window, no result is produced when the convolution window slides two more columns to the right on the input. The output data size, computational complexity, and feature extraction capability can all be impacted by the stride. The output data size reduces and the ability to extract features weakens as the stride increases, but the computation speed increases.

Convolution procedure (stride=(2,3))
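A strided convolution can be sketched as below. The \(2 \times 2\) kernel is inferred from the arithmetic in the text; the \(5 \times 5\) input filled with the values 0 to 24 and the name `conv2d_strided` are assumptions for illustration and do not reproduce the figure. The output size along each axis follows \(\lfloor (n - k)/s \rfloor + 1\) for input size \(n\), kernel size \(k\), and stride \(s\).

```python
import numpy as np

def conv2d_strided(image, kernel, stride=(1, 1)):
    """Convolution without padding; stride is given as (vertical, horizontal)."""
    s_v, s_h = stride
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // s_v + 1
    out_w = (image.shape[1] - kw) // s_h + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * s_v, j * s_h  # top-left corner of the current window
            out[i, j] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

# Hypothetical input; the kernel matches the weights used in the text's arithmetic.
image = np.arange(25).reshape(5, 5)
kernel = np.array([[-1, 0],
                   [-1, 0]])

out = conv2d_strided(image, kernel, stride=(3, 2))
print(out.shape)  # (2, 2): a third column would need input columns 4 and 5
```

With a width of 5, a kernel width of 2, and a horizontal stride of 2, only two window positions fit, which is why no further output is produced after the second column.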

Padding is the process of adding a certain number of pixels to the edges of the input data so that the size of the output data can match the input data. As shown in Fig. 5 , values are added on the boundary of the matrix to increase its size, usually zeros or copies of the boundary pixels. Padding is frequently used in CNN to prevent feature map sizes from shrinking at each layer. Furthermore, padding makes it easier for the convolution kernel to learn the information at the borders of the input image. For instance, when a \(5 \times 5 \times 1\) image is padded to \(7 \times 7 \times 1\) and a \(3 \times 3 \times 1\) kernel is applied over it, the convolved matrix has dimensions \(5 \times 5 \times 1\) , the same as the input image. Without padding, the output shrinks: a \(5 \times 5 \times 1\) image is converted to a \(3 \times 3 \times 1\) feature map (Bhatt et al. 2021).
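The size bookkeeping in this example follows the usual output-size formula \(\lfloor (n + 2p - k)/s \rfloor + 1\), where \(n\) is the input size, \(k\) the kernel size, \(p\) the padding per side, and \(s\) the stride. The sketch below checks the \(5 \times 5\) case with NumPy's `np.pad`; the helper name `output_size` and the all-ones image are assumptions for illustration.

```python
import numpy as np

def output_size(n, k, p=0, s=1):
    """Output length along one axis for input n, kernel k, padding p, stride s."""
    return (n + 2 * p - k) // s + 1

image = np.ones((5, 5))  # hypothetical single-channel image

# Zero padding: one ring of zeros around the image.
zero_padded = np.pad(image, pad_width=1, mode="constant", constant_values=0)
# Replication padding: copy the boundary pixels outward.
edge_padded = np.pad(image, pad_width=1, mode="edge")

print(zero_padded.shape)          # (7, 7)
print(output_size(5, 3, p=1))     # 5: same size as the input
print(output_size(5, 3, p=0))     # 3: the feature map shrinks without padding
```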

2.2 Pooling layer

After the feature maps are obtained, a pooling (down sampling) layer is typically added. The neurons in the pooling layer are connected to local receptive fields of their input layer, i.e., the convolution layer, and the local receptive fields of different neurons usually do not overlap. The pooling procedure, like the convolution process, can be thought of as applying a function without weights: the input feature mapping group is divided into many regions, and each region is pooled to yield a single value that summarizes it. Pooling functions that are commonly used are max pooling and average pooling.

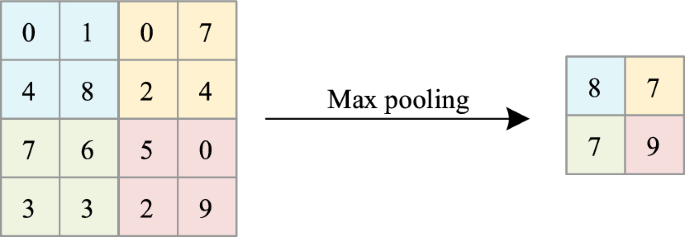

For a region, max pooling selects the maximum activity value of all neurons as the representation of this region and extracts the most significant features from the input feature mapping, which is generally used for low-level feature extraction. In the case of max pooling (stride = 2), as shown in Fig. 6 , a kernel of size \(2 \times 2\) is moved across the matrix, and the maximum value is selected and put in the appropriate spot of the output matrix. For example, pooling the four numbers ’0, 1, 4, 8’ in the blue region yields 8, the maximum of these four numbers.

Max pooling

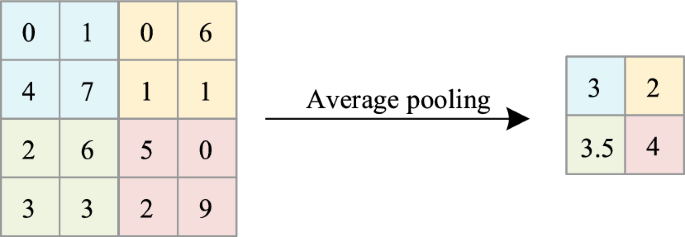

Average pooling takes the arithmetic mean of all elements in the region as the output result of the function, namely, the mean value of the local response of the extracted feature mapping. The average pooling results with filter = \(2 \times 2\) and stride 2 are shown in Fig. 7 . It is evident that the green region’s pooling result is (2 + 6 + 3 + 3)/4 = 3.5.

Average pooling
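Both pooling variants can be sketched with one small NumPy function. In the input below, the top-left block (0, 1, 4, 8) and the top-right block (2, 6, 3, 3) are the regions quoted in the text; the bottom two rows and the name `pool2d` are assumptions added to obtain a complete \(4 \times 4\) example, and the two blocks are combined in one matrix here purely for convenience.

```python
import numpy as np

def pool2d(x, size=2, stride=2, mode="max"):
    """Pool non-overlapping (or strided) windows with max or average."""
    reduce_fn = np.max if mode == "max" else np.mean
    out_h = (x.shape[0] - size) // stride + 1
    out_w = (x.shape[1] - size) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            out[i, j] = reduce_fn(x[r:r + size, c:c + size])
    return out

x = np.array([[0, 1, 2, 6],
              [4, 8, 3, 3],
              [5, 2, 7, 1],   # hypothetical values
              [3, 9, 0, 4]])  # hypothetical values

max_pooled = pool2d(x, mode="max")
avg_pooled = pool2d(x, mode="avg")
print(max_pooled[0, 0])  # 8.0, the maximum of 0, 1, 4, 8
print(avg_pooled[0, 1])  # 3.5, the mean of 2, 6, 3, 3
```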

The introduction of a pooling layer not only effectively compresses the amount of data and parameters, reduces the feature map dimension, and helps to reduce overfitting, but also makes the network invariant to some small local morphological changes while giving it a larger receptive field. Applying different pooling techniques can also shorten the time needed for model training and improve feature extraction and compression.

2.3 Activation function

An activation function is a mathematical function applied to the filter’s output. It plays an important role in neural networks, as it strengthens the representational and learning capabilities of the network. Without an activation function, each layer of a neural network performs only a linear summation, so the output of the next layer is merely another linear transformation of the previous layer’s output, and the whole network collapses into a single linear map. With the introduction of a nonlinear activation function, by contrast, the neural network can approximate nonlinear functions, making it applicable to a much wider range of models. In this section, we will introduce the most classical and widely used activation functions, including Sigmoid, Tanh, Softmax, ReLU, and Leaky ReLU.

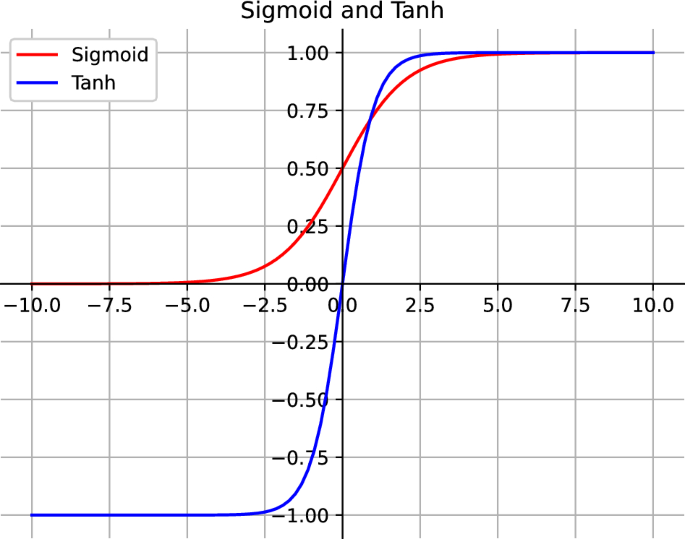

The logistic function, also known as the sigmoid, has values between 0 and 1. As can be seen in Fig. 8 , the sigmoid can be used both to normalize the output of each neuron and in models that output predicted probabilities. This is because the sigmoid maps any real input to the (0,1) interval. The following is the expression for the sigmoid function.

\[ \sigma (x) = \frac{1}{1 + e^{-x}} \tag{1} \]

Function curves of Sigmoid and Tanh

Figure 8 shows that the sigmoid gradient is smooth, preventing output values from jumping. Nevertheless, there are numerous issues with using Sigmoid. First, because the output is not zero-centered, the inputs to the neurons of the next layer suffer from a bias shift, which slows down the convergence of gradient descent and decreases the efficiency of weight updates. Secondly, the sigmoid function flattens out as its output gets closer to 0 and 1, meaning the sigmoid’s gradient approaches 0. When the network is trained by backpropagation with the sigmoid activation function, neurons with outputs near 0 or 1 barely have their weights updated because their gradients are close to 0. Furthermore, the weights of the neurons connected to such neurons are updated slowly, so the network is prone to gradient vanishing. Finally, the sigmoid function involves an exponential operation, which lengthens the model’s computation time.

Tanh, also known as the hyperbolic tangent activation function (HTAF), compresses the received input into a range of − 1 to 1. Equation ( 2 ) and Fig. 8 show the function expression and curve, respectively.

\[ \tanh (x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}} \tag{2} \]

Figure 8 shows that the Tanh and sigmoid function curves are relatively similar and resemble an S-shaped curve. Furthermore, the Tanh function can be thought of as a scaled and shifted sigmoid function. Tanh and Sigmoid have the following relationship:

\[ \tanh (x) = 2\,\sigma (2x) - 1 \]

Tanh is generally preferred over Sigmoid in practice because it improves on Sigmoid by centering the output at 0. However, like the sigmoid, when the magnitude of the input is large, the output saturates and the gradient is small, which hampers weight updating.

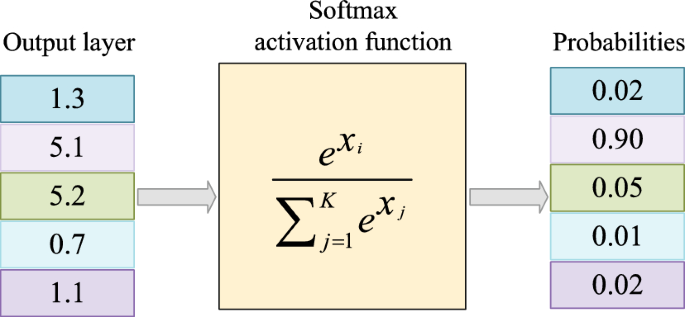

Softmax is an activation function for multi-classification problems. For any real vector of length K , Softmax activation can compress it into a real vector of length K , with values in the range (0, 1) and vector elements summing to 1. In the K classification task, these values obtained by the activation function can be used to represent the predicted probability of each category, with larger values indicating a higher probability of belonging to that category. Fig. 9 shows a 5-classification problem. All the output layer vectors (left column) are given a number (right column) within (0, 1) after Softmax, where the probability of the second row is 0.90, indicating that the input is assigned to the second category. Softmax is formulated as follows, where \(z_i\) denotes the \(i\)-th element of the input vector:

\[ \mathrm{Softmax}(z_i) = \frac{e^{z_i}}{\sum _{j=1}^{K} e^{z_j}}, \quad i = 1, \ldots , K \]

Softmax schematic

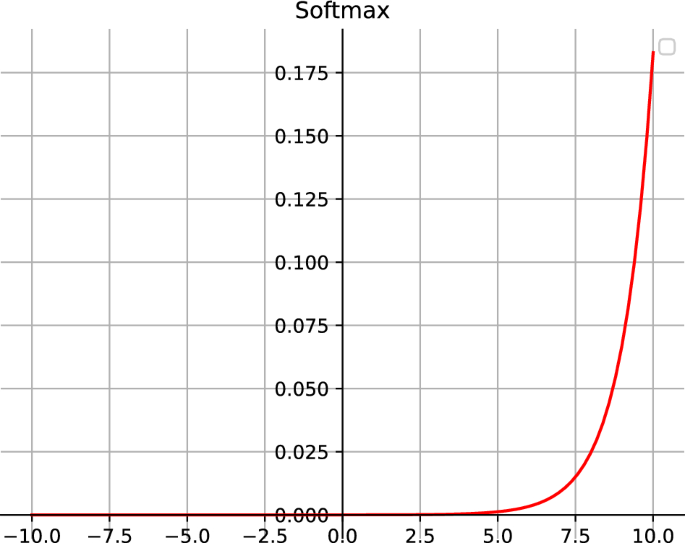

In contrast to the standard max function, which only returns the maximum value, Softmax ensures that smaller values have smaller probabilities and are not discarded outright. The denominator of the Softmax function combines all components of the original output vector, which means that the probabilities obtained by the Softmax function are correlated with each other. Because the exponential is smooth and strictly positive, Softmax is differentiable everywhere and its gradient is never exactly zero; however, when one input dominates the others, the outputs saturate near 0 or 1 and the gradients become very small. Fig. 10 depicts the Softmax function curve.

Function curves of Softmax

Softmax and Sigmoid have both similarities and differences. Softmax can be regarded as an extension of sigmoid, and softmax regression degenerates to sigmoid regression when the number of categories K = 2. A sigmoid maps a real value to the interval (0,1) and is used for binary categorization. Softmax maps a K -dimensional vector of real values ( \(a_1, a_2, \ldots , a_K\) ) to ( \(b_1, b_2, \ldots , b_K\) ), where each \(b_i\) is a value between 0 and 1 and the \(b_i\) sum to 1. The multi-categorization task can then be performed based on the probability magnitude of \(b_i\) . Although multiple sigmoids can also be combined to achieve multi-categorization, the two approaches differ: with softmax regression the classes are mutually exclusive, i.e., an input can only be categorized into one class, whereas with multiple sigmoid regressions the output classes are not mutually exclusive.

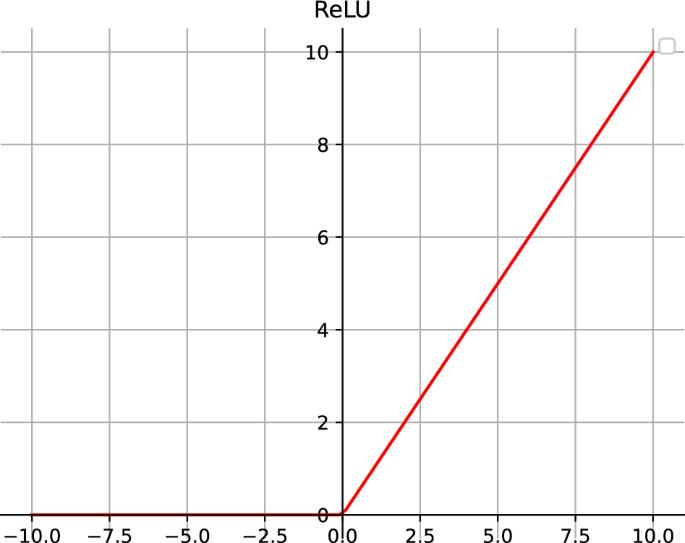

ReLU, also known as Rectified Linear Unit, is a piecewise linear function, as shown in Fig. 11 . The ReLU function is essentially a ramp function with the following formula:

\[ \mathrm{ReLU}(x) = \max (0, x) \]

Function curves of ReLU

To some extent, ReLU compensates for the shortcomings of sigmoid and tanh. When the input is positive, the derivative is 1, which alleviates the gradient vanishing problem and speeds up gradient descent convergence. Second, because the ReLU function requires only a comparison rather than an exponential, it is faster to compute than the sigmoid and tanh functions. However, this activation function suffers from the Dead ReLU problem. (If the input is negative, the gradient will be exactly zero, and the ReLU neurons are more likely to “die” during training.) Similar to Sigmoid, the output of the ReLU function is not zero-centered, which introduces a bias offset to the neural network in the next layer, affecting the efficiency of gradient descent.

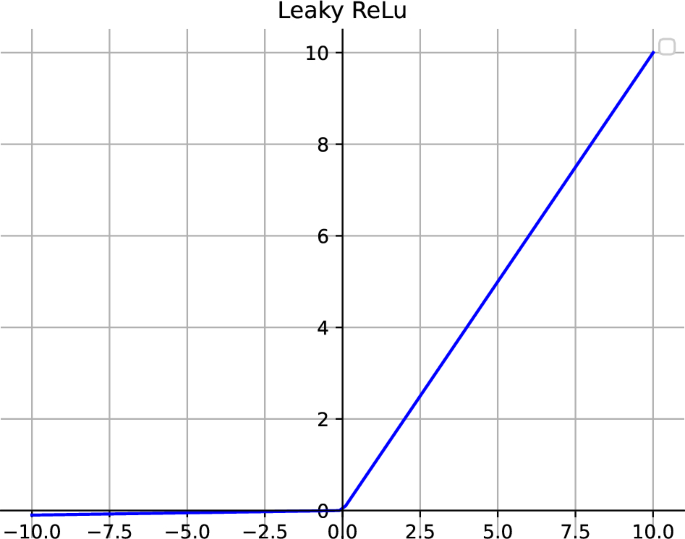

To address the zero gradient of ReLU when x < 0, Leaky ReLU is used, a function that tries to fix the Dead ReLU problem. The function expression is as follows:

\[ \mathrm{LeakyReLU}(x) = \begin{cases} x, & x \ge 0 \\ a x, & x < 0 \end{cases} \]

where a is a small positive constant, such as 0.01 or 0.1. Fig. 12 shows the curve for a = 0.01.

Function curves of Leaky ReLU

Leaky ReLU mitigates the Dead ReLU problem to some extent by giving very small linear components to the negative inputs to adjust for the zero gradients of the negatives, extending the range of ReLU. Although Leaky ReLU has all the features of ReLU, such as being computationally efficient, having fast convergence, and not saturating in positive regions, it has not been fully proven in practice that Leaky ReLU is always better than ReLU.
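The five activation functions of this section can be sketched in NumPy using their standard definitions. The function names, the sample inputs, and the max-subtraction trick for numerical stability in `softmax` are choices made for this illustration. The sketch also checks two relationships numerically: \(\tanh (x) = 2\sigma (2x) - 1\), and the reduction of a two-class Softmax to a sigmoid of the difference of its inputs.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(z):
    # Subtracting the maximum leaves the result unchanged but avoids overflow.
    e = np.exp(z - np.max(z))
    return e / e.sum()

def relu(x):
    return np.maximum(0.0, x)

def leaky_relu(x, a=0.01):
    return np.where(x >= 0, x, a * x)

x = np.linspace(-3.0, 3.0, 7)

# Tanh as a scaled and shifted sigmoid.
tanh_from_sigmoid = 2.0 * sigmoid(2.0 * x) - 1.0
print(np.allclose(np.tanh(x), tanh_from_sigmoid))  # True

# Softmax outputs lie in (0, 1) and sum to 1.
probs = softmax(np.array([1.0, 2.0, 3.0]))
print(np.isclose(probs.sum(), 1.0))  # True

# With K = 2, Softmax reduces to a sigmoid of the input difference.
two_class = softmax(np.array([0.7, -0.4]))
print(np.isclose(two_class[0], sigmoid(0.7 - (-0.4))))  # True

# ReLU zeroes negative inputs; Leaky ReLU keeps a small slope there.
print(relu(x))
print(leaky_relu(x))
```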

The essence of deep learning lies in continuously updating weights to find values that minimize loss. When dealing with complex tasks, deep networks outperform shallow ones. However, in deep neural networks, gradients are unstable, either vanishing or exploding, caused by the compounding effect of multiplication in gradient backpropagation. For example, the backpropagation (BP) algorithm, based on gradient descent, adjusts parameters in the negative gradient direction of the objective. Gradient calculation involves the derivative of the activation function. If the derivative is greater than 1, as network layers increase, the computed gradient update grows exponentially, leading to a gradient explosion. This results in significant updates to network weights, making the network unstable. If the derivative is less than 1, the gradient update information decays exponentially with increasing layers, causing the vanishing gradient problem. This prevents the model from learning effectively from training data, even with prolonged training.

Choosing the appropriate activation function can effectively alleviate the issues of gradients vanishing and exploding. If the derivative of the activation function is 1, the activation itself neither shrinks nor amplifies the gradient, and each layer of the network can update at a similar rate. Sigmoid and Tanh are two classic activation functions, but Sigmoid has a drawback: when the magnitude of x is large, the derivative is close to 0, and the maximum value of the Sigmoid function’s derivative is 0.25. If Sigmoid is used as the activation function, its gradient cannot exceed 0.25. Consequently, gradient vanishing is likely to occur when the chain rule is applied across many layers in backpropagation. Similar to Sigmoid, using Tanh as an activation function may still lead to gradient vanishing; although its derivative is larger than that of Sigmoid, it reaches 1 only at x = 0 and is smaller everywhere else. Therefore, Sigmoid and Tanh are generally not well suited to the hidden layers of deep networks. The derivative of ReLU is constantly 1 in the positive part, so using ReLU as the activation function mitigates the vanishing gradient problem: gradients pass through active units unchanged, while units with negative inputs contribute no gradient. ReLU does not by itself prevent gradient explosion, which also depends on the magnitude of the weights, and the bounded derivatives of Sigmoid and Tanh limit only the contribution of the activation to the gradient. The activation function acts as a decision function and aids in the learning of complex patterns. Choosing an appropriate activation function can hasten the learning process. Different activation functions are appropriate for various application scenarios, but ReLU and its variants are generally preferred because they aid in overcoming the vanishing gradient problem (Nwankpa et al. 2018).
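The effect of the activation derivative on deep networks can be illustrated with a short calculation. The sketch below deliberately ignores the weight matrices, which also enter the chain rule, and looks only at the best case for each activation; the function names and the chosen depths are assumptions for the example.

```python
import numpy as np

def sigmoid_grad(x):
    s = 1.0 / (1.0 + np.exp(-x))
    return s * (1.0 - s)

def tanh_grad(x):
    return 1.0 - np.tanh(x) ** 2

def relu_grad(x):
    return np.where(np.asarray(x) > 0, 1.0, 0.0)

# Largest possible derivative of each activation.
print(sigmoid_grad(0.0))  # 0.25
print(tanh_grad(0.0))     # 1.0
print(relu_grad(3.0))     # 1.0 for any positive input

# Upper bound on the activation factor of the gradient after d sigmoid layers.
depths = [1, 5, 10, 20]
sigmoid_bound = [sigmoid_grad(0.0) ** d for d in depths]
for d, bound in zip(depths, sigmoid_bound):
    print(d, bound)
```

Even in the best case, ten sigmoid layers scale the gradient by less than one millionth, whereas a chain of active ReLU units contributes a factor of exactly 1.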

2.4 Batch normalization

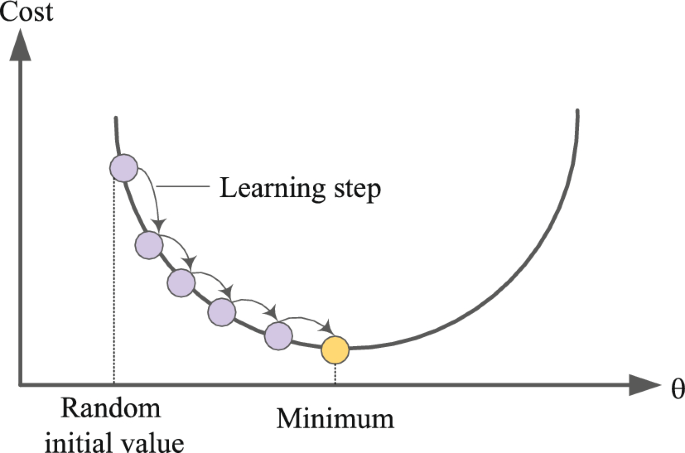

Gradient descent is a very versatile optimization algorithm that is well suited to solving a range of problems. The whole idea of gradient descent is to minimize the objective function by iteratively updating the parameters in the opposite direction of the gradient of the objective function. The gradient at a point gives the direction along which the directional derivative of the function is largest, i.e., the direction of steepest ascent. The gradient descent algorithm is shown in Fig. 13 , where a random initial value is chosen, the gradient at that point is calculated, and then the independent variables are updated in the direction opposite to the gradient until the value of the function changes very little or the maximum number of iterations is reached. The formula is as follows:

\[ \theta \leftarrow \theta - \alpha \nabla _{\theta } J(\theta ) \]

where \(\theta \) is the parameter to be solved, \(\alpha \) is the learning rate, which represents the learning step for each optimization, and \(J(\theta )\) is the objective function.

Gradient descent algorithm

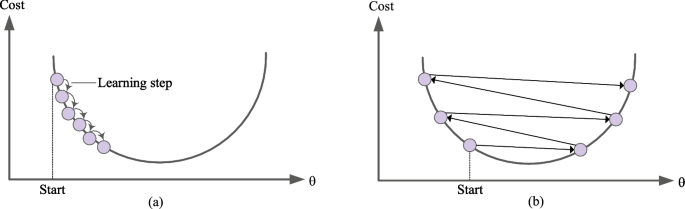

The learning step is an important parameter of gradient descent that determines how far to advance on the objective function at each iteration in the search for the minimum. There are two extremes that can occur with the setting of the learning step, as shown in Fig. 14 : (a) If the learning step is too small, the algorithm has to go through many iterations before it converges, which is very time-consuming. (b) On the other hand, if the learning step is too large, the updates overshoot the minimum, and the algorithm may oscillate or even diverge.

Algorithms for gradient descent with excessively small or large learning steps
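The influence of the learning step can be reproduced on a toy problem. The sketch below applies the update \(\theta \leftarrow \theta - \alpha \nabla J(\theta )\) to the objective \(J(\theta ) = \theta ^2\); the objective, the starting point, and the three learning rates are assumptions chosen only to make the two extremes visible.

```python
def gradient_descent(grad, theta0, alpha, steps):
    """Repeat theta <- theta - alpha * grad(theta) and record every iterate."""
    theta = theta0
    history = [theta]
    for _ in range(steps):
        theta = theta - alpha * grad(theta)
        history.append(theta)
    return history

def grad_j(theta):
    # Gradient of the toy objective J(theta) = theta ** 2.
    return 2.0 * theta

too_small = gradient_descent(grad_j, theta0=5.0, alpha=0.001, steps=50)
moderate = gradient_descent(grad_j, theta0=5.0, alpha=0.1, steps=50)
too_large = gradient_descent(grad_j, theta0=5.0, alpha=1.1, steps=50)

print(too_small[-1])  # still far from the minimum at 0
print(moderate[-1])   # very close to 0
print(too_large[-1])  # magnitude has grown: the iterates diverge
```

For this objective each step multiplies \(\theta \) by \(1 - 2\alpha \), so the iterates converge only when \(0< \alpha < 1\), and they oscillate around the minimum once \(\alpha > 0.5\).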

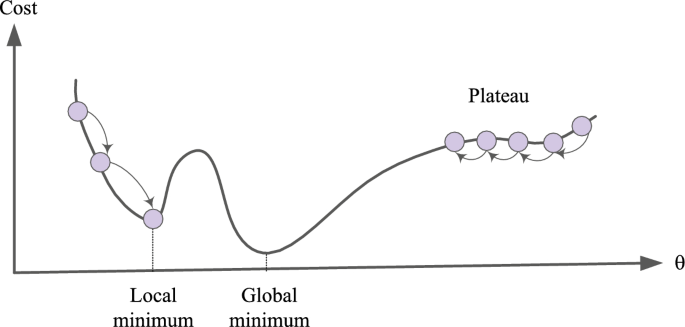

However, not all objective functions resemble a standard bowl. They may contain holes, ridges, plateaus, or other irregular terrain that makes convergence difficult. Fig. 15 depicts the two main gradient descent challenges: if a random initial value is chosen on the left side of the figure, the algorithm converges to a local minimum that is greater than the global minimum. If it starts on the right, it takes a long time to cross the plateau, and if training stops too early, it never reaches the global minimum.

Two main gradient descent challenges

To address the various problems of gradient descent algorithms, the Google team proposed the idea of batch normalization (BN) (Ioffe and Szegedy 2015). BN is a neural network regularization technique that unifies the distribution of feature-map values by normalizing them to zero mean and unit variance. Furthermore, the BN layer helps to alleviate the problem of gradient vanishing and gradient explosion, improves the network’s adaptability to different input data, speeds up the neural network’s training process, and improves the network’s generalization. It also helps to mitigate the dying ReLU problem and makes the network less sensitive to weight initialization.
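A training-time forward pass of batch normalization can be sketched as follows. The learnable scale `gamma` and shift `beta` and the small constant `eps` follow the standard formulation of Ioffe and Szegedy (2015) and are not spelled out in the text above; the input data is hypothetical, and the running statistics used at inference time are omitted from this sketch.

```python
import numpy as np

def batch_norm(x, gamma, beta, eps=1e-5):
    """Normalize each feature over the batch axis, then scale and shift."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)  # zero mean, unit variance
    return gamma * x_hat + beta

# Hypothetical mini-batch: 64 samples with 8 features each.
rng = np.random.default_rng(0)
x = rng.normal(loc=10.0, scale=3.0, size=(64, 8))

y = batch_norm(x, gamma=np.ones(8), beta=np.zeros(8))
print(y.mean(axis=0).round(6))  # approximately 0 for every feature
print(y.var(axis=0).round(3))   # approximately 1 for every feature
```

For convolutional feature maps the statistics are usually computed per channel, over the batch and both spatial axes, rather than per individual activation.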

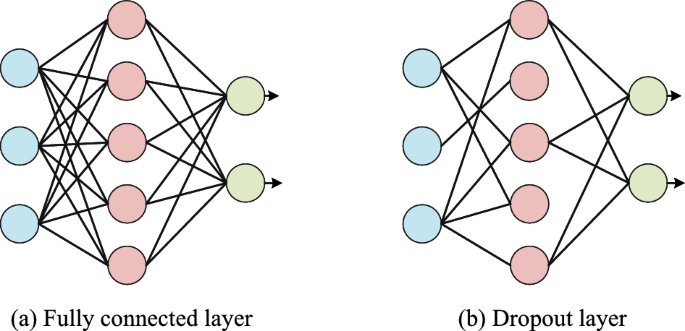

2.5 Dropout

Dropout facilitates regularization in the network by randomly omitting some units or connections with a predetermined probability, which eventually enhances generalization. This random dropping of some connections or units results in several thinned network architectures, from which one representative network is chosen with low weights. This chosen architecture is then regarded as an approximation of all proposed networks (Srivastava et al. 2014 ). Fig. 16 depicts the distinction between a fully connected layer and a dropout layer.

Distinction between a fully connected layer and a dropout layer
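A minimal sketch of the random omission described above is given below. It uses the "inverted dropout" convention, in which surviving activations are rescaled during training so that nothing changes at inference; that convention, the drop probability, and the fixed seed are assumptions for illustration.

```python
import random


def dropout(activations, p_drop, training=True, rng=random):
    """Zero each unit with probability p_drop; rescale survivors by 1/(1-p_drop)."""
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)               # fixed seed so the sketch is repeatable
hidden = [1.0] * 10000
dropped = dropout(hidden, p_drop=0.5, rng=rng)
unchanged = dropout(hidden, p_drop=0.5, training=False)
```

Each call during training samples a different thinned network, while the rescaling keeps the expected activation the same as in the full network.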

2.6 Fully connected layer

A fully connected layer is a global operation, as opposed to convolution and pooling, and is typically placed at the end of the network for classification. As in a multi-layer perceptron (MLP) (Isabona et al. 2022), each neuron in the fully connected layer is connected to every neuron in the preceding layer. Once the feature maps obtained after several convolution and pooling operations are sufficient to recognize the features of the image, the next question is how to perform the classification. Generally, the CNN flattens the final feature maps into one long vector and sends it to the fully connected layer, followed by the output layer, for classification. For example, for a three-class image classification problem, the output layer of the CNN has three neurons. In addition, the fully connected layer can integrate the class-discriminative local information produced by the convolution or pooling layers (Sainath et al. 2013).
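The flatten, fully connected, and output steps can be sketched for the three-class case. The feature-map values and weights below are made up for illustration (they are not learned), and the softmax output layer is an assumption about how the three neurons are turned into class probabilities.

```python
import math


def flatten(feature_maps):
    """Concatenate a list of 2-D feature maps into one long vector."""
    return [v for fmap in feature_maps for row in fmap for v in row]


def fully_connected(x, weights, biases):
    """Each output neuron is a weighted sum over every input element."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def softmax(scores):
    m = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Two hypothetical 2x2 feature maps -> vector of length 8 -> three class scores.
maps = [[[1.0, 0.0], [0.5, 0.2]], [[0.3, 0.9], [0.0, 0.4]]]
x = flatten(maps)
W = [[0.1] * 8, [0.2] * 8, [-0.1] * 8]   # illustrative weights, not learned
b = [0.0, 0.0, 0.0]
probs = softmax(fully_connected(x, W, b))
predicted_class = probs.index(max(probs))
```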

3 Image classification

3.1 Subtask explanation

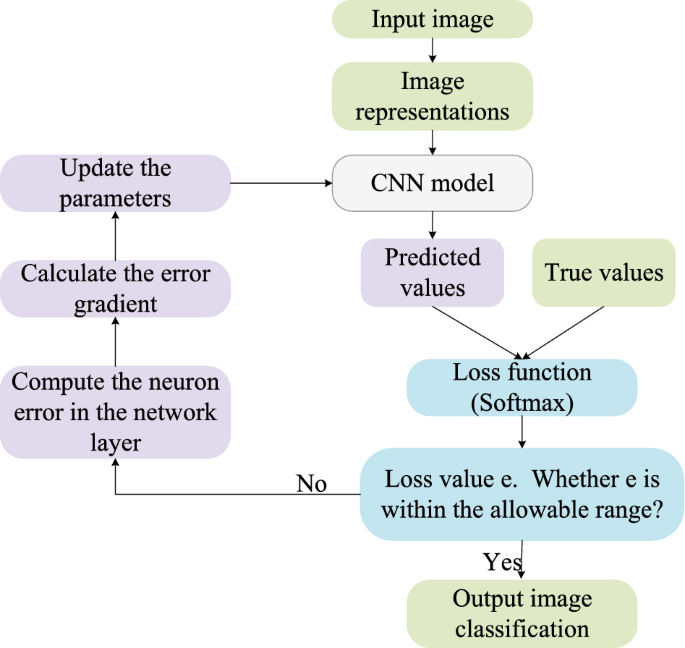

Image classification (Chandra and Bedi 2021 ), which seeks to differentiate between distinct classes of objects, such as flowers, figures, and vehicles, based on various properties reflected in the image, is one of the fundamental challenges in computer vision. In other words, a computer can identify the class to which the objects in an image or video belong. The main process of image classification includes preprocessing the original image, extracting image features, and classifying the image using a pre-trained classifier, in which the extraction of image features plays a pivotal role. The data flow diagram for image classification is shown in Fig. 17 . Traditional image classification algorithms can achieve the expected results in simple classification tasks. However, their performance in complex classification tasks is not satisfactory. CNN uses convolution kernels to extract features from the original input and automatically learns feature representations from massive sample data, giving the trained models stronger generalization abilities when compared to conventional image classification algorithms that manually extract features.

Data flow diagram for image classification

3.2 AlexNet

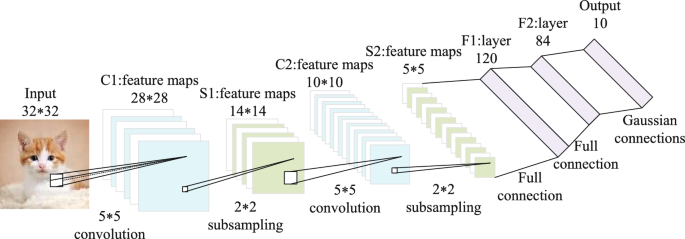

LeNet was proposed by LeCun in 1998 (LeCun et al. 1998 ). LeNet is a feed-forward neural network consisting of two fully connected layers after five alternating layers of pooling and convolution. LeNet-5 is a LeNet extension and improvement that adds more convolution and fully connected layers. As shown in Fig. 18 , the LeNet-5 network model has seven layers. LeNet-5 can share convolution kernels, reduce network parameters, perform well on the small-scale MNIST dataset, and achieve more than 98 \(\%\) accuracy. CNN was first used for image recognition tasks thanks to the work of LeNet and LeNet-5, which also offered crucial lessons and insights for the later creation of deeper neural networks.

Architecture of LeNet-5

The concepts presented by Hubel and Wiesel in their seminal 1968 paper served as the foundation for the idea that LeCun and his colleagues implemented (Hubel and Wiesel 1968). The study on the striate cortex in monkeys categorized cells as simple, complex, or hypercomplex. It found smaller receptive fields, increased sensitivity to stimulus orientation, and a minority of cells with color-coding abilities. The evidence supports two vertical column systems in the studied cortex. The first type features columns with cells sharing receptive-field orientations, akin to cat orientation columns but likely smaller. The second system organizes cells into columns based on eye preference, with larger ocular dominance columns. The boundaries of the two systems appear to be independent. The cortex exhibits dual organization patterns: a vertical system aligns cells with common features along a line, mapping stimulus dimensions independently in superimposed mosaics. The horizontal system segregates cells hierarchically in layers, with lower orders (monocularly driven simple cells) near layer IV and higher orders in the upper and lower layers. These findings not only address the organizational aspects of receptive fields and functional structure but also provide a crucial foundation for further research into information processing in the cortical region of the brain.

However, due to the low performance of the hardware and the insufficiently rich dataset at that time, LeNet was not suitable for complex problems. In 2012, Krizhevsky et al. proposed AlexNet (Alom et al. 2018 ), which consists of five convolution layers and three fully connected layers. Each convolution layer contains a convolution kernel, a bias term, a ReLU activation function, and a local response normalization (LRN) module. The first convolution layer convolves the \(224 \times 224 \times 3\) input image using 96 convolution kernels of size \(11 \times 11 \times 3\) and stride 4. The second convolution layer takes the output of the first convolution layer as input and filters it with \(5 \times 5 \times 48\) kernels. The third, fourth, and fifth convolution layers are connected to each other, with no pooling layer in between. The kernels of the second, fourth, and fifth convolution layers are only connected to those kernel maps of the previous convolution layer that are also located on the same GPU. The kernels of the third convolution layer are connected to all the kernel mappings of the second convolution layer. The neurons in the fully connected layer are connected to all the neurons in the previous layer. The response normalization layer follows the first and second convolution layers. The max pooling layer follows the response normalization layer and the fifth convolution layer. The image is convolved, fully connected, and finally fed into a Softmax classifier with 1000 nodes, which converts the output of the network into probabilistic values that can be used to predict the category of the image.
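As a quick arithmetic check on the first convolution layer, the sketch below applies the standard output-size formula \(\lfloor (n + 2p - k)/s \rfloor + 1\). The text does not state a padding value; `padding=2` is an assumption chosen so that a \(224 \times 224\) input yields a whole number of positions.

```python
def conv_output_size(n, k, stride, padding=0):
    """Spatial size after a convolution: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - k) // stride + 1


def conv_params(k, in_channels, out_channels):
    """Weights plus one bias per kernel."""
    return out_channels * (k * k * in_channels + 1)


# First AlexNet layer as described above; padding=2 is an assumption.
out_size = conv_output_size(224, 11, stride=4, padding=2)
n_params = conv_params(11, in_channels=3, out_channels=96)
print(out_size, n_params)
```

Under these assumptions the layer produces 96 feature maps of size \(55 \times 55\) from 34,944 learnable parameters.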

The image classification task of the ILSVRC reflects the most notable breakthrough of deep CNN in this area. In the 2012 ILSVRC, AlexNet demonstrated the potential of deep learning and finally won the competition with a Top-5 classification error rate of 16.4 \(\%\) , surpassing the performance of the second-place algorithm that performed classification by traditional methods. This competition attracted the attention of many researchers, and since then, improved algorithms based on CNN have also obtained excellent results in the ImageNet competition. Meanwhile, AlexNet became the dividing line between traditional and deep learning algorithms and was the first deep CNN model in modern times. Distinguished from traditional algorithms, AlexNet adopts many modern technical methods of deep convolutional networks for the first time, including using dual GPU parallel convolution operations in training, which overcomes the limitation of hardware resources on the learning ability and thus accelerates the training of the model. In order to address the gradient disappearance issue and hasten the convergence of the network model, after convolution filtering, the output excitation of the convolution layer is obtained using the ReLU activation function, which is then output to the subsequent convolution layer after local response normalization and down-sampling operations. By utilizing dropout and data augmentation approaches, AlexNet also lessens the model’s overfitting.

3.3 Visual geometry group

To examine the impact of a CNN’s depth on its accuracy, Simonyan and Zisserman (Sengupta et al. 2019) conducted a comprehensive evaluation of network models of increasing depth, using small convolution filters (3 \(\times \) 3) instead of the previous large convolution kernels (5 \(\times \) 5), and proposed a series of Visual Geometry Group (VGG) models in 2014. With a Top-5 classification error rate of 7.3 \(\%\) , VGG finished second in ILSVRC 2014. Compared with earlier neural network models, VGG reduced the size of the convolution kernels while increasing the number of network layers. The small convolution kernels used in VGG, as opposed to the larger kernels used in AlexNet, lower the computational complexity and the number of training parameters. VGG also supports the hypothesis that performance can be improved by continually deepening the network. To date, VGG-16 is still widely used in various tasks due to its simple structure and its applicability to transfer learning.

3.4 GoogLeNet

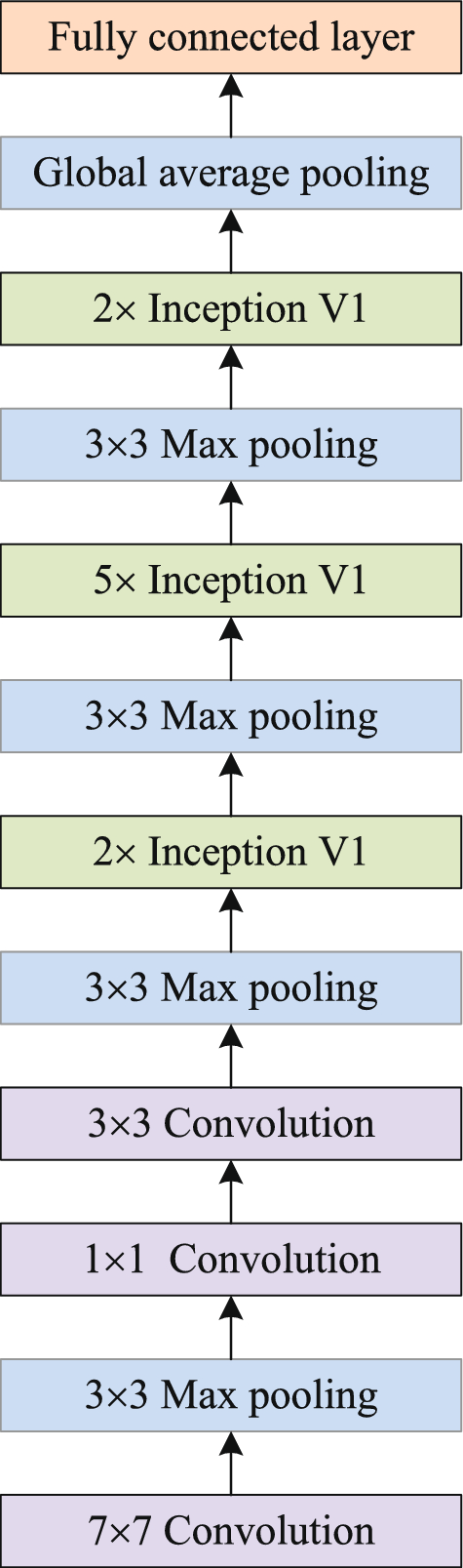

The champion model of the 2014 ILSVRC is GoogLeNet (Khan et al. 2019). As shown in Fig. 19, GoogLeNet consists of nine Inception V1 modules, five down-sampling layers, and a number of other convolution and fully connected layers. Although GoogLeNet has more layers, it has fewer parameters than VGG. Consequently, when computer hardware resources are restricted, GoogLeNet is a superior solution for image classification. A GoogLeNet convolution layer applies several convolutions of different sizes in parallel, allowing for the production of dense data while making optimal use of processing resources. Additionally, it makes use of sparse connections to eliminate redundant data and cuts costs by skipping irrelevant feature maps. Finally, GoogLeNet reduces the connection density by adopting global average pooling rather than a fully connected layer.

Architecture of GoogLeNet

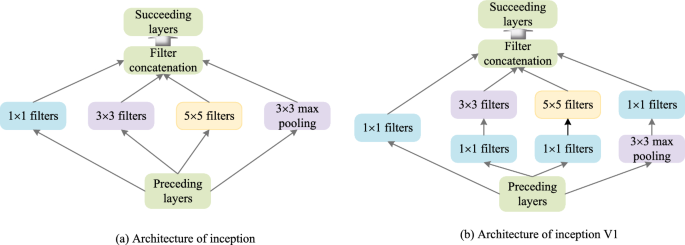

By adding more hidden layers to a CNN, the recognition accuracy and performance of deep neural networks can be enhanced (Szegedy et al. 2015), but this can lead to several issues. On the one hand, as the number of network layers rises, the network must learn more parameters, which easily leads to overfitting the training data set. On the other hand, networks with extra layers require robust hardware resources to provide the necessary processing power. To overcome these problems, the research team at Google developed the concept of Inception (Al Husaini et al. 2022), which aims to build the underlying neurons and a network topology for sparse high-performance computing. The original Inception structure is displayed in Fig. 20a. Based on experimental results, it was concluded that the structure’s 5 \(\times \) 5 convolution is the root cause of the excessive parameter count. As a result, a new structure called Inception V1 was proposed, shown in Fig. 20b. The main idea of Inception V1 is to extract feature information from the preceding layer with three different-sized convolution kernels, fuse the results, and pass them to the succeeding layer. The 1 \(\times \) 1 convolution kernel is used for dimension reduction: placed before the 3 \(\times \) 3 and 5 \(\times \) 5 convolution layers, it reduces their computation and avoids the large cost that would otherwise come with increasing network size. The following layer can extract more valuable features at various scales by combining the features of the four branches.

Architecture of inception and inception V1
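The saving from the 1 \(\times \) 1 reduction can be estimated by counting multiplications. The branch dimensions below (a \(28 \times 28 \times 192\) input, 16 reduced channels, 32 output channels) are assumed purely for illustration, and biases are ignored.

```python
def conv_mults(h, w, k, c_in, c_out):
    """Multiplications for a stride-1, same-padded k x k convolution."""
    return h * w * c_out * k * k * c_in


# Hypothetical branch: 28 x 28 x 192 input, 32 output channels from 5 x 5 kernels.
direct = conv_mults(28, 28, 5, 192, 32)

# Same branch with a 1 x 1 reduction to 16 channels first.
reduced = conv_mults(28, 28, 1, 192, 16) + conv_mults(28, 28, 5, 16, 32)

print(direct, reduced, round(direct / reduced, 1))
```

For these assumed sizes, the reduced branch needs roughly a tenth of the multiplications of the direct 5 \(\times \) 5 convolution.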

Following Inception V1, Szegedy et al. proposed several optimizations to the Inception V1 structure and released the Inception V2 model in 2015 (Szegedy et al. 2016). To reduce the number of parameters and make the extracted features more discriminative, Inception V2 replaces each \(5 \times 5\) convolution kernel with two \(3 \times 3\) convolution kernels, and replaces each \(\text {n} \times \text {n}\) convolution kernel with a \(\text {1} \times \text {n}\) kernel followed by an \(\text {n} \times \text {1}\) kernel. In addition, the pooling layer is optimized using a parallel structure to cut down on computation. Furthermore, overfitting is reduced by smoothing the probability distribution of the labels. Inception V3 is an improved version of Inception V1 and V2. The idea of Inception V3 was to reduce the computational cost of deep networks without affecting generalization. For this purpose, Szegedy et al. replaced large-size filters ( \(5 \times 5\) and \(7 \times 7\) ) with small and asymmetric filters ( \(1 \times 7\) and \(1 \times 5\) ) and used \(1 \times 1\) convolution as a bottleneck before the large filters (Szegedy et al. 2017).
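As a rough check on these parameter savings, consider a layer with \(C\) input channels and \(C\) output channels and ignore biases (both are simplifying assumptions, not from the original). Counting weights for the two factorizations gives:

$$\underbrace{2 \cdot (3 \cdot 3)\, C^2}_{\text {two } 3 \times 3 \text { layers}} = 18C^2 \;<\; 25C^2 = \underbrace{(5 \cdot 5)\, C^2}_{\text {one } 5 \times 5 \text { layer}}$$

$$\underbrace{(1 \cdot n + n \cdot 1)\, C^2}_{1 \times n \text { then } n \times 1} = 2nC^2 \;<\; n^2C^2 = \underbrace{(n \cdot n)\, C^2}_{\text {one } n \times n \text { layer}} \quad (n > 2)$$

Two stacked \(3 \times 3\) layers cover the same \(5 \times 5\) receptive field with 28 \(\%\) fewer weights, and for \(n = 7\) the asymmetric pair uses \(14C^2\) weights instead of \(49C^2\).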

3.5 Residual network

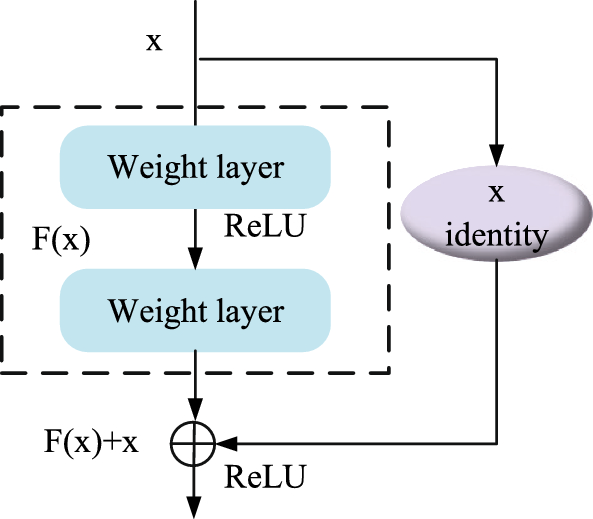

A degradation problem emerges when deeper neural networks start to converge: as network depth increases, accuracy saturates and then rapidly declines. This degradation is not caused by overfitting; rather, adding more layers leads to higher training error. Prior to the residual network (ResNet) (Wightman et al. 2021), networks had relatively low layer counts; for example, the 2014 VGG network had only 19 layers. ResNet, on the other hand, maintains greater accuracy with a depth of 152 layers. ResNet draws on the highway network concept (Srivastava et al. 2015) and is composed of stacked residual blocks. The structure of a residual block is illustrated in Fig. 21. In addition to containing weighted layers, a residual block directly connects the input x to the output through a shortcut connection. The residual mapping is denoted as F(x), and the output is obtained by adding the residual mapping to the input, giving F(x) + x, which represents the original mapping. The residual network encourages the stacked weighted layers to fit the residual mapping F(x) rather than the original mapping. Learning the residual mapping is simpler and more easily optimized than learning the original mapping. Furthermore, the shortcut connections enable the exchange of features between different layers, which to some extent alleviates the vanishing gradient problem. The residual network reduced the Top-5 error rate on the image classification task to 3.6 \(\%\) .

A residual block
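The shortcut connection can be sketched with plain matrix-vector products standing in for the weighted layers. Using two layers inside the block and applying a ReLU after the addition are assumptions for illustration; the weights are chosen by hand to make the behaviour easy to see.

```python
def relu(v):
    return [max(0.0, x) for x in v]


def linear(v, weights):
    """Stand-in for a weighted layer: a plain matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def residual_block(x, w1, w2):
    """Return relu(F(x) + x), where F is two weighted layers with a ReLU between."""
    fx = linear(relu(linear(x, w1)), w2)           # residual mapping F(x)
    return relu([f + xi for f, xi in zip(fx, x)])  # shortcut adds the input x


x = [1.0, 2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]
eye = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]

# With all-zero weights F(x) = 0, so the block reduces to the identity mapping.
identity_out = residual_block(x, zeros, zeros)
# With identity weights F(x) = x, so the output is F(x) + x = 2x.
doubled_out = residual_block(x, eye, eye)
```

The zero-weight case shows why extra residual blocks need not hurt: a block can pass its input through unchanged simply by driving F(x) towards zero.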

3.6 Squeeze and excitation network

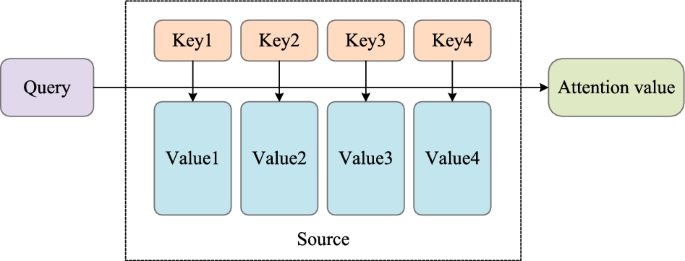

In recent years, the attention mechanism has been another focus of CNN research. When the human eye scans an image, it first looks at the whole picture and then concentrates on the valuable details while ignoring the less valuable parts. When designing neural network models, we hope that the models can have the same ability. Attention can be understood as selectively filtering out a small amount of important information from a large pool of data and focusing on it, while ignoring the majority of less significant information. The process of focusing is reflected in the calculation of weight coefficients, where a larger weight indicates a stronger focus on the corresponding Value. In other words, the weight represents the importance of the information, and the Value is the information itself. The attention mechanism can be understood as follows (refer to Fig. 22). Imagine the constituent elements of Source as a series of <Key,Value> data pairs. Given an element Query in Target, the similarity or correlation between Query and each Key is calculated to obtain the weight coefficient of the Value corresponding to that Key, and the Values are then weighted and summed to give the final Attention value. The attention mechanism is therefore essentially a weighted sum of the Values of the elements in Source, with Query and Key used to calculate the weight coefficients of the corresponding Values.

The essential idea of attention

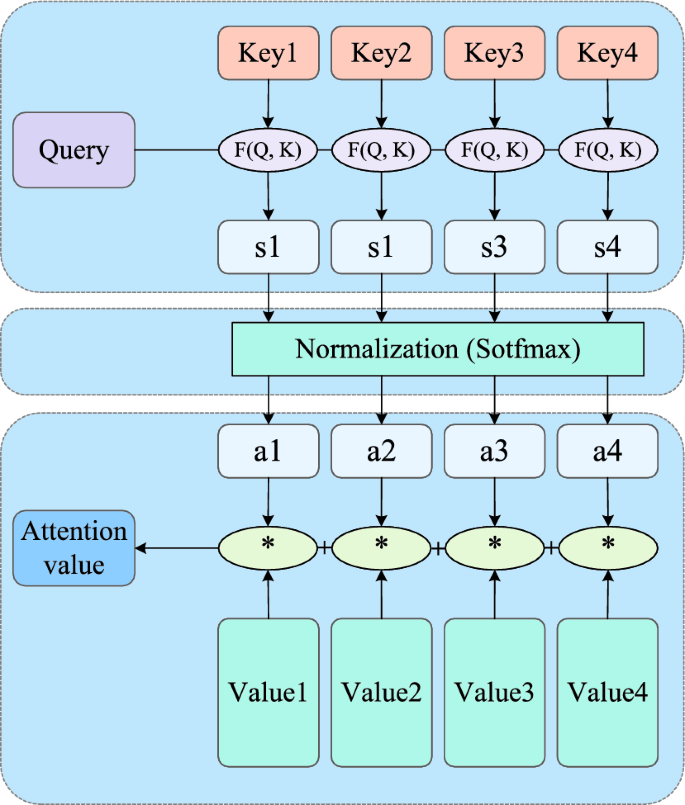

The specific calculations of the attention mechanism can be abstracted into two processes: the first calculates weight coefficients based on Query and Key, and the second weights and sums the Values based on these weight coefficients. The first process can be further divided into two stages: the first stage computes the similarity or correlation between Query and Key, and the second stage normalizes the raw scores obtained in the first stage. Together these give the three-stage calculation process of attention illustrated in Fig. 23.

Three-stage process for computing attention

In the first stage, various computation mechanisms can be introduced to calculate the similarity or correlation between Query and a given Key. The most common methods include computing the dot product of their vectors and calculating the cosine similarity, as illustrated below:

$$Sim(Query, Key_i) = Query \cdot Key_i$$

$$Sim(Query, Key_i) = \frac{Query \cdot Key_i}{\Vert Query\Vert \cdot \Vert Key_i\Vert }$$

Due to the different methods used, the values produced in the first stage can have different ranges. In the second stage, a computation method similar to Softmax is introduced to transform the scores obtained in the first stage. On the one hand, this normalization turns the raw scores into a probability distribution in which the weights of all elements sum to 1. On the other hand, the intrinsic mechanism of Softmax helps emphasize the weights of important elements. Typically, the calculation is performed using the following formula:

$$a_i = Softmax(Sim_i) = \frac{e^{Sim_i}}{\sum _{j=1}^{L_x} e^{Sim_j}}$$

where \(L_x = |Source|\) represents the length of the Source and \(Sim_i\) is the first-stage score for \(Key_i\) . The computed result \(a_i\) from the second stage is the weight coefficient corresponding to \(Value_i\) . Then, by performing a weighted sum, the attention value can be obtained:

$$Attention(Query, Source) = \sum _{i=1}^{L_x} a_i \cdot Value_i$$
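The three stages described above can be sketched in a few lines. The sketch uses dot-product similarity in the first stage; the Query, Key, and Value vectors are made-up examples.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attention(query, keys, values):
    """Three stages: similarity scores, softmax weights, weighted sum of values."""
    scores = [dot(query, k) for k in keys]        # stage 1: Query-Key similarity
    m = max(scores)                               # subtract max for stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]           # stage 2: weights sum to 1
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values))
              for d in range(dim)]                # stage 3: weighted sum of Values
    return weights, output


query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, output = attention(query, keys, values)
```

The Key most similar to the Query receives the largest weight, so its Value dominates the output.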

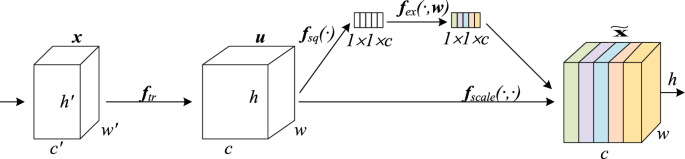

Focusing on channel attention research, Hu et al. proposed the squeeze-and-excitation block (SE block). The SE block explicitly models interdependencies between channels to recalibrate the feature responses within channels. This involves selectively enhancing useful channel features while suppressing irrelevant ones. Like ResNet before it, the squeeze-and-excitation network (SENet) (Jin et al. 2022) won the ImageNet competition, in 2017, with a much lower error rate than previous models and low network complexity. The two primary parts of SENet are squeeze and excitation. A block of squeeze-and-excitation networks is shown in Fig. 24. The \({\textbf {f}}_{tr}\) in the figure is the traditional convolution structure, and \({\textbf {x}}\) and \({\textbf {u}}\) are the input and output of \({\textbf {f}}_{tr}\) , which are already present in previous structures. The part added by SENet is everything after \({\textbf {u}}\) . In the image recognition task, the feature map \({\textbf {u}}\) has dimensions h , w , and c , where h stands for height, w for width, and c for channel count. The squeeze component compresses the \(h\times w\times c\) feature map into \(1\times 1\times c\) , that is, it condenses each \(h\times w\) map into a single value, typically using global average pooling ( \({\textbf {f}}_{sq}\) (.) in Fig. 24). The \(1\times 1\times c\) output is then passed through fully connected layers ( \({\textbf {f}}_{ex}\) (.) in Fig. 24, the excitation process), where a self-gating mechanism learns the excitation of each channel. These values are used to scale the c channels of \({\textbf {u}}\) , and the result serves as the next level’s input. By controlling the scale of each channel, squeeze-and-excitation networks strengthen important channel features while weakening unimportant ones, which yields good results and offers a new direction for future research.

A block of squeeze-and-excitation networks
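A minimal sketch of the squeeze, excitation, and scale steps follows. The excitation is written as two fully connected layers (a bottleneck with ReLU, then a sigmoid gate), which is an assumption about the form of \({\textbf {f}}_{ex}\) ; the weights `w1` and `w2` and the feature-map values are hypothetical and not learned.

```python
import math


def se_block(u, w1, w2):
    """Recalibrate channels of u (c maps of h x w) with squeeze and excitation."""
    # Squeeze: global average pooling turns each h x w map into one number.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in u]
    # Excitation: bottleneck FC + ReLU, then FC + sigmoid gate per channel.
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * hi for w, hi in zip(row, hidden))))
             for row in w2]
    # Scale: multiply every value in a channel by that channel's gate.
    scaled = [[[g * v for v in row] for row in ch] for ch, g in zip(u, gates)]
    return gates, scaled


# Two 2 x 2 channels; weights are illustrative (c = 2, hidden size 1).
u = [[[1.0, 1.0], [1.0, 1.0]], [[4.0, 4.0], [4.0, 4.0]]]
w1 = [[0.5, 0.5]]
w2 = [[1.0], [-1.0]]
gates, scaled = se_block(u, w1, w2)
```

Each gate lies between 0 and 1, so the block can only attenuate a channel relative to the others; in this example the first channel is kept almost intact and the second is strongly suppressed.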

3.7 MobileNet

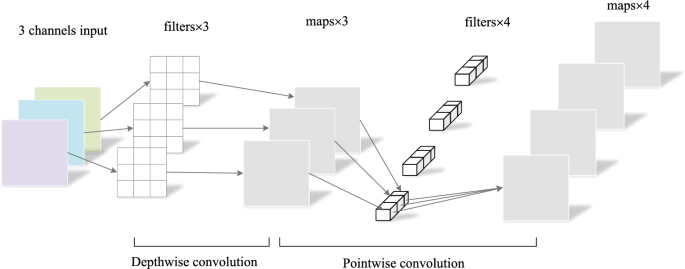

In traditional CNN, the memory requirements and computational demands are substantial, making it impractical for running on mobile and embedded devices. Howard and his colleagues proposed a lightweight network, MobileNetV1 (Howard et al. 2017 ), tailored for mobile and embedded applications. Compared to traditional CNN, MobileNetV1 significantly reduces the model’s parameters and computational workload while experiencing a minor decrease in accuracy. MobileNetV1 achieves 0.9 \(\%\) lower accuracy than VGG16, but with only 1/32 of the model’s parameters. MobileNetV1 employs depthwise separable convolution layers, as illustrated in Fig. 25 . This involves first applying depthwise convolution to each channel of the feature map, followed by pointwise \(1 \times 1\) convolution, aiming to reduce computational load and model parameters. Two contraction hyperparameters, the width multiplier and the resolution multiplier, are introduced simultaneously to decrease computation, reduce volume, and improve accuracy. However, a drawback of this model is its low cost-effectiveness, as many convolution kernel parameters become zero during the training process. Subsequently, Google introduced MobileNetV2 (Sandler et al. 2018 ), which utilizes an inverted residual structure and a linear bottleneck structure. The inverted residual structure first employs a \(1 \times 1\) convolution to increase dimensionality, deepening the channels to capture more feature information. It then applies a \(3 \times 3\) depthwise convolution operation and concludes with a \(1 \times 1\) convolution for dimensionality reduction, effectively reducing the number of parameters. One drawback of this model is the loss of diversity between layers, which cannot guarantee accuracy.

Depthwise separable convolution layer structure
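The parameter reduction from splitting a convolution into a depthwise step and a pointwise \(1 \times 1\) step can be checked by counting weights. The layer sizes below are assumed for illustration, and biases are ignored.

```python
def standard_conv_params(k, c_in, c_out):
    """Every output channel has its own k x k filter over all input channels."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer: 3 x 3 kernels, 128 input channels, 256 output channels.
std = standard_conv_params(3, 128, 256)
sep = depthwise_separable_params(3, 128, 256)
print(std, sep, round(sep / std, 3))
```

The ratio equals \(1/c_{out} + 1/k^2\), so with \(3 \times 3\) kernels a depthwise separable layer needs roughly one eighth to one ninth of the weights of a standard convolution.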

ImageNet (Deng et al. 2009 ), as one of the datasets for image classification tasks, has the characteristics of large-scale datasets and abundant image categories, and the trained model has good generalization ability, allowing it to obtain effective classification results on other image classification datasets such as CIFAR-10/100 Krizhevsky et al. ( 2009 ), Caltech-101 (Fei-Fei et al. 2004 ), and SUN (Xiao et al. 2010 ). Deep CNN models have improved in training thanks to the availability of a wide range of large-scale datasets, and models trained on these datasets have better generalization abilities. These generalization abilities can be used in practical applications to quickly learn the features of the datasets on their own and boost the effectiveness and efficiency of classification tasks. Performance comparisons of different architectures are shown in Table 1 .

As shown in Table 1 , from AlexNet to GoogLeNet, the accuracy of image classification increases progressively. This is attributed to the deeper architecture of the networks, which leads to more effective feature extraction.

ResNet has a deeper network architecture compared to VGG, but the introduction of residual learning makes the network more easily optimized, mitigating gradient vanishing issues. Additionally, parameter sharing and reuse, along with a reduced parameter count, contribute to achieving higher performance with lower complexity and error rates.

Deep neural networks incorporating attention mechanisms have achieved remarkable performance, as exemplified by the SE block proposed by Hu, which effectively models dependencies among channel features. Through these methods, it becomes evident that the core function of attention mechanisms is to emphasize useful components while disregarding those with relatively minor contributions to feature extraction. Consequently, integrating attention mechanisms into networks offers the advantage of enhancing model performance and improving the effective extraction of features.

Although lightweight networks may not perform as well as classical deep CNNs in image classification on the ImageNet dataset, they significantly reduce the number of parameters. This indicates that lightweight networks effectively utilize model parameters by employing methods like depthwise separable convolution. This advantage is particularly valuable in resource-constrained environments, such as mobile devices or embedded systems, where they can still deliver relatively good performance while reducing model size. This makes them more suitable for practical deployment and operation.

Although several CNN models have achieved outstanding performance in image classification, they have a number of drawbacks. First, advanced CNN models frequently have intricate structures and many parameters, requiring a lot of processing power and memory during training and deployment. Lightweight network topologies such as MobileNet and EfficientNet, model pruning, model compression, and other strategies that lessen model complexity and storage needs can be used to overcome this issue. Second, many CNN models rely heavily on an enormous amount of labeled data to perform at their best, and large-scale annotated data can be expensive and time-consuming to acquire. Techniques such as transfer learning, semi-supervised learning, and data augmentation can improve the training data and lessen the reliance on annotation. Third, typical CNN models may lose fine-grained information when used on small-sized images. Shallow network designs, pyramid-style network structures, or smaller convolutional kernels can help address this limitation. Recent years have also seen the emergence of new research directions and methodologies, such as using transformer models for image classification. Researchers have looked into replacing convolutional blocks with transformer structures or introducing the self-attention mechanism of transformers directly into CNNs. As evidenced by models such as DeiT, Pyramid Vision Transformer, and Swin Transformer, these efforts have yielded promising outcomes. A significant area for future research will be the combination of deep learning and reinforcement learning in image classification, which may further improve the effectiveness of image classification models.

4 Object detection

4.1 Subtask explanation

As a fundamental task in computer vision, object detection (Ma et al. 2023) is the key to solving more complex vision tasks such as image segmentation (Minaee et al. 2021), object tracking (Luo et al. 2021), and behavior recognition (Hu et al. 2023). The process of object detection and recognition typically consists of two steps: first, the likely position of each target object in a picture is localized, and second, the localized objects are sorted into categories. Compared with image classification, object detection focuses more on local regions of an image and specific sets of object classes. CNNs have been used in object detection since the 1990s. However, because of a lack of training data and hardware resources such as computational power and storage devices, research on object detection using CNNs received little attention and advanced slowly until 2012. The tremendous breakthrough of CNNs in the ImageNet challenge in 2012 rekindled researchers’ interest in deep CNN-based object detection, which led to a dramatic increase in object detection and recognition rates. At the same time, object detection has been widely applied in real-world scenarios, including autonomous driving (Zablocki et al. 2022), virtual reality (VR) (Xiong et al. 2021), and intelligent video surveillance (Huang et al. 2021).

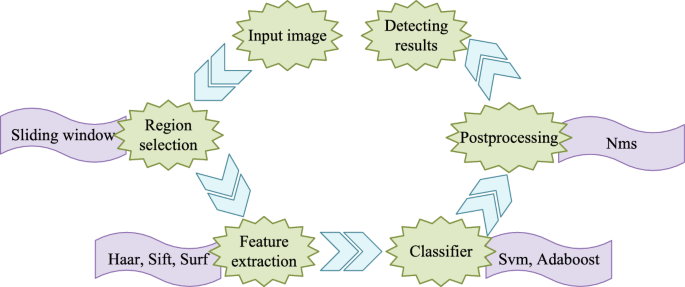

Before the rise of deep learning, object detection algorithms relied on the traditional sliding window approach with manually designed features. Commonly used feature descriptors such as Haar (Papageorgiou et al. 1998), SIFT (Lowe 2004), and SURF (Bay et al. 2006) were used to train a separate shallow classifier for each class of target objects. The traditional object detection process is shown in Fig. 26. However, because of variation in the objects and the imaging environment, manually designed features suffer from a lack of robustness, poor generalization, and low detection accuracy (Dicong et al. 2021). The bottlenecks of traditional object detection algorithms in practical applications are twofold. On the one hand, because the designer must extract the features of the sample using prior knowledge, only a few parameters can appear in the feature design, so as to lessen the difficulty of manual tuning. On the other hand, because they lack depth, shallow classifiers require exponentially more parameters and training data when facing difficult detection tasks. The research boom in deep networks has brought new opportunities for object detection by removing the need for manual parameter tuning. Compared with traditional object detection algorithms, a deep CNN can automatically learn feature representations from massive data sets and does not require a separately trained classifier, which greatly improves the efficiency of the feature learning process.

Traditional object detection process

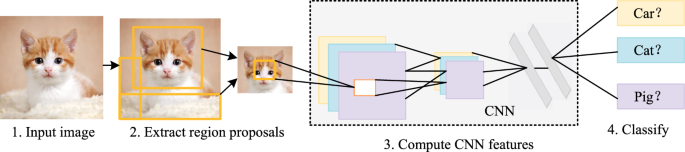

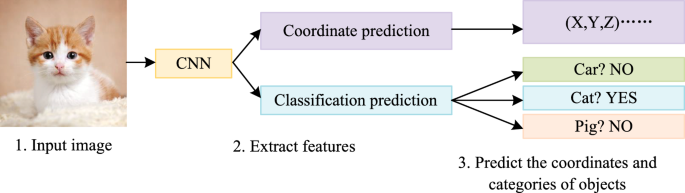

In this paper, we outline known strategies for object detection from two perspectives: the region-based object detection algorithm (two-stage detectors) and the regression-based object detection algorithm (one-stage detectors). Fig. 27 depicts the basic procedure of two-stage detectors, which scan the whole image using multiple fixed-size sliding windows to generate a series of region proposal boxes, select the region proposal of the image, and then perform regression localization and classification of the targets that may exist in the region proposal to achieve object detection. One-stage detectors, in contrast, do not generate region proposals and combine feature extraction, object classification, and position regression into a single CNN to complete the process, simplifying the object detection process into a form of the end-to-end regression problem, as illustrated in Fig. 28 .

Basic process of two-stage detectors

Basic process of one-stage detectors

4.2 Representative two-stage detectors

Using CNN and region proposals, Girshick et al. (2014) introduced a deep learning object detection framework in 2014 called R-CNN. Initially, the model uses selective search (Ji et al. 2021), a non-deep learning algorithm, to propose candidate regions to be classified, and then feeds each candidate region into a CNN to extract features. Finally, these features are fed into a linear support vector machine for classification. To improve localization accuracy, a linear regression model is trained in R-CNN and used to correct the coordinates of the candidate region; this process is known as bounding box regression. On the PASCAL VOC object detection dataset, the model achieved a mean average precision (mAP) approximately 20 \(\%\) higher than traditional algorithms, paving the way for the creation of two-stage detectors.
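Bounding box regression can be sketched as follows. The parameterization, with offsets of the box center scaled by the proposal size and log-ratios of width and height, is a common choice and is assumed here; the box coordinates are made-up examples.

```python
import math


def bbox_targets(proposal, ground_truth):
    """Regression targets that map a proposal onto its ground-truth box.

    Boxes are (center_x, center_y, width, height).
    """
    px, py, pw, ph = proposal
    gx, gy, gw, gh = ground_truth
    return ((gx - px) / pw, (gy - py) / ph, math.log(gw / pw), math.log(gh / ph))


def apply_deltas(proposal, deltas):
    """Correct a proposal with predicted offsets (inverse of bbox_targets)."""
    px, py, pw, ph = proposal
    tx, ty, tw, th = deltas
    return (px + pw * tx, py + ph * ty, pw * math.exp(tw), ph * math.exp(th))


proposal = (50.0, 50.0, 20.0, 40.0)
truth = (55.0, 48.0, 30.0, 40.0)
targets = bbox_targets(proposal, truth)
corrected = apply_deltas(proposal, targets)
```

During training the regressor learns to predict `targets` from the CNN features of the proposal; at test time `apply_deltas` turns its predictions into the corrected box.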

In R-CNN, approximately 2000 candidate regions are generated for each image, and features must be extracted separately for every candidate region, making feature extraction the bottleneck in total test time. A Microsoft Research team applied SPP-Net (Ma et al. 2021) to object detection and alleviated this shortcoming of R-CNN. For the candidate regions generated by the selective search algorithm, SPP-Net projects the coordinates of these regions to the corresponding positions on the feature maps output by the last convolution layer and then inputs the features corresponding to each candidate region into the spatial pyramid pooling layer to obtain a fixed-length feature representation. The subsequent stages are similar to R-CNN: the fully connected layer receives these feature representations as input, a linear support vector machine uses the fully connected layer’s output for classification, and bounding box regression is used to correct the candidate region coordinates. On PASCAL VOC, the network achieved similar accuracy to R-CNN, but the total test time was significantly reduced because the time-consuming convolution operation was performed only once for each input image.
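How spatial pyramid pooling produces a fixed-length representation from regions of different sizes can be sketched for a single-channel feature map. The pyramid levels (1, 2, 4), the use of max pooling, and the bin-boundary rule are assumptions for illustration; a real layer applies the same pooling to every channel.

```python
def ceil_div(a, b):
    return -(-a // b)


def spatial_pyramid_pool(fmap, levels=(1, 2, 4)):
    """Max-pool one 2-D feature map over n x n grids and concatenate the bins."""
    h, w = len(fmap), len(fmap[0])
    pooled = []
    for n in levels:
        for i in range(n):
            rows = range(i * h // n, ceil_div((i + 1) * h, n))
            for j in range(n):
                cols = range(j * w // n, ceil_div((j + 1) * w, n))
                pooled.append(max(fmap[r][c] for r in rows for c in cols))
    return pooled


small = [[r * 7 + c for c in range(7)] for r in range(5)]     # 5 x 7 region
large = [[r * 9 + c for c in range(9)] for r in range(13)]    # 13 x 9 region
small_vec = spatial_pyramid_pool(small)
large_vec = spatial_pyramid_pool(large)
print(len(small_vec), len(large_vec))
```

Both regions yield 1 + 4 + 16 = 21 values, so the fully connected layer always receives an input of the same length regardless of the region size.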

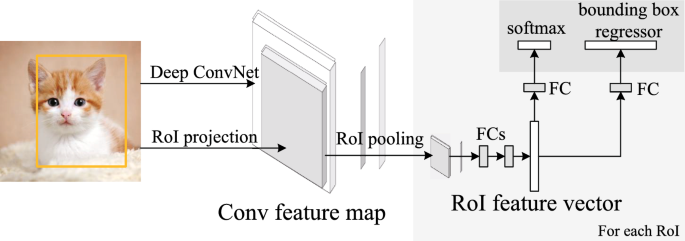

Like R-CNN, SPP-Net has certain limitations: the multi-stage training process of region proposal generation, feature extraction, and object classification is cumbersome, and the extracted features require a large amount of storage space. Additionally, SPP-Net fine-tunes only the fully connected layers and leaves the parameters of the other layers of the network unchanged. To solve these problems, Fast R-CNN (Girshick 2015 ) was introduced in 2015; its structure is shown in Fig. 29 . Compared with the CNN in R-CNN, Fast R-CNN replaces the last pooling layer with a Region of Interest (RoI) pooling layer. The role of this layer is similar to that of the spatial pyramid pooling layer used in SPP-Net, namely to output a fixed-dimensional feature vector for an input of any size, except that the RoI pooling layer performs only a single level of spatial block partitioning. This allows Fast R-CNN, like SPP-Net, to take the whole image together with the coordinates of the candidate regions generated by the selective search algorithm as input to a CNN and then perform RoI pooling on the feature maps output by the last convolution layer to obtain the features of each candidate region, eliminating the need for a separate convolution computation per candidate region. In addition, Fast R-CNN replaces the last softmax classification layer of the CNN with two parallel fully connected layers: one is still a softmax classification layer, and the other is a bounding box regressor that corrects the coordinates of the candidate regions. During training, Fast R-CNN uses a multi-task loss function to train these two layers for classification and coordinate correction simultaneously. This training approach achieves better detection results on the PASCAL VOC dataset than the staged training previously used for R-CNN, eliminates the need to train separate SVM classifiers, and integrates the process from image feature extraction to detection.
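The idea of training classification and box correction together can be made concrete with a small sketch of a per-region multi-task loss. It assumes a log loss for the class and a smooth L1 loss for the box offsets, with index 0 standing for the background class and `lam` balancing the two terms; these specifics are assumptions for illustration and are not stated in the text above.

```python
import math

def smooth_l1(x):
    """Quadratic near zero, linear for large errors."""
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5

def multitask_loss(class_probs, true_class, pred_deltas, target_deltas, lam=1.0):
    """Classification loss plus box-regression loss for one candidate region.

    class_probs: softmax output over classes, index 0 = background (assumed).
    pred_deltas / target_deltas: four box offsets for the true class.
    """
    cls_loss = -math.log(class_probs[true_class])
    loc_loss = 0.0
    if true_class > 0:  # background regions have no box to correct
        loc_loss = sum(smooth_l1(p - t)
                       for p, t in zip(pred_deltas, target_deltas))
    return cls_loss + lam * loc_loss

# Illustrative values only.
background = multitask_loss([0.5, 0.5], 0, (1.0, 1.0, 1.0, 1.0), (0.0, 0.0, 0.0, 0.0))
foreground = multitask_loss([0.5, 0.5], 1, (0.2, 0.0, 2.0, 0.0), (0.0, 0.0, 0.0, 0.0))
```

Because both terms depend on the same shared features, a single backward pass updates the network for classification and localization at once.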

Fig. 29 Fast R-CNN architecture

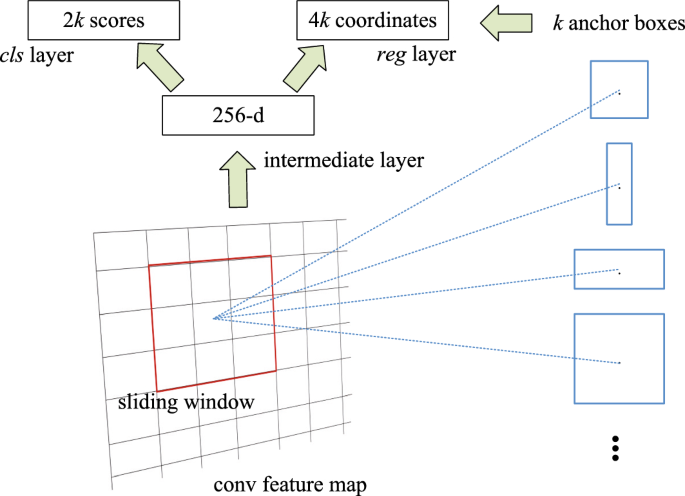

These models improved the training process and the structure of the CNN. However, they all rely on traditional algorithms to propose candidate regions, and these algorithms are implemented on CPUs, which makes candidate region computation the bottleneck of the overall running time. Therefore, the Faster R-CNN model (Ren et al. 2015 ) designed by Ren et al. introduces a region proposal network (RPN) to improve this step; its structure is shown in Fig. 30 . Faster R-CNN improves on Fast R-CNN by sliding a small window over the feature map output by the last convolution layer and feeding each window into the RPN. At each sliding-window position, the model places several anchors with different scales and aspect ratios centered on the window, and the RPN computes a candidate region from each anchor. Because Faster R-CNN proposes candidate regions from the convolutional features already extracted by the Fast R-CNN detector, and because the RPN also runs on GPUs, the time overhead of proposing candidate regions is greatly reduced: the time required for detection is about one tenth of the original, and the accuracy also improves. This suggests that the RPN not only operates more efficiently but also produces candidate regions of higher quality.
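The anchors placed at every sliding-window position can be generated as in the sketch below. The three scales, three aspect ratios, and feature stride of 16 are illustrative defaults assumed here, and the helper names are hypothetical.

```python
import math

def make_anchors(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return (x1, y1, x2, y2) anchors centred on (cx, cy).

    Each anchor has area scale**2 and height/width ratio `ratio`.
    """
    anchors = []
    for s in scales:
        for r in ratios:
            w = s / math.sqrt(r)
            h = s * math.sqrt(r)
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors

def anchors_for_feature_map(feat_h, feat_w, stride=16):
    """Place one set of anchors at the centre of every feature-map cell."""
    all_anchors = []
    for i in range(feat_h):
        for j in range(feat_w):
            cx, cy = (j + 0.5) * stride, (i + 0.5) * stride
            all_anchors.extend(make_anchors(cx, cy))
    return all_anchors

anchors = anchors_for_feature_map(2, 3)  # 2 x 3 positions, 9 anchors each
```

The proposal network then predicts, for every anchor, an objectness score and four offsets that refine the anchor into a candidate region.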

Fig. 30 Region proposal network (RPN)

Although various applications can accurately recognize medium-sized and large objects in images, small object detection remains problematic. Small objects are difficult to recognize because of indistinct features, complicated backgrounds, low resolution, insufficient context information, and so on. As a result, considerable research is being done in this field, and numerous deep learning approaches have recently been developed with promising outcomes. In 2017, Lin et al. ( 2017 ) exploited the pyramidal feature hierarchy of CNNs, using a top-down pathway with lateral connections to combine high-level features (low resolution, strong semantics) with low-level features (high resolution, weak semantics) and thereby construct Feature Pyramid Networks (FPNs) that carry high-level semantic information at every scale. FPN greatly improved detection accuracy and achieved state-of-the-art object detection results, and it has become one of the important techniques for improving the accuracy of mainstream networks. Moreover, compared with other object detection models, FPN achieves good results in small object detection.
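The way coarse, semantically strong features are combined with finer ones can be sketched in a few lines. This is a deliberately simplified single-channel version that assumes nearest-neighbour upsampling and element-wise addition, and it omits the convolutions that a real feature pyramid applies on the lateral and output paths; all function names are hypothetical.

```python
def upsample2x(fmap):
    """Nearest-neighbour 2x upsampling of a 2-D map (list of rows)."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def top_down_merge(coarse, fine):
    """Add the upsampled coarse map to the finer map of twice the size."""
    up = upsample2x(coarse)
    return [[u + f for u, f in zip(up_row, fine_row)]
            for up_row, fine_row in zip(up, fine)]

def build_pyramid(levels):
    """levels: backbone maps ordered fine to coarse, each half the previous size."""
    merged = [levels[-1]]
    for fine in reversed(levels[:-1]):
        merged.insert(0, top_down_merge(merged[0], fine))
    return merged

# Toy maps: 4x4 (fine), 2x2, 1x1 (coarse).
c3 = [[1] * 4 for _ in range(4)]
c4 = [[10] * 2 for _ in range(2)]
c5 = [[100]]
p3, p4, p5 = build_pyramid([c3, c4, c5])
```

In this toy example every cell of the finest output `p3` carries information from all three levels, which is why predictions made on it benefit small objects.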

He et al. presented Mask R-CNN (He et al. 2017 ) in 2017, which integrates the concepts of Faster R-CNN and FCN. The feature extraction section uses a feature pyramid network (FPN) architecture, the RoI pooling layer is replaced with an RoIAlign layer, and a mask prediction branch is added. The FPN architecture improves the model’s multi-scale feature extraction capacity and the recognition of small objects. However, the detection speed is the same as that of Faster R-CNN, which is insufficient for real-time monitoring applications.

Cao et al. proposed a novel two-stage detector, D2Det (Cao et al. 2020 ), in 2020 that addresses precise localization and accurate classification at the same time. The model uses dense local regression to predict multiple dense box offsets for an object. Dense local regression is not confined to a fixed set of quantized keypoints and can regress position-sensitive real-valued dense offsets, allowing for more accurate localization. To improve classification accuracy, discriminative RoI pooling (DRP) is used to extract accurate object feature regions from the first and second stages, respectively. Table 2 compares the performance of two-stage detectors.

4.3 Representative one-stage detectors

One-stage detectors divide the input image into a number of cells, and each cell predicts the objects whose centers fall within it, as opposed to using pre-defined anchors for the object region. The class and location of an object are determined in a single stage, which gives a faster detection speed; however, the detection accuracy is lower than that of two-stage detectors. YOLO (you only look once) (Redmon et al. 2016 ), the first one-stage object detection algorithm, is a typical example. The fundamental idea behind YOLO is to divide the image into multiple cells, predict for each cell the bounding box coordinates, the classes of the objects inside the boxes, and the corresponding confidence levels, and then remove overlapping boxes using non-maximum suppression (NMS) to obtain the final predicted boxes. For instance, if the center of an object falls within one of the cells, that cell is responsible for determining the class and location of the object.
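Two steps of this pipeline lend themselves to a short sketch: finding the cell responsible for an object, and removing overlapping boxes (a procedure commonly called non-maximum suppression). The 7 x 7 grid, the IoU threshold of 0.5, and the function names are assumptions chosen for illustration.

```python
def responsible_cell(cx, cy, img_w, img_h, grid=7):
    """Return (row, col) of the grid cell that contains the object centre."""
    row = min(int(cy / img_h * grid), grid - 1)
    col = min(int(cx / img_w * grid), grid - 1)
    return row, col

def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

def nms(boxes, scores, iou_threshold=0.5):
    """Keep the highest-scoring boxes and drop boxes that overlap a kept one."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep

boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)                      # -> [0, 2]
cell = responsible_cell(224, 100, 448, 448)    # -> (1, 3)
```

The second box overlaps the first with an IoU of roughly 0.82, so it is suppressed, while the distant third box survives.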

Compared with two-stage detectors, the real-time object detector YOLO is very fast. However, it struggles to predict bounding box scales and aspect ratios accurately, especially for small objects, which leads to relatively low localization and classification accuracy. It also performs poorly on objects that lie only partially within a cell. In 2017, Redmon and Farhadi proposed YOLOv2 (Redmon and Farhadi 2017 ). YOLOv2 adds a batch normalization layer to all convolution layers to accelerate model learning, employs DarkNet-19 (Al-Haija et al. 2021 ) as the backbone, and uses a high-resolution classifier (Anuj and Gopalakrishna 2020 ), which pre-trains the model on high-resolution ImageNet images and then fine-tunes it on the target dataset to improve training stability. Together, these strategies significantly increased detection accuracy while keeping the detector fast.

YOLOv3 (Redmon and Farhadi 2018 ) uses DarkNet-53 as the backbone for extracting image features and employs logistic classifiers in place of softmax for classification. Prediction is performed with an FPN-style network, and the prior (anchor) boxes are chosen using k-means clustering. Nine prior boxes are selected, and three feature maps with different receptive fields are used to detect objects of different sizes.
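Choosing prior box shapes by k-means clustering can be sketched as below. The sketch assumes the similarity measure is the IoU between box sizes (so that large and small boxes are treated evenly), uses a deterministic initialization for reproducibility, and clusters into three groups rather than nine to keep the example short; the function names and the sample sizes are hypothetical.

```python
def wh_iou(a, b):
    """IoU of two boxes given as (w, h) and aligned at a shared corner."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)

def kmeans_priors(sizes, k, iters=20):
    """Cluster ground-truth (w, h) pairs into k prior box shapes."""
    # Deterministic start: k sizes spread evenly over the area-sorted list.
    ordered = sorted(sizes, key=lambda s: s[0] * s[1])
    centers = [ordered[(2 * i + 1) * len(ordered) // (2 * k)] for i in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for s in sizes:
            best = max(range(k), key=lambda c: wh_iou(s, centers[c]))
            groups[best].append(s)
        # New centre = mean width and height of the group (kept if empty).
        centers = [
            (sum(w for w, _ in g) / len(g), sum(h for _, h in g) / len(g))
            if g else centers[i]
            for i, g in enumerate(groups)
        ]
    return sorted(centers, key=lambda c: c[0] * c[1])

# Made-up box sizes in pixels, for illustration only.
sizes = [(10, 12), (12, 10), (11, 11),
         (50, 60), (60, 50), (55, 55),
         (200, 180), (180, 200), (190, 190)]
priors = kmeans_priors(sizes, k=3)
```

With nine clusters, the resulting shapes would be split by size across the three prediction feature maps, the smallest going to the highest-resolution map.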

YOLOv4 (Bochkovskiy et al. 2020 ) introduced mosaic data augmentation on the input images. For feature extraction, YOLOv4 integrated numerous recent techniques, including CSPDarkNet53 and the Mish activation function. Instead of FPN, SPP and PAN were employed to enlarge the receptive field and perform feature fusion. Overall, YOLOv4 is a significant improvement over YOLOv3 and has considerable technical value because it incorporates and validates many of the most recent deep learning techniques.

The network topology of YOLOv5 can be divided into four sections: input, backbone, neck, and prediction, which makes it quite similar to YOLOv4. On the input side, YOLOv5 applies adaptive image scaling, adaptive anchor box computation, and mosaic data augmentation. The backbone combines the Focus structure, which is new in YOLOv5 and whose key step is a slicing operation, with the CSP structure. YOLOv5 presently employs the same neck structure as YOLOv4, although only the FPN structure was in use when it was launched; the PAN structure was introduced later, and other network components were also modified. Although YOLOv4 already achieves a high level of detection precision, the multiple network configurations of YOLOv5 are more adaptable in real-world experiments.

Accuracy and speed are two critical performance characteristics in object detection, and balancing them is essential in industrial applications. YOLOv6 (Li et al. 2022 ), designed for industrial applications, was released in 2022. It supports the entire chain of industrial application requirements, such as model training, inference, and multi-platform deployment, and it makes several improvements and optimizations to the network structure, training strategy, and other aspects of the algorithm. In terms of backbone, neck, head, and training approach, YOLOv6 improves on earlier models. Inspired by the notion of hardware-aware neural network design (Ding et al. 2021 ), Li et al. created a re-parameterizable and more efficient backbone network based on the RepVGG architecture. The anchor-free paradigm is adopted, and to further increase detection accuracy, the SimOTA (Ge et al. 2021 ) label assignment technique and the SIoU (Gevorgyan 2022 ) bounding box regression loss are included. In terms of accuracy and speed, YOLOv6 surpasses other methods of comparable size on the COCO dataset.

Tan et al. proposed EfficientDet (Tan et al. 2020 ), which is based on EfficientNet (Tan and Le 2019 ), in order to establish a model that balances detection speed and accuracy. The model uses EfficientNet as the backbone and a bi-directional feature pyramid network (BiFPN) as the feature network, which enables fast multi-scale feature fusion, and it introduces a compound scaling strategy. In addition, learnable weights are applied to the input features during fusion. Compound scaling uniformly scales the depth, width, and resolution of the backbone network, the feature network, and the box/class prediction networks to produce the best results.
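The weighting idea can be illustrated with a minimal sketch of a normalized weighted fusion of several same-sized feature vectors. It assumes that the weights are kept non-negative with a ReLU and normalized by their sum plus a small constant `eps`; the function name and the numeric values are hypothetical, and in a trained network the weights would be learned rather than fixed.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse same-length feature vectors as a normalized weighted sum."""
    w = [max(0.0, x) for x in weights]  # ReLU keeps every weight non-negative
    total = sum(w) + eps                # eps avoids division by zero
    length = len(features[0])
    return [sum(wi * f[k] for wi, f in zip(w, features)) / total
            for k in range(length)]

# Illustrative values: the second input is trusted three times as much.
fused = fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 3.0])
```

Here `fused` is close to `[2.5, 3.5]`, i.e. each output value is a weighted average that leans toward the input with the larger weight.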

Dong et al. introduced CentripetalNet (Dong et al. 2020 ) to address the issue that keypoint-based detectors are prone to corner matching mistakes. The model predicts the corner locations and centripetal shifts of an object and matches corners whose shifted positions agree, which matches corner points more precisely than the conventional embedding-based method. In addition, a cross-star deformable convolution is proposed to better learn the cross-star features in the partial feature map produced by the corner pooling layer. On the COCO dataset, the model experimentally outperforms all other anchor-free object detectors. Datasets are an important basis for the training and evaluation of supervised algorithms. The two datasets most often used for object detection tasks are PASCAL VOC (Shetty 2016 ) and Microsoft COCO (Lin et al. 2014 ). Table 3 compares the performance of one-stage detectors.

Accuracy is typically improved by optimizing the network structure in ways that make the model more complex, but this reduces training and detection speed, making it difficult to meet real-time detection requirements. Balancing accuracy and speed will therefore be a direction of future study. One approach is to combine the high accuracy of region-based algorithms with the high speed of regression-based algorithms, so that both the accuracy and the speed of object detection improve enough to satisfy practical demands. Moreover, a detection algorithm may achieve state-of-the-art (SOTA) performance on one task but perform worse on others because of factors such as complex backgrounds with substantial noise interference, low contrast between object and background colors, which makes it hard for the network to extract discriminative features, and small object sizes, which are difficult to detect. A task-specific analysis of these difficulties therefore helps in designing techniques that achieve SOTA performance on a given task.

In the past several years, CNN-based object detectors have developed rapidly and achieved notable results, but there is still room for further development. The following outlines the frontier issues and research directions in this field to promote the research and improvement of subsequent object detectors.