How Observational Learning Affects Behavior

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Observational learning describes the process of learning by watching others, retaining the information, and then later replicating the behaviors that were observed.

There are a number of learning theories, such as classical conditioning and operant conditioning, that emphasize how direct experience, reinforcement, or punishment can lead to learning. However, a great deal of learning happens indirectly.

For example, think about how a child may watch adults waving at one another and then imitate these actions later on. A tremendous amount of learning happens through this process. In psychology, this is referred to as observational learning.

Observational learning is sometimes called shaping, modeling, or vicarious reinforcement. While it can take place at any point in life, it tends to be most common during childhood.

It also plays an important role in the socialization process. Children learn how to behave and respond to others by observing how their parent(s) and/or caregivers interact with other people.


History of Observational Learning

Psychologist Albert Bandura is the researcher most often associated with learning through observation. He and others have demonstrated that we are naturally inclined to engage in observational learning.

Studies suggest that imitation with social understanding tends to begin around 2 years old, but will vary depending on the specific child. In the past, research has claimed that newborns are capable of imitation, but this likely isn't true, as newborns often react to stimuli in a way that may seem like imitation, but isn't.

Basic Principles of Social Learning Theory

If you've ever made faces at a toddler and watched them try to mimic your movements, then you may have witnessed how observational learning can be such an influential force. Bandura's social learning theory stresses the power of observational learning.

Bobo Doll Experiment

Bandura's Bobo doll experiment is one of the most famous examples of observational learning. In this experiment, Bandura demonstrated that young children may imitate the aggressive actions of an adult model. Children observed a film where an adult repeatedly hit a large, inflatable balloon doll and then had the opportunity to play with the same doll later on.

Children were more likely to imitate the adult's violent actions when the adult either received no consequences or when the adult was rewarded. Children who saw the adult being punished for this aggressive behavior were less likely to imitate them.

Observational Learning Examples

The following are instances that demonstrate observational learning has occurred.

- A child watches their parent folding the laundry. They later pick up some clothing and imitate folding the clothes.

- A young couple goes on a date to an Asian restaurant. They watch other diners in the restaurant eating with chopsticks and copy their actions to learn how to use these utensils.

- A child watches a classmate get in trouble for hitting another child. They learn from observing this interaction that they should not hit others.

- A group of children play hide-and-seek. One child joins the group and is not sure what to do. After observing the other children play, they quickly learn the basic rules and join in.

Stages of Observational Learning

There are four stages of observational learning that need to occur for meaningful learning to take place. Keep in mind, this is different than simply copying someone else's behavior. Instead, observational learning may incorporate a social and/or motivational component that influences whether the observer will choose to engage in or avoid a certain behavior.

Attention

For an observer to learn, they must be in the right mindset to do so. This means having the energy to learn, remaining focused on what the model is engaging in, and being able to observe the model for enough time to grasp what they are doing.

How the model is perceived can impact the observer's level of attention. Models who are seen being rewarded for their behavior, models who are attractive, and models who are viewed as similar to the observer tend to command more focus from the observer.

Retention

If the observer was able to focus on the model's behavior, the next step is being able to remember what was viewed. If the observer is not able to recall the model's behavior, they may need to go back to the first stage again.

Reproduction

If the observer is able to focus on and retain the information, the next stage in observational learning is trying to replicate it. It's important to note that every individual will have their own unique capacity when it comes to imitating certain behaviors, meaning that even with perfect focus and recall, some behaviors may not be easily copied.

Motivation

In order for the observer to engage in this new behavior, they will need some sort of motivation. Even if the observer is able to imitate the model, if they lack the drive to do so, they will likely not follow through with this new learned behavior.

Motivation may increase if the observer watched the model receive a reward for engaging in a certain behavior and the observer believes they will also receive some reward if they imitate said behavior. Motivation may decrease if the observer had knowledge of or witnessed the model being punished for a certain behavior.

Influences on Observational Learning

According to Bandura's research, there are a number of factors that increase the likelihood that a behavior will be imitated. We are more likely to imitate:

- People we perceive as warm and nurturing

- People who receive rewards for their behavior

- People who are in an authoritative position in our lives

- People who are similar to us in age, sex, and interests

- People we admire or who are of a higher social status

- When we have been rewarded for imitating the behavior in the past

- When we lack confidence in our own knowledge or abilities

- When the situation is confusing, ambiguous, or unfamiliar

Pros and Cons of Observational Learning

Observational learning has the potential to teach and reinforce or decrease certain behaviors based on a variety of factors. Particularly prevalent in childhood, observational learning can be a key part of how we learn new skills and learn to avoid consequences.

However, there has also been concern about how this type of learning can lead to negative outcomes and behaviors. Some studies, inspired by Bandura's research, focused on the effects observational learning may have on children and teenagers.

For example, previous research drew a direct connection between playing certain violent video games and an increase in aggression in the short term. However, later research that focused on the short- and long-term impact video games may have on players has shown no direct connections between video game playing and violent behavior.

Similarly, research looking at sexual media exposure and teenagers' sexual behavior found that, in general, there wasn't a connection between watching explicit content and having sex within the following year.

Another study indicated that if teenagers age 14 and 15 of the same sex consumed sexual media together and/or if parents restricted the amount of sexual content watched, the likelihood of having sex was lower. The likelihood of sexual intercourse increased when opposite-sex peers consumed sexual content together.

Research indicates that when it comes to observational learning, individuals don't just imitate what they see and that context matters. This may include who the model is, who the observer is with, and parental involvement.

Uses for Observational Learning

Observational learning can be used in the real world in a number of different ways. Some examples include:

- Learning new behaviors: Observational learning is often used as a real-world tool for teaching people new skills. This can include children watching their parents perform a task or students observing a teacher engage in a demonstration.

- Strengthening skills: Observational learning is also a key way to reinforce and strengthen behaviors. For example, if a student sees another student getting a reward for raising their hand in class, they will be more likely to also raise their hand the next time they want to ask a question.

- Minimizing negative behaviors: Observational learning also plays an important role in reducing undesirable or negative behaviors. For example, if you see a coworker get reprimanded for failing to finish a task on time, you may be more likely to finish your own work more quickly.

A Word From Verywell

Observational learning can be a powerful learning tool. When we think about the concept of learning, we often talk about direct instruction or methods that rely on reinforcement and punishment. But a great deal of learning takes place much more subtly and relies on watching the people around us and modeling their actions. This learning method can be applied in a wide range of settings including job training, education, counseling, and psychotherapy.



Neural Mechanisms of Observational Learning: A Neural Working Model

Sònia Pineda Hernández


Edited by: Carmen Moret-Tatay, Catholic University of Valencia San Vicente Mártir, Spain

Reviewed by: Andrew N. Meltzoff, University of Washington, United States; José Salvador Blasco Magraner, University of Valencia, Spain

*Correspondence: Weixi Kang

This article was submitted to Cognitive Neuroscience, a section of the journal Frontiers in Human Neuroscience

Received 2020 Sep 23; Accepted 2020 Dec 2; Collection date 2020.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

Humans and some animal species are able to learn stimulus-response (S-R) associations by observing others' behavior. Learning this way saves energy and time and avoids the danger of trying the wrong actions. Observational learning (OL) depends on the capability of mapping the actions of others onto our own behaviors, processing outcomes, and combining this knowledge to serve our goals. Observational learning plays a central role in the learning of social skills, cultural knowledge, and tool use. Thus, it is one of the fundamental processes by which infants learn about and from adults (Byrne and Russon, 1998). In this paper, we review current methodological approaches employed in observational learning research. We highlight the important role of the prefrontal cortex and cognitive flexibility in supporting this learning process, develop a new neural working model of observational learning, illustrate how imitation relates to observational learning, and provide directions for future research.

Keywords: cognitive flexibility, visuomotor learning, Imitation, vicarious learning, mirror system, observational learning, social learning, prefrontal cortex

Introduction

Observational learning refers to learning by watching others' actions and their associated outcomes in certain circumstances. Unlike imitation, observational learning does not simply mean replicating an action that others have performed. Rather, it requires the learner to transform the observed scenarios into actions as close as possible to those of the actor (Torriero et al., 2007). One of the most illustrative examples is how Adelie penguins observe and learn from others' actions when congregating at the water's edge to enter the sea and feed on krill in Antarctica. The leopard seal, the main predator of the penguins, often hides beneath the waves, making it risky to be the first penguin to jump into the water. As the waiting game continues, one of the hungriest animals will jump into the water while the others watch. They will only follow if no seal appears (Burke et al., 2010). This ability to follow, interpret, and learn from observed actions and outcomes is crucial for many species when the stakes are high. For example, predators can avoid eating poisonous prey without trying it by watching their peers consuming it (Burke et al., 2010). Many animal species, as well as human infants, are born helpless and rely on observational learning (Meltzoff and Marshall, 2018). Research has found that newborns as young as 42 min old are able to match gestures (including tongue protrusion and mouth opening) that have been shown to them (Meltzoff and Moore, 1997). Moreover, newborns can also map observed behaviors to their own, which suggests shared representation for the acts of self and others (Meltzoff and Moore, 1997; Meltzoff, 2007; Meltzoff and Marshall, 2018). Young infants can easily imitate, but only older infants demonstrate observational learning. In one study, a group of 14-month-old infants saw a novel act (e.g., the adult actor used his head to turn on a light panel). When encountering the same panel after a 1-week delay, 67% of infants used their head to turn on the light panel. This is observational learning because the infant reacts to the stimulus by retrieving what they encoded in memory, rather than simply replicating an action (Meltzoff, 1988). Moreover, several studies from other groups have provided converging evidence, finding that infants not only copy the goals and outcomes of the demonstration but also imitate the model used to attain that goal (e.g., Tennie et al., 2006; Williamson et al., 2008).

By definition, observational learning involves two main steps: (1) inferring others' intentions from observation, and (2) processing others' action outcomes (i.e., successes and errors) and combining these sources of information to learn the stimulus-response-outcome (S-R-O) associations that can later be used to obtain desirable outcomes. Unlike instruction-based learning (IBL; Hampshire et al., 2016), observational learning does not require instruction, which is advantageous for species that do not have language and for aphasia patients who have difficulty comprehending linguistic instructions. Compared to the widely investigated reinforcement learning (RL), observational learning enables one to acquire knowledge without taking the risks or incurring the costs of discovery (Monfardini et al., 2008). This paper is structured in five main sections. First, we survey the current approaches in observational learning research. Next, we discuss the neural mechanisms underlying observational learning with a focus on the frontal-temporal system while comparing it to other forms of learning (e.g., IBL and RL). After that, we develop a neural working model of observational learning. We then connect observational learning with imitation and suggest that they should be regarded as two distinct cognitive processes. Finally, we discuss the role of the ventral striatum in social learning and outline some possible future research directions.
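To make the second step concrete, the following is a minimal Python sketch of learning S-R-O associations purely from watching another agent. It is an illustration only, not a model from the reviewed studies: the class name `ObservationalLearner`, its methods, and the success-rate rule for choosing a response are all assumptions introduced here.

```python
from collections import defaultdict


class ObservationalLearner:
    """Hypothetical learner that tallies observed S-R-O triples."""

    def __init__(self):
        # counts[stimulus][response] = [observed successes, observed errors]
        self.counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))

    def observe(self, stimulus, response, success):
        """Record one observed trial: the actor's response and its outcome."""
        tally = self.counts[stimulus][response]
        tally[0 if success else 1] += 1

    def choose(self, stimulus):
        """Return the response with the best observed success rate, or None."""
        options = self.counts.get(stimulus)
        if not options:
            return None  # nothing was ever observed for this stimulus

        def success_rate(item):
            wins, losses = item[1]
            return wins / (wins + losses)

        return max(options.items(), key=success_rate)[0]


learner = ObservationalLearner()
learner.observe("square", "left", success=True)    # actor was correct
learner.observe("square", "right", success=False)  # actor made an error
best_response = learner.choose("square")
```

The learner never acts during acquisition, so it incurs none of the costs of its own errors, which is the advantage over trial-and-error learning described above.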

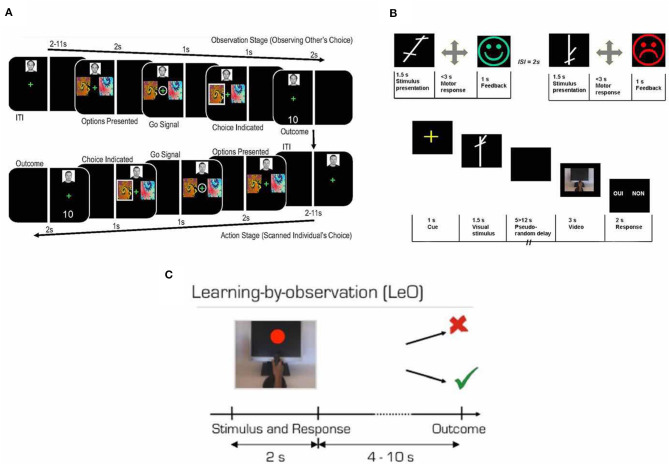

Schemes in Observational Learning Research

There are three main kinds of task designs in current observational learning research (Figure 1). The first one was employed by Burke et al. (2010), who used the observational action prediction error (defined as the difference between the actual choice and the predicted choice of the other agent) and the observational outcome prediction error (defined as the difference between the actual outcome and the predicted outcome of the other agent's action), both based on the concept of prediction error that is widely used in the reinforcement learning literature. More specifically, in a given trial of their experiment, participants had to choose between one of two abstract fractal stimuli to gain a stochastic reward and to avoid stochastic punishment while being scanned by fMRI. One stimulus delivered a good outcome (reward or absence of punishment) 80% of the time and a bad outcome (punishment or absence of reward) 20% of the time (Figure 1A). They also included a trial-and-error baseline individual learning condition and a learning from observing actions only condition, which could be used to characterize the observational action prediction error. The second task design was introduced by Monfardini et al. (2008), in which participants watched a short video demonstrating an actor making motor responses according to the stimulus presentation with post-response feedback (Figure 1B). Importantly, in the fMRI scanning session, instead of making their own responses when seeing the stimuli, participants watched the actor in the short video make a response to the stimulus that participants had seen before the video and made a binary choice regarding whether the actor's response was correct. This kind of design enables the detection of brain activity when participants retrieve rules (when novel stimuli are being displayed). However, the part where participants had to watch an actor performing the task and make a judgement about it is very unlikely to occur in a realistic situation because we seldom learn from judging another, and judging others' responses to stimuli might involve additional cognitive processes. In a later study, Monfardini et al. (2013) changed the aforementioned design and introduced the learning by observation (LeO) task. In the LeO task, participants were asked to learn S-R associations between stimuli presented on the screen and joystick movements by watching a video showing an expert demonstrating the correct visuomotor association. They were then asked to make responses accordingly in the fMRI scanner after each stimulus was presented (Figure 1C).
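The two prediction-error quantities described for the first task design can be sketched in a few lines of Python. This is a hedged illustration rather than the model fitted by Burke et al. (2010): coding the observed choice as 1, coding outcomes as 1 (good) and 0 (bad), and the delta-rule update with a `learning_rate` of 0.1 are all assumptions made here, and the function names are hypothetical.

```python
import random


def outcome_prediction_error(actual_outcome, predicted_outcome):
    """Actual outcome minus predicted outcome of the other agent's action."""
    return actual_outcome - predicted_outcome


def action_prediction_error(predicted_probability_of_observed_choice):
    """Observed choice (coded as 1) minus the probability assigned to it."""
    return 1.0 - predicted_probability_of_observed_choice


def simulate_observed_outcomes(n_trials, p_good=0.8, learning_rate=0.1, seed=0):
    """Update an outcome prediction while watching n_trials of one stimulus.

    The stimulus yields a good outcome with probability p_good, mirroring
    the 80%/20% schedule described above. The delta rule is an assumption.
    """
    rng = random.Random(seed)
    predicted = 0.0
    for _ in range(n_trials):
        outcome = 1.0 if rng.random() < p_good else 0.0
        delta = outcome_prediction_error(outcome, predicted)
        predicted += learning_rate * delta  # move prediction toward outcome
    return predicted


predicted_value = simulate_observed_outcomes(n_trials=200)
```

Under these assumptions the prediction fluctuates around the stimulus's true probability of a good outcome, and the trial-by-trial `delta` values are the kind of quantity that can be related to brain activity.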

Figure 1. Experimental designs. (A) The upper panel, learning session. Participants learned the associations between abstract visual stimuli and corresponding joystick movements. In the experiment, the stimulus was presented on the screen for 1.5 s and the participants were asked to move the joystick left, right, up, or down. Once participants made a response, visual feedback was given to indicate whether the response was correct (happy green face; example 1) or wrong (sad red face; example 2). The participants learned one set of S-R associations by trial-and-error exploration and the other by observing experts performing the task. The lower panel, scanning session. Trials in the three different conditions were pseudo-randomly intermixed. Across all conditions, the visual stimuli were presented for 1.5 s and followed by a blank screen of variable duration and a short video of an actor's hand performing a joystick movement. After the movie, participants were asked to judge whether the actor in the video made a correct response by pressing the right or left mouse button corresponding to “yes” or “no” shown on the left or the right side of the screen, respectively. There was a 50% chance that the actor's response was correct and a 50% chance that it was wrong. Adapted from Monfardini et al. (2008) with permission. (B) Prediction error approach paradigm. After a variable ITI, participants were first asked to observe the confederate player being presented with two abstract fractal stimuli to choose from. Then participants were presented with the same stimuli, and the trial proceeded in the same manner. Participants were asked to respond when the fixation cross was circled, using the index finger for the left stimulus and the middle finger for the right stimulus on the response pad. Adapted from Burke et al. (2010) under Creative Commons Attribution License (CC BY). (C) Each trial started with a video showing a hand on a joystick performing one of the four possible movements in response to the presentation of a colored stimulus on the monitor screen. The total video length was 2 s and the colored stimulus lasted 1.5 s. The outcome image was presented after a variable delay. Participants were instructed to learn the correct stimulus-response-outcome association by looking at the video and outcomes. Adapted from Monfardini et al. (2013) under Creative Commons Attribution License (CC BY).

Neural Mechanisms of Observational Learning

Cognitive Flexibility and the Lateral Prefrontal Cortex

In uncertain and changing environments, flexible control of actions has evolutionary and developmental benefits as it enables goal-directed and adaptive behavior. Flexible control of actions requires an understanding of the outcome (reward or punishment) associated with a given action (Burke et al., 2010), and it is well-established that cognitive flexibility plays an important role in both reinforcement learning and instruction-based learning. In reinforcement learning, where trial-and-error exploration is commonly used, individuals can use the outcomes associated with previous actions to determine future actions (Thorndike, 1970; Mackintosh, 1983; Balleine and Dickinson, 1998; Skinner, 2019). In instruction-based learning, individuals can utilize learned rules and representations to choose the correct actions (Cole et al., 2013a). Theoretically speaking, cognitive flexibility is also required in observational learning, as individuals learn the associations between other agents' actions and their outcomes and use this information to choose the correct responses rapidly.

The lateral prefrontal cortex (LPFC) is crucial when a task places a high demand on cognitive flexibility, e.g., during learning of novel tasks (Cole et al., 2013a), decision making (McGuire and Botvinick, 2010), and task-switching (Braver et al., 2003; Ruge et al., 2013). Previous research has confirmed the essential role of the LPFC in instruction-based learning in terms of transferring novel rules into execution rapidly (Cole et al., 2013a, 2016; Hampshire et al., 2016), where a high degree of cognitive flexibility was required. Studies on observational learning have also shown the involvement of the dorsolateral prefrontal cortex (DLPFC) and the ventrolateral prefrontal cortex (VLPFC; Monfardini et al., 2008, 2013; Burke et al., 2010) once the rule was learned, which is consistent with the notion that the LPFC is engaged during acquisition of novel rules.

Note that the only difference between IBL and observational learning is that observational learning of novel rules requires subjects to learn by observing others' actions and/or the outcomes associated with those actions, whereas during IBL, subjects learn from explicit linguistic or symbolic instructions. According to previous research on IBL (Cole et al., 2013a), abstract IBL activates the anterior LPFC while concrete IBL activates the middle LPFC. In abstract IBL, participants were asked to judge whether two words have the same property. For example, the stimuli might be “apple” and “grape,” and the participants were required to press down the left index finger if both kinds of fruit were sweet and press down the right index finger if either of them was not sweet. In contrast, similar to the S-R associations in observational learning research, the concrete IBL tasks asked participants to press a button when seeing a novel stimulus. For instance, the instruction might ask the participants to press down the left index finger when seeing the shape “square” and to press down the right index finger when seeing the shape “circle.” Research has identified that the concrete IBL tasks activated more posterior areas of the LPFC and that activation of the LPFC shifted posteriorly with practice in both abstract and concrete IBL. One interpretation is that once the novel task becomes routine after a certain amount of practice, the task representation also becomes more concrete. Thus, we hypothesize that the more posterior parts of the LPFC will be activated during observational learning because watching someone performing the task would be more concrete than transferring linguistic rules into programmatic execution. We also expect that practice of novel tasks in observational learning will lead to an anterior-posterior shift in the LPFC. However, further studies are needed to confirm this hypothesis.

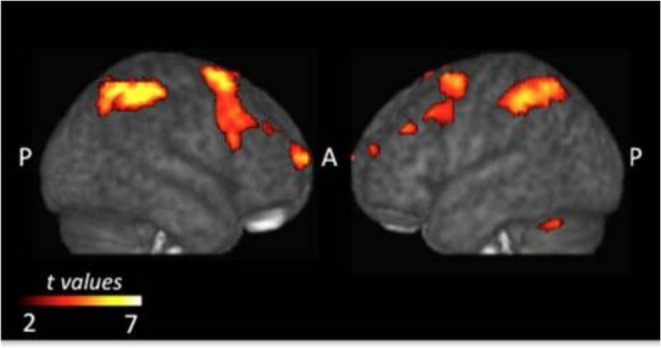

Common Networks in Acquiring Rules

Monfardini et al. (2013) found that, regardless of whether S-R associations are learned via observation or trial-and-error, three documented cerebral systems were involved in the acquisition stage: the dorsal frontoparietal, the fronto-striatal, and the cerebellar networks (Figure 2). The most straightforward interpretation is that during both types of learning, the brain builds a task model linking rule and response, regardless of how the rules are acquired. The dorsal frontoparietal system consists of the superior and inferior parietal lobes and the dorsal premotor cortex, and it plays a central role in sensorimotor transformation, goal-directed attentional control to stimulus and response, and instrumental learning. Previous neuroimaging research has also confirmed its contribution to trial-and-error learning (Eliassen et al., 2003; Law et al., 2005), specifically during the processing of outcomes (Brovelli et al., 2008). This evidence suggests that processing of others' successes and errors during observational learning might involve the same brain systems as individual learning, sensorimotor transformation, and goal-directed attentional control (Monfardini et al., 2013).

Figure 2. Common brain regions that were more active during the acquisition phase of both types of learning. Adapted from Monfardini et al. (2013) under Creative Commons Attribution License (CC BY).

The fronto-striatal networks comprise the left dorsal striatum, the anterior ventrolateral and dorsolateral prefrontal cortices, and the supplementary motor area (SMA), which are considered to be of crucial importance for goal-directed operations during individual instrumental learning (Yin and Knowlton, 2006; Balleine et al., 2007; Graybiel, 2008; Yin et al., 2008; Packard, 2009; White, 2009; Ashby et al., 2010; Balleine and O'Doherty, 2010). Previous research has observed activity in the caudate nucleus and the ventrolateral and dorsolateral frontal cortex during individual learning, and also in the premotor and supplementary motor areas (Frith and Frith, 1999, 2012; Toni and Passingham, 1999; Toni et al., 2001; Tricomi et al., 2004; Boettiger and D'Esposito, 2005; Delgado et al., 2005; Galvan et al., 2005; Seger and Cincotta, 2005; Grol et al., 2006; Haruno and Kawato, 2006; Brovelli et al., 2008). Specifically, the anterior caudate nucleus might integrate information about performance and cognitive control demand during individual instrumental learning (Brovelli et al., 2011), whereas the ventrolateral prefrontal cortex (VLPFC) is involved in the retrieval of visuomotor associations learned either by trial-and-error or by observation of others' actions (Monfardini et al., 2008). The fronto-striatal networks are also critical for adaptively implementing a wide variety of tasks where a high level of cognitive flexibility is required. These networks' ability to adapt to various contexts is made possible by the “flexible hubs,” which include neural systems that rapidly update their patterns of global functional connectivity depending on the task demands (Cole et al., 2013b).

The cerebellar network, located bilaterally in the cerebellum, was recruited in outcome processing at early stages of learning. Clinical evidence has shown that cerebellar lesions can give rise to impairments in procedural learning and cognitive planning (Grafman et al., 1992; Appollonio et al., 1993; Pascual-Leone et al., 1993; Gomez-Beldarrain et al., 1998). Moreover, a study that employed repetitive transcranial magnetic stimulation (rTMS; Torriero et al., 2007) has demonstrated the role of cerebellar regions in acquiring new motor patterns through both observation and trial-and-error. Monfardini et al. (2013) concluded that this network is involved in both observational learning and trial-and-error learning, even if there was no need to acquire new motor patterns.

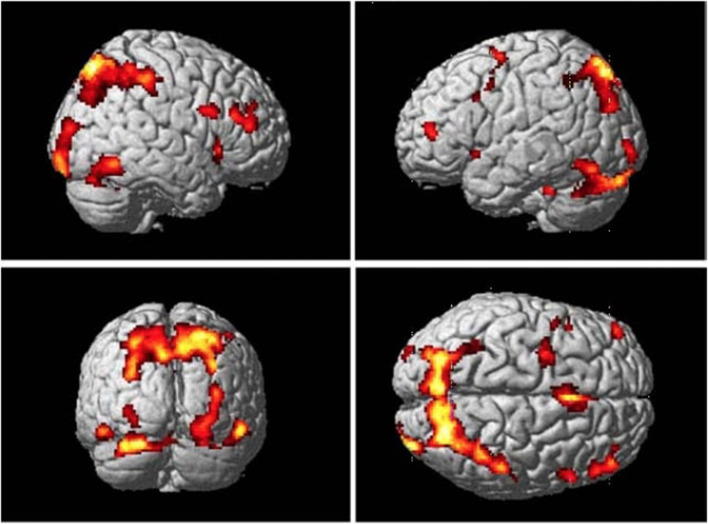

Common Networks in Retrieval of Associations

S-R associations can be learned through instruction, observation, and trial-and-error, thus common networks involved in retrieval of associations are proposed. Results from the conjunction analysis in Monfardini et al. ( 2008 ) has demonstrated that when newly acquired rules were being retrieved, with the brain network consisting of the right ventrolateral and anterior frontal cortices, pre-SMA, as well as the parietal cortex, was highly involved ( Figure 3 ; Bunge et al., 2003 ; Bunge, 2004 ; Donohue et al., 2005 ; Crone et al., 2006 ). This finding was consistent with the conclusions of Donohue et al. ( 2005 ) that assessed the contribution of the inferior frontal junction to the retrieval of motor responses associated with symbolic cues. It also suggested that the posterior medial temporal gyrus plays a crucial role in the representation of arbitrary associations (Donohue et al., 2005 ). The most straightforward interpretation of these findings is that during both observational learning and trial-and-error learning, the brain builds a task model which links rules with responses, thus involving brain networks that are commonly activated during retrieval of associations.

Common brain regions that were more active at the retrieval stage while participants viewed stimuli they had recently learned, by either trial-and-error exploration or observation, than while they viewed control stimuli. Adopted from Monfardini et al. (2008) with permission.

Specific Networks for the Observational Learning

When rules learned through observation were retrieved, the observation of abstract stimuli and their associations with corresponding responses activated a set of brain regions including the right pars triangularis (BA 45), the right inferior parietal lobule, and the posterior visual areas. Monfardini et al. (2008) compared the changes in BOLD signals in the right pars triangularis during retrieval with those during movie watching and identified similar patterns of activation when subjects retrieved motor responses associated with a visual arbitrary stimulus. This finding is consistent with the notion that the pars triangularis is engaged in observation of actions, but not in imitation or execution of actions (Molnar-Szakacs et al., 2005). Monfardini et al. (2008) proposed that the right pars triangularis should not be considered part of the mirror system; rather, this brain region is related to the suppression of action execution during both observation and motor imagery (Deiber et al., 1998; Molnar-Szakacs et al., 2006). Regarding activations in the posterior visual areas, Monfardini et al. (2008) suggested that they may be a result of top-down modulations. In line with this hypothesis, neuroimaging studies have proposed that during the execution of a given task, a frontal-parietal network exerts control over the visual cortex through top-down signals that modulate its activity. In Monfardini et al. (2008), attention to the hand was an intrinsic characteristic of observational learning. Meanwhile, the retrieval process may reactivate the movements observed during learning, and the top-down modulations may influence distinct visual areas, depending on whether the rules were learned through observation or trial-and-error processes (Super et al., 2001; Vidyasagar and Pigarev, 2007).

During implementation, many brain areas were significantly more active during the presentation of incorrect outcomes in observational learning than in individual learning. In particular, the activated clusters of bilateral brain regions include the middle cingulate cortex (MCC), the posterior medial frontal cortex (pMFC), the anterior insula, and the posterior superior temporal sulcus (pSTS). Previous research has demonstrated that the pMFC and the anterior insula are both part of the error-monitoring network (Radke et al., 2011). The pMFC is located within the dorsal anterior cingulate cortex, which has been found to play a central role in individual trial-and-error learning processes (Holroyd and Coles, 2002; Mars et al., 2005). A study by Monfardini et al. (2013) has also suggested that the pMFC is highly involved in both error monitoring and subsequent behavioral adjustments. More specifically, it has been suggested that when adaptations are required according to the outcomes associated with an action, the performance-monitoring system in the pMFC signals the need for adjustments (Ullsperger and Von Cramon, 2003). Recent results from electrophysiological experiments on monkeys suggested that neurons in the dorsomedial prefrontal cortex selectively respond to others' incorrect actions, and their patterns of activity are associated with the subsequent behavioral adjustments (Yoshida et al., 2012). The anterior insular cortex is involved in both performance monitoring processes (Radke et al., 2011) and the autonomic responses to error in non-social contexts (Ullsperger and Von Cramon, 2003), and its level of activity increases with error awareness (Klein et al., 2007; Ullsperger et al., 2010).
This network is also activated during error detection and in non-learning contexts (Ridderinkhof et al., 2004; de Bruijn et al., 2009; Radke et al., 2011); however, there has been no evidence suggesting that its activity could differentiate learning from others from individual learning. Monfardini et al. (2013) provided novel insights into the role of the pMFC-anterior insular network in the processing of others' mistakes during observational learning. To date, a number of studies have established an association between the level of error-related activity and subsequent learning performance (Klein et al., 2007; Hester et al., 2008; van der Helden et al., 2010). Monfardini et al. (2013) speculated that the neurons in the pMFC-anterior insular network may act as the neural correlates of a cognitive bias described in neuroeconomics and social psychology: the predisposition of humans to process the errors of others differently from their own errors. Specifically, the “actor-observer” cognitive bias represents the tendency to attribute others' failures to their personal mistakes while attributing one's own failures to the situation (Jones and Nisbett, 1987). For a deeper understanding of the relative effectiveness of learning from others' errors and from one's own errors, further neuroimaging research is needed (Monfardini et al., 2013).

Monfardini et al. (2013) also demonstrated that the posterior superior temporal sulcus (pSTS) is specifically correlated with the processing of others' errors during observational learning. Previous studies on non-human primates showed that the STS is anatomically well-suited to integrating information from both the ventral and dorsal visual pathways, and a number of studies have analyzed social cues in the STS region, which is sensitive to stimuli that signal the actions of another individual (Pandya and Yeterian, 1985; Boussaoud et al., 1990; Baizer et al., 1991). Particular emphasis has been given to the pSTS, which has been regarded as the substrate of goal-directed behavior (Saxe et al., 2004) and social perception (Allison et al., 2000). Overall, previous research supports the hypothesis that perception of agency activates the pSTS (Tankersley et al., 2007), and the activity in the pSTS might be part of a larger network mapping observed actions to motor programs (Rilling et al., 2004; Keysers and Gazzola, 2006). Moreover, the pSTS is considered to be actively involved in the attribution of mental states to other organisms (Frith and Frith, 1999, 2003; Saxe and Kanwisher, 2003; Samson et al., 2004) and the extraction of contextual and intentional cues from goal-directed behavior (Toni et al., 2001). More importantly, activity in the pSTS has been observed in humans during imitation of actions (Iacoboni, 2005). Results from Monfardini et al. (2013) supported the hypothesized role of the pSTS in the processing of social information, which is a necessary component of the learning stage of observational learning. Additionally, the fact that the pSTS was more activated by the errors made by others than by one's own errors may imply more intensive mentalizing (e.g., what does the agent think now that they know that a certain action does not lead to positive outcomes?) or the reactivation of the visual representations of an observed action to decrease its association with the corresponding stimulus (Monfardini et al., 2013).

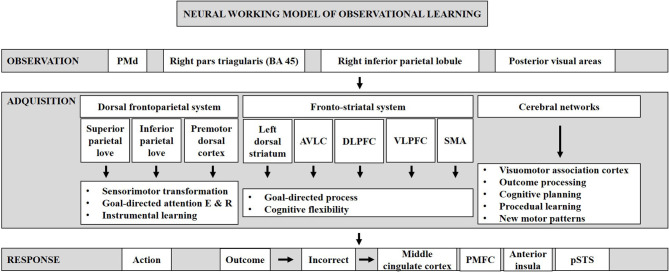

Neural Working Model of Observational Learning

To shed light on how observational learning works and its neural basis, we created a neural working model of observational learning (Figure 4). Our neural working model of observational learning includes three phases: observation, acquisition, and response. The first phase is observation, defined as observation of abstract stimuli and their association with specific bodily movements, which activates a network consisting of a number of brain regions: the dorsal premotor cortex (Cisek and Kalaska, 2004), the right pars triangularis (BA 45), the right inferior parietal lobule, and the posterior visual areas (Monfardini et al., 2008). The ability to learn through observation is a powerful capacity of humans (Mattar and Gribble, 2005; Torriero et al., 2007), and previous studies have shown that when a symbolic representation of task performance is observed, neurons in the dorsal premotor cortex (PMd) respond in a manner similar to when the task is physically performed (Cisek and Kalaska, 2004).

Neural working model of observational learning. It can be divided into three phases: observation, acquisition, and response.

The second phase is acquisition of rules, which recruits three individual systems: the dorsal frontoparietal, the fronto-striatal, and the cerebellar networks. The dorsal frontoparietal network consists of the bilateral superior and inferior parietal lobes and the premotor dorsal cortices, which are thought to be involved in sensorimotor transformation, in the regulation of goal-directed attention to both stimulus and response, and in instrumental learning (Monfardini et al., 2013). The fronto-striatal network consists of the left dorsal striatum, the anterior ventro-lateral and dorso-lateral prefrontal cortices, the ventral lateral prefrontal cortex, and the SMA. During instrumental learning, this network is thought to facilitate goal-directed processes (Yin and Knowlton, 2006; Balleine et al., 2007; Graybiel, 2008; Yin et al., 2008; Packard, 2009; White, 2009; Ashby et al., 2010; Balleine and O'doherty, 2010). In early phases of learning, the cerebellar network, located bilaterally in the cerebellum, is involved in outcome processing and assessment. It is also related to cognitive planning and procedural learning. Moreover, the cerebellar structures play a role in the acquisition of new motor patterns learned through observation (Grafman et al., 1992; Appollonio et al., 1993; Pascual-Leone et al., 1993; Gomez-Beldarrain et al., 1998).

The third phase is response, in which the learner retrieves learned S-R contingencies and makes a response when seeing the stimulus. If the outcome of the response is incorrect, it activates the error-monitoring network comprising the middle cingulate cortex, the posterior medial frontal cortex (pMFC), the anterior insula, and the posterior superior temporal sulcus (pSTS; Radke et al., 2011). Learning of action-outcome associations is usually accompanied by a shift from feedback-based to response-based performance monitoring, and such associations can be learned well both actively and by observation (Bellebaum and Colosio, 2014).

Observational Learning vs. Imitation

Previous studies on observational learning have focused on the learning of novel motor patterns through imitation and mirror-like mechanisms (Monfardini et al., 2013). In the imitation tasks used in these studies, participants did not need to search for the correct response to the current stimuli from multiple observations. Instead, they were required to imitate other people's actions regardless of the outcomes. Classical imitation research has suggested that the main circuitry of imitation consists of the superior temporal sulcus and the mirror neuron system, which includes the posterior inferior frontal gyrus, the ventral premotor cortex, and the rostral inferior parietal lobule [see Iacoboni (2005) for a review]. Several studies have found that the frontoparietal putative mirror neuron system (pMNS), which consists of the ventral and dorsal premotor cortex, the inferior parietal lobule and adjacent somatosensory areas, and the middle temporal gyri, was strongly activated while participants were observing others' actions during the acquisition of motor patterns (Caspers et al., 2010). Moreover, the pMNS was also recruited when participants were watching others' actions without the need to imitate, or when they simply executed those actions (Monfardini et al., 2013).

However, the role of the pMNS is ambiguous in observational learning of arbitrary visuomotor associations because the differentiation between actions that result in positive feedback and actions that lead to negative feedback remains unexplored. In observational learning tasks, no novel motor responses have to be acquired during learning stages. Instead, new associations have to be established between the stimulus presented, acquired motor responses, and the associated outcomes. Monfardini et al. (2013) found that, in line with the activity of the pMNS outlined in the literature, both observational learning and individual trial-and-error learning activated a brain network that was also involved in simple action execution and observation. The increase in activation following outcome presentation was larger in observational learning than in trial-and-error learning in most trials. However, during the LeO task, the BOLD signal was larger in practice than in observation. The lower activation during the observation stage compared to the practice stage is a common finding in studies on the pMNS and might be explained by the fact that only ~10% of premotor neurons respond to action observations in primates (Gallese et al., 1996; Keysers et al., 2003). It is therefore challenging to infer why observational learning induces a slightly larger BOLD signal than trial-and-error learning does in somatosensorimotor regions. Researchers hypothesized that the BOLD signal in the somatosensorimotor regions was larger in LeO because, unlike in the trial-and-error learning condition, responses were not given by the participants during the S-R acquisition stage (Monfardini et al., 2013). Thus, participants might be strongly inclined to mentally re-enact the observed response upon knowing whether it was to be associated with the stimulus or not. Without overt execution, it would be important to use additional mental re-enactments of others' actions to consolidate the S-R association that needs to be established during the learning process. This notion is consistent with proactive control in instruction-based learning, where goal-relevant information is actively maintained in preparation for the anticipated high control demand (Cole et al., 2018).

Ventral Striatum and Social Learning

Burke et al. (2010) found that the ventral striatum, a brain region that has frequently been associated with the processing of prediction errors in individual instrumental learning, showed inverted coding patterns for observational prediction errors. Although Burke et al. (2010) did not present participants with a game situation, and the behavior of the confederate could not affect the probability of participants obtaining the reward, this inverse reward prediction error encoding of the confederate's behavioral outcomes was supported by previous studies emphasizing the critical role of the ventral striatum in competitive situations. Nevertheless, the following explanations must be carefully assessed, given that Burke et al. (2010) did not include non-social control trials in their task design. For example, the ventral striatum is involved when a competitor is punished (e.g., receives less money than oneself). This gives rise to several future research directions, including the role of the ventral striatum in learning from each other. In addition, are positive reward prediction errors a sophisticated result of viewing others' losses during observational learning, or is it simply rewarding to see the misfortune of others? Recent data suggested that the perceived similarity between the personalities of the participant and the confederate modulates activity in the ventral striatum when observing a confederate succeed in a non-learning task. In action-only learning scenarios, the individual outcome prediction error signals from the ventral striatum not only increase the selection accuracy of one's own outcome-oriented choices but can also refine predictions of others' choices based on information about their past actions (Burke et al., 2010).
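The notion of a prediction error, and of its inverted coding for observed outcomes, can be made concrete with a small numerical sketch. The example below assumes a simple delta-rule (Rescorla-Wagner-style) formulation, which is a common textbook form and not necessarily the model fitted by Burke et al. (2010). The function names, the learning rate, and the sign flip used to represent inverted coding are all illustrative assumptions.

```python
# Illustrative sketch only: a textbook delta-rule prediction error.
# Names, learning rate, and the sign flip are assumptions for illustration,
# not the model reported in the reviewed studies.

def prediction_error(outcome: float, expected: float) -> float:
    """Difference between the obtained outcome and the expected outcome."""
    return outcome - expected


def update_value(expected: float, outcome: float, learning_rate: float = 0.1) -> float:
    """Move the expectation a fraction of the way toward the outcome."""
    return expected + learning_rate * prediction_error(outcome, expected)


def inverted_observational_signal(observed_outcome: float, expected: float) -> float:
    """Hypothetical inverted coding: the signal has the opposite sign
    of the prediction error computed for another person's outcome."""
    return -prediction_error(observed_outcome, expected)


expected_reward = 0.5

# Own outcome better than expected: positive prediction error.
own_signal = prediction_error(1.0, expected_reward)

# The same outcome observed for a confederate: the sign is flipped.
observed_signal = inverted_observational_signal(1.0, expected_reward)

print(own_signal, observed_signal)
```

Under these assumptions, a better-than-expected outcome yields a positive signal when it is one's own and a negative signal when it is another person's, which is one way to read "inverted coding" in the paragraph above.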

Concluding Remarks

In conclusion, observational learning is an important cognitive process both in animals, which do not have language, and in humans, whose infants imitate and learn from adults during development and early learning stages. We surveyed three kinds of methodological approaches in the investigation of this cognitive process, with emphasis on different stages of learning as well as on different modeling aspects. The LPFC is essential for cognitive flexibility, which is required in observational learning, where subjects have to rapidly learn rules from observing others' actions and the outcomes associated with these actions. Trial-and-error learning and observational learning share some networks during both the acquisition and the application of rules, although observational learning also involves some additional networks. A neural working model has been developed for observational learning consisting of three phases: observation, acquisition, and response. This model is important because it disentangles the sub-processes and neural systems involved in observational learning. It provides foundations for future cognitive neuroscience and translational clinical research. Observational learning is different from imitation, both conceptually (observational learning involves processing of others' actions and outcomes to know how to react when encountering the same situation, whereas imitation only requires the subject to replicate others' actions) and neuroscientifically (the mirror neuron system is thought to be critical in imitation and is only partly activated in observational learning).

Future research questions include (a) how the observational learning processes in sports could be optimized to facilitate motor skill acquisition and improve performance, (b) how the brain activity patterns shift with practice in observational learning, and (c) how observational learning is employed in human infants who have not fully developed language abilities and how the brain supports this cognitive process. Imitation has been studied in children with the aim of understanding its relationship with development (Sebastianutto et al., 2017). However, relatively little attention has been paid to observational learning from a developmental perspective. Methods such as fMRI and transcranial magnetic stimulation (TMS), which are used with adults, cannot be applied to infants, making it challenging to directly analyze brain activation patterns in the study of observational learning. Previous works using electroencephalogram (EEG) have elucidated the properties of the mu rhythm in infants during imitation (e.g., Marshall and Meltzoff, 2014), and it would be important for future studies to use EEG to investigate how observational learning relates to development in infants and how it differs from imitation in infants.

As observational learning and imitation allow for scenario-specific adaptive behavior, learning and task execution, increasing interest has been shown in the application of findings in both fields to computational modeling, artificial intelligence, and robotics (Liu et al., 2018). More specifically, research has demonstrated that biologically inspired learning models could be implemented to enable robotic learning by imitation and allow for human-robot collaboration (Chung et al., 2015). Furthermore, modeling of imitation and observational learning using robots facilitates testing and refinement of hypotheses in developmental psychology and human-robot interaction. In one previous study, developmental robots were used to model human cognitive development and to examine how learning could be achieved through interaction that involves mutual imitation (Boucenna et al., 2016). Taken together, in future studies, it would be of considerable relevance to examine how understanding of observational learning processes and imitation could lead to advancements in the fields of robotics and cognitive modeling.

From a clinical-translational perspective, previous research has revealed that the functional connectivity of some of the LPFC networks is impacted in patients who suffer from traumatic brain injuries (Hampshire et al., 2013) or patients with neurodegenerative disorders (Grafman et al., 1992). As an example, patients with Parkinson's disease who had abnormal striatum function showed slower acquisition in contingency reversal learning paradigms (Williams-Gray et al., 2008). Therefore, another sensible future direction is to determine whether the connectivity effects observed during simple observational learning can provide clinical diagnostic markers in populations that suffer from cognitive impairments.

Limitations

The current review focused on neuroimaging literature of observational learning using healthy participants. Other methodologies (e.g., electrophysiology and lesion studies) may further confirm the role of each brain region in observational learning in our review. Moreover, a review on studies from atypical participants (e.g., infants, aging adults, people with neurological conditions) may provide further insights on the relevance of observational learning to other aspects (e.g., developmental theories and rehabilitation).

Author Contributions

WK: conceptualization, writing–original draft, visualization, writing–review & editing, and funding acquisition. SP: conceptualization, writing–original draft, and visualization. JM: writing–review & editing. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Funding. This work was supported by the Imperial Open Access Fund.

- Allison T., Puce A., McCarthy G. (2000). Social perception from visual cues: role of the STS region. Trends Cogn. Sci. 4, 267–278. 10.1016/s1364-6613(00)01501-1 [ DOI ] [ PubMed ] [ Google Scholar ]

- Appollonio I. M., Grafman J., Schwartz V., Massaquoi S., Hallett M. (1993). Memory in patients with cerebellar degeneration. Neurology 43, 1536–1536. 10.1212/WNL.43.8.1536 [ DOI ] [ PubMed ] [ Google Scholar ]

- Ashby F. G., Turner B. O., Horvitz J. C. (2010). Cortical and basal ganglia contributions to habit learning and automaticity. Trends Cogn. Sci. 14, 208–215. 10.1016/j.tics.2010.02.001 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Baizer J. S., Ungerleider L. G., Desimone R. (1991). Organization of visual inputs to the inferior temporal and posterior parietal cortex in macaques. J. Neurosci. 11, 168–190. 10.1523/JNEUROSCI.11-01-00168.1991 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Balleine B. W., Delgado M. R., Hikosaka O. (2007). The role of the dorsal striatum in reward and decision-making. J. Neurosci. 27, 8161–8165. 10.1523/JNEUROSCI.1554-07.2007 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Balleine B. W., Dickinson A. (1998). Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology 37, 407–419. 10.1016/S0028-3908(98)00033-1 [ DOI ] [ PubMed ] [ Google Scholar ]

- Balleine B. W., O'doherty J. P. (2010). Human and rodent homologies in action control: corticostriatal determinants of goal-directed and habitual action. Neuropsychopharmacology 35, 48–69. 10.1038/npp.2009.131 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Bellebaum C., Colosio M. (2014). From feedback- to response-based performance monitoring in active and observational learning. J. Cogn. Neurosci. 26, 2111–2127. 10.1162/jocn_a_00612 [ DOI ] [ PubMed ] [ Google Scholar ]

- Boettiger C. A., D'Esposito M. (2005). Frontal networks for learning and executing arbitrary stimulus response associations. J. Neurosci. 25, 2723–2732. 10.1523/JNEUROSCI.3697-04.2005 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Boucenna S., Cohen D., Meltzoff A. N., Gaussier P., Chetouani M. (2016). Robots learn to recognize individuals from imitative encounters with people and avatars. Sci. Rep. 6:19908. 10.1038/srep19908 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Boussaoud D., Ungerleider L. G., Desimone R. (1990). Pathways for motion analysis: cortical connections of the medial superior temporal and fundus of the superior temporal visual areas in the macaque. J. Comp. Neurol. 296, 462–495. 10.1002/cne.902960311 [ DOI ] [ PubMed ] [ Google Scholar ]

- Braver T. S., Reynolds J. R., Donaldson D. I. (2003). Neural mechanisms of transient and sustained cognitive control during task switching. Neuron 39, 713–726. 10.1016/S0896-6273(03)00466-5 [ DOI ] [ PubMed ] [ Google Scholar ]

- Brovelli A., Laksiri N., Nazarian B., Meunier M., Boussaoud D. (2008). Understanding the neural computations of arbitrary visuomotor learning through fMRI and associative learning theory. Cerebral Cortex 18, 1485–1495. 10.1093/cercor/bhm198 [ DOI ] [ PubMed ] [ Google Scholar ]

- Brovelli A., Nazarian B., Meunier M., Boussaoud D. (2011). Differential roles of caudate nucleus and putamen during instrumental learning. Neuroimage 57, 1580–1590. 10.1016/j.neuroimage.2011.05.059 [ DOI ] [ PubMed ] [ Google Scholar ]

- Bunge S. A. (2004). How we use rules to select actions: a review of evidence from cognitive neuroscience. Cognitive Affect. Behav. Neurosci. 4, 564–579. 10.3758/CABN.4.4.564 [ DOI ] [ PubMed ] [ Google Scholar ]

- Bunge S. A., Kahn I., Wallis J. D., Miller E. K., Wagner A. D. (2003). Neural circuits subserving the retrieval and maintenance of abstract rules. J. Neurophysiol. 90, 3419–3428. 10.1152/jn.00910.2002 [ DOI ] [ PubMed ] [ Google Scholar ]

- Burke C. J., Tobler P. N., Baddeley M., Schultz W. (2010). Neural mechanisms of observational learning. Proc. Natl. Acad. Sci. U.S.A. 107, 14431–14436. 10.1073/pnas.1003111107 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Byrne R. W., Russon A. E. (1998). Learning by imitation: a hierarchical approach. Behav. Brain Sci. 21, 667–684. 10.1017/S0140525X98001745 [ DOI ] [ PubMed ] [ Google Scholar ]

- Caspers S., Zilles K., Laird A. R., Eickhoff S. B. (2010). ALE meta-analysis of action observation and imitation in the human brain. Neuroimage 50, 1148–1167. 10.1016/j.neuroimage.2009.12.112 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Chung M. J. Y., Friesen A. L., Fox D., Meltzoff A. N., Rao R. P. (2015). A Bayesian developmental approach to robotic goal-based imitation learning. PLoS ONE 10:e0141965. 10.1371/journal.pone.0141965 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Cisek P., Kalaska J. F. (2004). Neural correlates of mental rehearsal in dorsal premotor cortex. Nature 431, 993–996. 10.1038/nature03005 [ DOI ] [ PubMed ] [ Google Scholar ]

- Cole M. W., Ito T., Braver T. S. (2016). The behavioral relevance of task information in human prefrontal cortex. Cerebral Cortex 26, 2497–2505. 10.1093/cercor/bhv072 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Cole M. W., Laurent P., Stocco A. (2013a). Rapid instructed task learning: a new window into the human brain's unique capacity for flexible cognitive control. Cognitive Affective Behav. Neurosci. 13, 1–22. 10.3758/s13415-012-0125-7 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Cole M. W., Patrick L. M., Meiran N., Braver T. S. (2018). A role for proactive control in rapid instructed task learning. Acta Psychol. 184, 20–30. 10.1016/j.actpsy.2017.06.004 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Cole M. W., Reynolds J. R., Power J. D., Repovs G., Anticevic A., Braver T. S. (2013b). Multi-task connectivity reveals flexible hubs for adaptive task control. Nat. Neurosci. 16:1348. 10.1038/nn.3470 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Crone E. A., Donohue S. E., Honomichl R., Wendelken C., Bunge S. A. (2006). Brain regions mediating flexible rule use during development. J. Neurosci. 26, 11239–11247. 10.1523/JNEUROSCI.2165-06.2006 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- de Bruijn E. R., de Lange F. P., von Cramon D. Y., Ullsperger M. (2009). When errors are rewarding. J. Neurosci. 29, 12183–12186. 10.1523/JNEUROSCI.1751-09.2009 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Deiber M. P., Ibanez V., Honda M., Sadato N., Raman R., Hallett M. (1998). Cerebral processes related to visuomotor imagery and generation of simple finger movements studied with positron emission tomography. Neuroimage 7, 73–85. 10.1006/nimg.1997.0314 [ DOI ] [ PubMed ] [ Google Scholar ]

- Delgado M. R., Miller M. M., Inati S., Phelps E. A. (2005). An fMRI study of reward-related probability learning. Neuroimage 24, 862–873. 10.1016/j.neuroimage.2004.10.002 [ DOI ] [ PubMed ] [ Google Scholar ]

- Donohue S. E., Wendelken C., Crone E. A., Bunge S. A. (2005). Retrieving rules for behavior from long-term memory. Neuroimage 26, 1140–1149. 10.1016/j.neuroimage.2005.03.019 [ DOI ] [ PubMed ] [ Google Scholar ]

- Eliassen J. C., Souza T., Sanes J. N. (2003). Experience-dependent activation patterns in human brain during visual-motor associative learning. J. Neurosci. 23, 10540–10547. 10.1523/JNEUROSCI.23-33-10540.2003 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Frith C. D., Frith U. (1999). Interacting minds–a biological basis. Science 286, 1692–1695. 10.1126/science.286.5445.1692 [ DOI ] [ PubMed ] [ Google Scholar ]

- Frith U., Frith C. D. (2003). Development and neurophysiology of mentalizing. Philos. Trans. R. Soc. Lond. B Biol. Sci. 358, 459–473. 10.1098/rstb.2002.1218 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Frith C. D., Frith U. (2012). Mechanisms of social cognition. Ann. Rev. Psychol. 63,287–313. 10.1146/annurev-psych-120710-100449 [ DOI ] [ PubMed ] [ Google Scholar ]

- Gallese V., Fadiga L., Fogassi L., Rizzolatti G. (1996). Action recognition in the premotor cortex. Brain 119, 593–609. 10.1093/brain/119.2.593 [ DOI ] [ PubMed ] [ Google Scholar ]

- Galvan A., Hare T. A., Davidson M., Spicer J., Glover G., Casey B. J. (2005). The role of ventral frontostriatal circuitry in reward-based learning in humans. J. Neurosci. 25, 8650–8656. 10.1523/JNEUROSCI.2431-05.2005 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Gomez-Beldarrain M., Garcia-Monco J. C., Rubio B., Pascual-Leone A. (1998). Effect of focal cerebellar lesions on procedural learning in the serial reaction time task. Exp. Brain Res. 120, 25–30. 10.1007/s002210050374 [ DOI ] [ PubMed ] [ Google Scholar ]

- Grafman J., Litvan I., Massaquoi S., Stewart M., Sirigu A., Hallett M. (1992). Cognitive planning deficit in patients with cerebellar atrophy. Neurology 42, 1493–1493. 10.1212/WNL.42.8.1493 [ DOI ] [ PubMed ] [ Google Scholar ]

- Graybiel A. M. (2008). Habits, rituals, and the evaluative brain. Annu. Rev. Neurosci. 31, 359–387. 10.1146/annurev.neuro.29.051605.112851 [ DOI ] [ PubMed ] [ Google Scholar ]

- Grol M. J., de Lange F. P., Verstraten F. A., Passingham R. E., Toni I. (2006). Cerebral changes during performance of overlearned arbitrary visuomotor associations. J. Neurosci. 26, 117–125. 10.1523/JNEUROSCI.2786-05.2006 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Hampshire A., Hellyer P. J., Parkin B., Hiebert N., MacDonald P., Owen A. M., et al. (2016). Network mechanisms of intentional learning. Neuroimage 127, 123–134. 10.1016/j.neuroimage.2015.11.060 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Hampshire A., MacDonald A., Owen A. M. (2013). Hypoconnectivity and hyperfrontality in retired American football players. Sci. Rep. 3:2972. 10.1038/srep02972 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Haruno M., Kawato M. (2006). Different neural correlates of reward expectation and reward expectation error in the putamen and caudate nucleus during stimulus-action-reward association learning. J. Neurophysiol. 95, 948–959. 10.1152/jn.00382.2005 [ DOI ] [ PubMed ] [ Google Scholar ]

- Hester R., Barre N., Murphy K., Silk T. J., Mattingley J. B. (2008). Human medial frontal cortex activity predicts learning from errors. Cerebral Cortex 18, 1933–1940. 10.1093/cercor/bhm219 [ DOI ] [ PubMed ] [ Google Scholar ]

- Holroyd C. B., Coles M. G. (2002). The neural basis of human error processing: reinforcement learning, dopamine, and the error-related negativity. Psychol. Rev. 109:679. 10.1037/0033-295X.109.4.679 [ DOI ] [ PubMed ] [ Google Scholar ]

- Iacoboni M. (2005). Neural mechanisms of imitation. Curr. Opin. Neurobiol. 15, 632–637. 10.1016/j.conb.2005.10.010 [ DOI ] [ PubMed ] [ Google Scholar ]

- Jones E. E., Nisbett R. E. (1987). The Actor and the Observer: Divergent Perceptions of the Causes of Behavior. New York, NY: General Learning Press. [ Google Scholar ]

- Keysers C., Gazzola V. (2006). Towards a unifying neural theory of social cognition. Prog. Brain Res. 156, 379–401. 10.1016/S0079-6123(06)56021-2 [ DOI ] [ PubMed ] [ Google Scholar ]

- Keysers C., Kohler E., Umiltà M. A., Nanetti L., Fogassi L., Gallese V. (2003). Audiovisual mirror neurons and action recognition. Exp. Brain Res. 153, 628–636. 10.1007/s00221-003-1603-5 [ DOI ] [ PubMed ] [ Google Scholar ]

- Klein T. A., Neumann J., Reuter M., Hennig J., von Cramon D. Y., Ullsperger M. (2007). Genetically determined differences in learning from errors. Science 318, 1642–1645. 10.1126/science.1145044 [ DOI ] [ PubMed ] [ Google Scholar ]

- Law J. R., Flanery M. A., Wirth S., Yanike M., Smith A. C., Frank L. M., et al. (2005). Functional magnetic resonance imaging activity during the gradual acquisition and expression of paired-associate memory. J. Neurosci. 25, 5720–5729. 10.1523/JNEUROSCI.4935-04.2005 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Liu Y., Gupta A., Abbeel P., Levine S. (2018). Imitation from observation: Learning to imitate behaviors from raw video via context translation, in 2018 IEEE International Conference on Robotics and Automation (ICRA) (Brisbane, QLD: IEEE), 1118–1125. 10.1109/ICRA.2018.8462901 [ DOI ] [ Google Scholar ]

- Mackintosh N. J. (1983). Conditioning and Associative Learning (p. 316). Oxford: Clarendon Press. [ Google Scholar ]

- Mars R. B., Coles M. G., Grol M. J., Holroyd C. B., Nieuwenhuis S., Hulstijn W., et al. (2005). Neural dynamics of error processing in medial frontal cortex. Neuroimage 28, 1007–1013. 10.1016/j.neuroimage.2005.06.041 [ DOI ] [ PubMed ] [ Google Scholar ]

- Marshall P. J., Meltzoff A. N. (2014). Neural mirroring mechanisms and imitation in human infants. Philos. Trans. Royal Society B 369:20130620. 10.1098/rstb.2013.0620 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Mattar A. A., Gribble P. L. (2005). Motor learning by observing. Neuron 46, 153–160. 10.1016/j.neuron.2005.02.009 [ DOI ] [ PubMed ] [ Google Scholar ]

- McGuire J. T., Botvinick M. M. (2010). Prefrontal cortex, cognitive control, and the registration of decision costs. Proc. Natl. Acad. Sci. U.S.A. 107, 7922–7926. 10.1073/pnas.0910662107 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Meltzoff A. N. (1988). Infant imitation after a 1-week delay: long-term memory for novel acts and multiple stimuli. Dev. Psychol. 24:470. 10.1037/0012-1649.24.4.470 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Meltzoff A. N. (2007). The 'like me' framework for recognizing and becoming an intentional agent. Acta Psychol. 124, 26–43. 10.1016/j.actpsy.2006.09.005 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Meltzoff A. N., Marshall P. J. (2018). Human infant imitation as a social survival circuit. Curr. Opin. Behav. Sci. 24, 130–136. 10.1016/j.cobeha.2018.09.006 [ DOI ] [ Google Scholar ]

- Meltzoff A. N., Moore M. K. (1997). Explaining facial imitation: a theoretical model. Infant Child Dev. 6, 179–192. [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Molnar-Szakacs I., Iacoboni M., Koski L., Mazziotta J. C. (2005). Functional segregation within pars opercularis of the inferior frontal gyrus: evidence from fMRI studies of imitation and action observation. Cerebral Cortex 15, 986–994. 10.1093/cercor/bhh199 [ DOI ] [ PubMed ] [ Google Scholar ]

- Molnar-Szakacs I., Kaplan J., Greenfield P. M., Iacoboni M. (2006). Observing complex action sequences: the role of the frontoparietal mirror neuron system. Neuroimage 33, 923–935. 10.1016/j.neuroimage.2006.07.035 [ DOI ] [ PubMed ] [ Google Scholar ]

- Monfardini E., Brovelli A., Boussaoud D., Takerkart S., Wicker B. (2008). I learned from what you did: retrieving visuomotor associations learned by observation. Neuroimage 42, 1207–1213. 10.1016/j.neuroimage.2008.05.043 [ DOI ] [ PubMed ] [ Google Scholar ]

- Monfardini E., Gazzola V., Boussaoud D., Brovelli A., Keysers C., Wicker B. (2013). Vicarious neural processing of outcomes during observational learning. PLoS ONE 8:e73879. 10.1371/journal.pone.0073879 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Packard M. G. (2009). Exhumed from thought: basal ganglia and response learning in the plus-maze. Behav. Brain Res. 199, 24–31. 10.1016/j.bbr.2008.12.013 [ DOI ] [ PubMed ] [ Google Scholar ]

- Pandya D. N., Yeterian E. H. (1985). Architecture and connections of cortical association areas, in Association and Auditory Cortices (Boston, MA: Springer), 3–61. 10.1007/978-1-4757-9619-3_1 [ DOI ] [ Google Scholar ]

- Pascual-Leone A., Grafman J., Clark K., Stewart M., Massaquoi S., Lou J. S., et al. (1993). Procedural learning in Parkinson's disease and cerebellar degeneration. Ann. Neurol. 34, 594–602. 10.1002/ana.410340414 [ DOI ] [ PubMed ] [ Google Scholar ]

- Radke S., De Lange F. P., Ullsperger M., De Bruijn E. R. A. (2011). Mistakes that affect others: an fMRI study on processing of own errors in a social context. Exp. Brain Res. 211, 405–413. 10.1007/s00221-011-2677-0 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Ridderinkhof K. R., Ullsperger M., Crone E. A., Nieuwenhuis S. (2004). The role of the medial frontal cortex in cognitive control. Science 306, 443–447. 10.1126/science.1100301 [ DOI ] [ PubMed ] [ Google Scholar ]

- Rilling J. K., Sanfey A. G., Aronson J. A., Nystrom L. E., Cohen J. D. (2004). The neural correlates of theory of mind within interpersonal interactions. Neuroimage 22, 1694–1703. 10.1016/j.neuroimage.2004.04.015 [ DOI ] [ PubMed ] [ Google Scholar ]

- Ruge H., Jamadar S., Zimmermann U., Karayanidis F. (2013). The many faces of preparatory control in task switching: reviewing a decade of fMRI research. Hum. Brain Mapp. 34, 12–35. 10.1002/hbm.21420 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Samson D., Apperly I. A., Chiavarino C., Humphreys G. W. (2004). Left temporoparietal junction is necessary for representing someone else's belief. Nat. Neurosci. 7, 499–500. 10.1038/nn1223 [ DOI ] [ PubMed ] [ Google Scholar ]

- Saxe R., Kanwisher N. (2003). People thinking about thinking people: the role of the temporo-parietal junction in theory of mind. Neuroimage 19, 1835–1842. 10.1016/s1053-8119(03)00230-1 [ DOI ] [ PubMed ] [ Google Scholar ]

- Saxe R., Xiao D. K., Kovacs G., Perrett D. I., Kanwisher N. (2004). A region of right posterior superior temporal sulcus responds to observed intentional actions. Neuropsychologia 42, 1435–1446. 10.1016/j.neuropsychologia.2004.04.015 [ DOI ] [ PubMed ] [ Google Scholar ]

- Sebastianutto L., Mengotti P., Spiezio C., Rumiati R. I., Balaban E. (2017). Dual-route imitation in preschool children. Acta Psychol. 173, 94–100. 10.1016/j.actpsy.2016.12.007 [ DOI ] [ PubMed ] [ Google Scholar ]

- Seger C. A., Cincotta C. M. (2005). The roles of the caudate nucleus in human classification learning. J. Neurosci. 25, 2941–2951. 10.1523/JNEUROSCI.3401-04.2005 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Skinner B. F. (2019). The Behavior of Organisms: An Experimental Analysis. Cambridge, MA: BF Skinner Foundation. Available online at: https://books.google.com/books?hl=en&lr=&id=S9WNCwAAQBAJ&oi=fnd&pg=PT20&dq=Behavior+of+Organisms:+An+Experimental+Analysis&ots=LmrranBzF1&sig=pZBGhnD6lI9Rooqf3N5wE88GIYU#v=onepage&q=Behavior%20of%20Organisms%3A%20An%20Experimental%20Analysis&f=false

- Super H., Spekreijse H., Lamme V. A. (2001). A neural correlate of working memory in the monkey primary visual cortex. Science 293, 120–124. 10.1126/science.1060496 [ DOI ] [ PubMed ] [ Google Scholar ]

- Tankersley D., Stowe C. J., Huettel S. A. (2007). Altruism is associated with an increased neural response to agency. Nat. Neurosci. 10, 150–151. 10.1038/nn1833 [ DOI ] [ PubMed ] [ Google Scholar ]

- Tennie C., Call J., Tomasello M. (2006). Push or pull: Imitation vs. emulation in great apes and human children. Ethology 112, 1159–1169. 10.1111/j.1439-0310.2006.01269.x [ DOI ] [ Google Scholar ]

- Thorndike E. L. (1970). Animal Intelligence: Experimental Studies. New Brunswick, NJ; London: Transaction Publishers. Available online at: https://books.google.com/books?hl=en&lr=&id=Go8XozILUJYC&oi=fnd&pg=PR7&dq=Animal+Intelligence:+Experimental+Studies.&ots=-o7sIim0sL&sig=T75YCcscgbB1jFE1s6RWH1mK5Xs#v=onepage&q=Animal%20Intelligence%3A%20Experimental

- Toni I., Passingham R. E. (1999). Prefrontal-basal ganglia pathways are involved in the learning of arbitrary visuomotor associations: a PET study. Exp. Brain Res. 127, 19–32. 10.1007/s002210050770 [ DOI ] [ PubMed ] [ Google Scholar ]

- Toni I., Ramnani N., Josephs O., Ashburner J., Passingham R. E. (2001). Learning arbitrary visuomotor associations: temporal dynamic of brain activity. Neuroimage 14, 1048–1057. 10.1006/nimg.2001.0894 [ DOI ] [ PubMed ] [ Google Scholar ]

- Torriero S., Oliveri M., Koch G., Caltagirone C., Petrosini L. (2007). The what and how of observational learning. J. Cogn. Neurosci. 19, 1656–1663. 10.1162/jocn.2007.19.10.1656 [ DOI ] [ PubMed ] [ Google Scholar ]

- Tricomi E. M., Delgado M. R., Fiez J. A. (2004). Modulation of caudate activity by action contingency. Neuron 41, 281–292. 10.1016/S0896-6273(03)00848-1 [ DOI ] [ PubMed ] [ Google Scholar ]

- Ullsperger M., Harsay H. A., Wessel J. R., Ridderinkhof K. R. (2010). Conscious perception of errors and its relation to the anterior insula. Brain Struct. Funct. 214, 629–643. 10.1007/s00429-010-0261-1 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Ullsperger M., Von Cramon D. Y. (2003). Error monitoring using external feedback: specific roles of the habenular complex, the reward system, and the cingulate motor area revealed by functional magnetic resonance imaging. J. Neurosci. 23, 4308–4314. 10.1523/JNEUROSCI.23-10-04308.2003 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- van der Helden J., Boksem M. A., Blom J. H. (2010). The importance of failure: feedback-related negativity predicts motor learning efficiency. Cerebral Cortex 20, 1596–1603. 10.1093/cercor/bhp224 [ DOI ] [ PubMed ] [ Google Scholar ]

- Vidyasagar T. R., Pigarev I. N. (2007). Modulation of neuronal responses in macaque primary visual cortex in a memory task. Eur. J. Neurosci. 25, 2547–2557. 10.1111/j.1460-9568.2007.05483.x [ DOI ] [ PubMed ] [ Google Scholar ]

- White N. M. (2009). Some highlights of research on the effects of caudate nucleus lesions over the past 200 years. Behav. Brain Res. 199, 3–23. 10.1016/j.bbr.2008.12.003 [ DOI ] [ PubMed ] [ Google Scholar ]

- Williams-Gray C. H., Hampshire A., Barker R. A., Owen A. M. (2008). Attentional control in Parkinson's disease is dependent on COMT val158met genotype. Brain 131, 397–408. 10.1093/brain/awm313 [ DOI ] [ PubMed ] [ Google Scholar ]

- Williamson R. A., Meltzoff A. N., Markman E. M. (2008). Prior experiences and perceived efficacy influence 3-year-olds' imitation. Dev. Psychol. 44:275. 10.1037/0012-1649.44.1.275 [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Yin H. H., Knowlton B. J. (2006). The role of the basal ganglia in habit formation. Nat. Rev. Neurosci. 7, 464–476. 10.1038/nrn1919 [ DOI ] [ PubMed ] [ Google Scholar ]

- Yin H. H., Ostlund S. B., Balleine B. W. (2008). Reward-guided learning beyond dopamine in the nucleus accumbens: the integrative functions of cortico-basal ganglia networks. Eur. J. Neurosci. 28, 1437–1448. 10.1111/j.1460-9568.2008.06422.x [ DOI ] [ PMC free article ] [ PubMed ] [ Google Scholar ]

- Yoshida K., Saito N., Iriki A., Isoda M. (2012). Social error monitoring in macaque frontal cortex. Nat. Neurosci. 15, 1307–1312. 10.1038/nn.3180 [ DOI ] [ PubMed ] [ Google Scholar ]
