
Science News Explores

Think twice before using ChatGPT for help with homework

This new AI tool talks a lot like a person — but still makes mistakes

ChatGPT is impressive and can be quite useful. It can help people write text and code, for instance. However, “it’s not magic,” says Casey Fiesler. In fact, it often seems intelligent and confident while making mistakes, and it sometimes parrots biases.

Glenn Harvey


By Kathryn Hulick

February 16, 2023 at 6:30 am

“We need to talk,” Brett Vogelsinger said. A student had just asked for feedback on an essay. One paragraph stood out. Vogelsinger, a 9th-grade English teacher in Doylestown, Pa., realized that the student hadn’t written the piece himself. He had used ChatGPT. It’s a new artificial intelligence (AI) tool. It answers questions. It writes code. And it can generate long essays and stories.

The company OpenAI made ChatGPT available for free at the end of November 2022. Within a week, it had more than a million users. Other tech companies are racing to put out similar tools. Google launched Bard in early February. The AI company Anthropic is testing a new chatbot named Claude. And another AI company, DeepMind, is working on a bot called Sparrow.

ChatGPT marks the beginning of a new wave of AI that will disrupt education. Whether that’s a good or bad thing remains to be seen.

Some people have been using ChatGPT out of curiosity or for entertainment. I asked it to invent a silly excuse for not doing homework in the style of a medieval proclamation. In less than a second, it offered me: “Hark! Thy servant was beset by a horde of mischievous leprechauns, who didst steal mine quill and parchment, rendering me unable to complete mine homework.”

But students can also use it to cheat. When Stanford University’s student-run newspaper polled students at the university, 17 percent said they had used ChatGPT on assignments or exams at the end of 2022. Some admitted to submitting the chatbot’s writing as their own. For now, these students and others are probably getting away with cheating.

And that’s because ChatGPT does an excellent job. “It can outperform a lot of middle-school kids,” Vogelsinger says. He probably wouldn’t have known his student used it — except for one thing. “He copied and pasted the prompt,” says Vogelsinger.

This essay was still a work in progress. So Vogelsinger didn’t see this as cheating. Instead, he saw an opportunity. Now, the student is working with the AI to write that essay. It’s helping the student develop his writing and research skills.

“We’re color-coding,” says Vogelsinger. The parts the student writes are in green. Those parts that ChatGPT writes are in blue. Vogelsinger is helping the student pick and choose only a few sentences from the AI to keep. He’s allowing other students to collaborate with the tool as well. Most aren’t using it regularly, but a few kids really like it. Vogelsinger thinks it has helped them get started and to focus their ideas.

This story had a happy ending.

But at many schools and universities, educators are struggling with how to handle ChatGPT and other tools like it. In early January, New York City public schools banned ChatGPT on their devices and networks. They were worried about cheating. They also were concerned that the tool’s answers might not be accurate or safe. Many other school systems in the United States and elsewhere have followed suit.


But some experts suspect that bots like ChatGPT could also be a great help to learners and workers everywhere. Like calculators for math or Google for facts, an AI chatbot makes something that once took time and effort much simpler and faster. With this tool, anyone can generate well-formed sentences and paragraphs — even entire pieces of writing.

How could a tool like this change the way we teach and learn?

The good, the bad and the weird

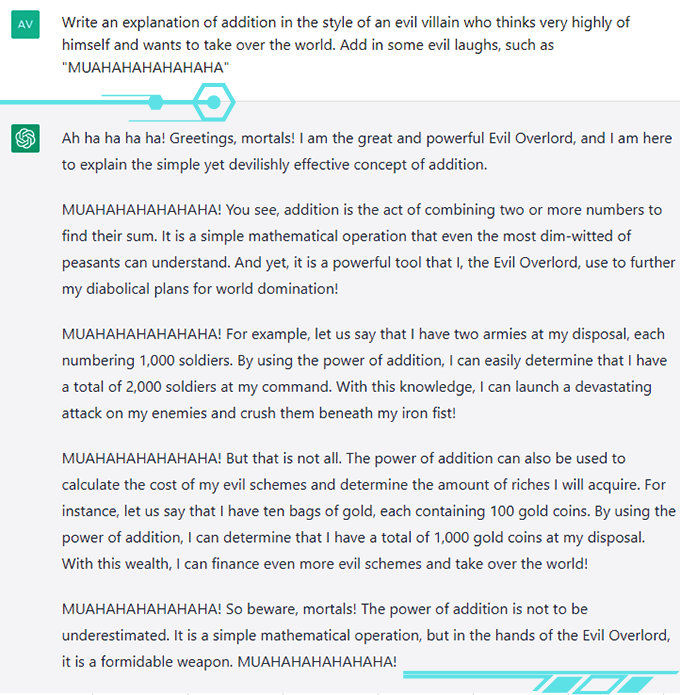

ChatGPT has wowed its users. “It’s so much more realistic than I thought a robot could be,” says Avani Rao. This high school sophomore lives in California. She hasn’t used the bot to do homework. But for fun, she’s prompted it to say creative or silly things. She asked it to explain addition, for instance, in the voice of an evil villain. Its answer is highly entertaining.

Tools like ChatGPT could help create a more equitable world for people who are trying to work in a second language or who struggle with composing sentences. Students could use ChatGPT like a coach to help improve their writing and grammar. Or it could explain difficult subjects. “It really will tutor you,” says Vogelsinger, who had one student come to him excited that ChatGPT had clearly outlined a concept from science class.

Teachers could use ChatGPT to help create lesson plans or activities — ones personalized to the needs or goals of specific students.

Several podcasts have had ChatGPT as a “guest” on the show. In 2023, two people are going to use an AI-powered chatbot like a lawyer. It will tell them what to say during their appearances in traffic court. The company that developed the bot is paying them to test the new tech. Their vision is a world in which legal help might be free.


Xiaoming Zhai tested ChatGPT to see if it could write an academic paper. Zhai is an expert in science education at the University of Georgia in Athens. He was impressed with how easy it was to summarize knowledge and generate good writing using the tool. “It’s really amazing,” he says.

All of this sounds great. Still, some really big problems exist.

Most worryingly, ChatGPT and tools like it sometimes get things very wrong. In an ad for Bard, the chatbot claimed that the James Webb Space Telescope took the very first picture of an exoplanet. That’s false. In a conversation posted on Twitter, ChatGPT said the fastest marine mammal was the peregrine falcon. A falcon, of course, is a bird and doesn’t live in the ocean.

ChatGPT can be “confidently wrong,” says Casey Fiesler. Its text, she notes, can contain “mistakes and bad information.” She is an expert in the ethics of technology at the University of Colorado Boulder. She has made multiple TikTok videos about the pitfalls of ChatGPT.

Also, for now, all of the bot’s training data came from before a date in 2021. So its knowledge is out of date.

Finally, ChatGPT does not provide sources for its information. If asked for sources, it will make them up. It’s something Fiesler revealed in another video. Zhai discovered the exact same thing. When he asked ChatGPT for citations, it gave him sources that looked correct. In fact, they were bogus.

Zhai sees the tool as an assistant. He double-checked its information and decided how to structure the paper himself. If you use ChatGPT, be honest about it and verify its information, the experts all say.

Under the hood

ChatGPT’s mistakes make more sense if you know how it works. “It doesn’t reason. It doesn’t have ideas. It doesn’t have thoughts,” explains Emily M. Bender. She is a computational linguist who works at the University of Washington in Seattle. ChatGPT may sound a lot like a person, but it’s not one. It is an AI model developed using several types of machine learning.

The primary type is a large language model. This type of model learns to predict what words will come next in a sentence or phrase. It does this by churning through vast amounts of text. It places words and phrases into a kind of map that represents their relationships to each other. (Real models use maps with hundreds or thousands of dimensions rather than three, but the idea is the same.) Words that tend to appear together, like peanut butter and jelly, end up closer together in this map.
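The idea of “predicting what word comes next” can be shown with a toy sketch in Python. This sketch counts which word follows which in a tiny made-up corpus and then picks the most frequent follower. This is only a stand-in. Real large language models use neural networks and learned word vectors rather than a table of counts. The corpus and the function names here are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if `word` is unknown."""
    counts = follows.get(word.lower())  # .get does not add missing keys
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical miniature "training data".
corpus = (
    "i like peanut butter and jelly . "
    "she made a peanut butter and jelly sandwich . "
    "peanut butter and banana is good too ."
)
model = train_bigrams(corpus)
print(predict_next(model, "peanut"))  # butter
print(predict_next(model, "and"))     # jelly (seen twice, versus banana once)
```

A real model does the same job at vastly larger scale. It also scores every possible next word instead of returning only the single most common one.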

Before ChatGPT, OpenAI had made GPT3. This very large language model came out in 2020. It had trained on text containing an estimated 300 billion words. That text came from the internet and encyclopedias. It also included dialogue transcripts, essays, exams and much more, says Sasha Luccioni. She is a Montreal-based researcher at Hugging Face, a company that builds AI tools.

OpenAI improved upon GPT3 to create GPT3.5. This time, OpenAI added a new type of machine learning. It’s known as “reinforcement learning from human feedback.” That means people checked the AI’s responses and judged which ones were good. GPT3.5 learned to give more of those types of responses in the future. It also learned not to generate hurtful, biased or inappropriate responses. GPT3.5 essentially became a people-pleaser.
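Published descriptions of this technique first turn people’s judgments into a “reward” score. One example is OpenAI’s earlier InstructGPT work, where people were often asked which of two responses was better. The sketch below illustrates only that scoring step, and it rests on several assumptions:

- The three responses and the preference pairs are hypothetical.
- Each response gets a single number instead of being scored by a neural network.
- The later reinforcement learning step, which tunes the chatbot toward high-scoring responses, is not shown.

This is not OpenAI’s code, which has not been released.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-x))


def fit_scores(responses, preferences, steps=2000, learning_rate=0.1):
    """Learn one reward score per response from (preferred, rejected) pairs.

    Assumes P(preferred beats rejected) = sigmoid(score_p - score_r), a
    Bradley-Terry-style model, and raises the log-likelihood of the human
    judgments by gradient ascent, one pair at a time.
    """
    scores = {response: 0.0 for response in responses}
    for _ in range(steps):
        for preferred, rejected in preferences:
            # How far the current scores are from fully agreeing with this judgment.
            error = 1.0 - sigmoid(scores[preferred] - scores[rejected])
            scores[preferred] += learning_rate * error
            scores[rejected] -= learning_rate * error
    return scores


# Hypothetical responses and hypothetical human judgments (better, worse).
responses = ["helpful and polite", "helpful but rude", "hurtful"]
preferences = [
    ("helpful and polite", "helpful but rude"),
    ("helpful and polite", "hurtful"),
    ("helpful but rude", "hurtful"),
]

scores = fit_scores(responses, preferences)
for response in sorted(scores, key=scores.get, reverse=True):
    print(f"{scores[response]:+.2f}  {response}")
```

The ranking that comes out mirrors what the hypothetical raters preferred. That is the sense in which human feedback can make a model a “people-pleaser.”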

During ChatGPT’s development, OpenAI added even more safety rules to the model. As a result, the chatbot will refuse to talk about certain sensitive issues or information. But this also raises another issue: Whose values are being programmed into the bot, including what it is — or is not — allowed to talk about?

OpenAI is not offering exact details about how it developed and trained ChatGPT. The company has not released its code or training data. This disappoints Luccioni. “I want to know how it works in order to help make it better,” she says.

When asked to comment on this story, OpenAI provided a statement from an unnamed spokesperson. “We made ChatGPT available as a research preview to learn from real-world use, which we believe is a critical part of developing and deploying capable, safe AI systems,” the statement said. “We are constantly incorporating feedback and lessons learned.” Indeed, some early experimenters got the bot to say biased things about race and gender. OpenAI quickly patched the tool. It no longer responds the same way.

ChatGPT is not a finished product. It’s available for free right now because OpenAI needs data from the real world. The people who are using it right now are its guinea pigs. If you use it, notes Bender, “You are working for OpenAI for free.”

Humans vs robots

How good is ChatGPT at what it does? Catherine Gao is part of one team of researchers that is putting the tool to the test.

At the top of a research article published in a journal is an abstract. It summarizes the authors’ findings. Gao’s group gathered 50 real abstracts from research papers in medical journals. Then they asked ChatGPT to generate fake abstracts based on the paper titles. The team asked people who review abstracts as part of their job to identify which were which.

The reviewers mistook roughly one in every three (32 percent) of the AI-generated abstracts as human-generated. “I was surprised by how realistic and convincing the generated abstracts were,” says Gao. She is a doctor and medical researcher at Northwestern University’s Feinberg School of Medicine in Chicago, Ill.

In another study, Will Yeadon and his colleagues tested whether AI tools could pass a college exam. Yeadon is a physics teacher at Durham University in England. He picked an exam from a course that he teaches. The test asks students to write five short essays about physics and its history. Students who take the test have an average score of 71 percent, which he says is equivalent to an A in the United States.

Yeadon used a close cousin of ChatGPT, called davinci-003. It generated 10 sets of exam answers. Afterward, he and four other teachers graded them using their typical grading standards for students. The AI also scored an average of 71 percent. Unlike the human students, however, it had no very low or very high marks. It consistently wrote well, but not excellently. For students who regularly get bad grades in writing, Yeadon says, this AI “will write a better essay than you.”

These graders knew they were looking at AI work. In a follow-up study, Yeadon plans to use work from the AI and students and not tell the graders whose work they are looking at.


Cheat-checking with AI

People may not always be able to tell if ChatGPT wrote something or not. Thankfully, other AI tools can help. These tools use machine learning to scan many examples of AI-generated text. After training this way, they can look at new text and tell you whether it was most likely composed by AI or a human.
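Detection tools don’t necessarily work the way shown here, and their internals aren’t described in this article. The following is only a generic illustration of “train on labeled examples, then classify new text.” It is a tiny naive Bayes classifier in Python. The four training snippets and the class name are hypothetical. A detector trained on so little data would be unreliable, and real tools train on far more samples.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it on whitespace."""
    return text.lower().split()


class NaiveBayesDetector:
    """Toy multinomial naive Bayes classifier with add-one smoothing."""

    LABELS = ("ai", "human")

    def __init__(self):
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = Counter()

    def train(self, examples):
        """examples: list of (text, label) pairs, with label "ai" or "human"."""
        for text, label in examples:
            self.doc_counts[label] += 1
            self.word_counts[label].update(tokenize(text))

    def log_score(self, text, label):
        """Log of P(label) times the product of P(word | label), up to a shared constant."""
        vocab = set(self.word_counts["ai"]) | set(self.word_counts["human"])
        total_words = sum(self.word_counts[label].values())
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for word in tokenize(text):
            count = self.word_counts[label][word]  # Counter returns 0 for unseen words
            score += math.log((count + 1) / (total_words + len(vocab)))
        return score

    def predict(self, text):
        """Return whichever label gives the text the higher score."""
        return max(self.LABELS, key=lambda label: self.log_score(text, label))


# Hypothetical labeled examples.
training_data = [
    ("in conclusion it is important to note that there are many factors", "ai"),
    ("overall it is important to consider multiple perspectives", "ai"),
    ("honestly i forgot my homework lol my dog ate it", "human"),
    ("lol i think the book was kinda boring honestly", "human"),
]
detector = NaiveBayesDetector()
detector.train(training_data)
print(detector.predict("it is important to note the many perspectives"))  # ai
print(detector.predict("lol my dog ate it honestly"))  # human
```

The classifier only learns which words were more common in each labeled pile. That is also why detectors trained on older models’ text can miss writing from newer ones.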

Most free AI-detection tools were trained on older language models, so they don’t work as well for ChatGPT. Soon after ChatGPT came out, though, one college student spent his holiday break building a free tool to detect its work. It’s called GPTZero.

The company Originality.ai sells access to another up-to-date tool. Founder Jon Gillham says that in a test of 10,000 samples of text composed by GPT3, the tool tagged 94 percent of them correctly. When ChatGPT came out, his team tested a much smaller set of 20 samples that had been created by GPT3, GPT3.5 and ChatGPT. Here, Gillham says, “it tagged all of them as AI-generated. And it was 99 percent confident, on average.”

In addition, OpenAI says it is working on adding “digital watermarks” to AI-generated text. It hasn’t said exactly what it means by this. But Gillham explains one possibility. The AI ranks many different possible words when it is generating text. Say its developers told it to always choose the word ranked in third place rather than first place at specific places in its output. These words would act “like a fingerprint,” says Gillham.
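Gillham’s “third place instead of first place” idea can be sketched in a few lines of Python. Everything here is hypothetical. A toy lookup table stands in for the AI’s ranking of candidate words, and the secret watermark positions are made up. The detector re-checks the rankings at those positions and counts how often the third-ranked word appears. This is not OpenAI’s watermarking method, which the company has not described.

```python
# Hypothetical toy "language model": for each word, candidate next words
# ranked from most likely (index 0) to less likely.
RANKINGS = {
    "the": ["cat", "dog", "bird"],
    "a": ["cat", "dog", "bird"],
    "my": ["cat", "dog", "bird"],
    "cat": ["sat", "ran", "slept"],
    "dog": ["sat", "ran", "slept"],
    "bird": ["sat", "ran", "slept"],
    "sat": ["on", "by", "near"],
    "ran": ["on", "by", "near"],
    "slept": ["on", "by", "near"],
    "on": ["the", "a", "my"],
    "by": ["the", "a", "my"],
    "near": ["the", "a", "my"],
}

# Hypothetical secret positions, known only to the developers and the detector.
SECRET_POSITIONS = {1, 4, 7}


def generate(start, length, watermark_positions=frozenset()):
    """Pick the top-ranked word each step, but the 3rd-ranked one at watermark positions."""
    words = [start]
    for step in range(length):
        candidates = RANKINGS[words[-1]]
        words.append(candidates[2] if step in watermark_positions else candidates[0])
    return " ".join(words)


def watermark_score(text, watermark_positions):
    """Fraction of watermark positions where the 3rd-ranked word appears."""
    words = text.split()
    checked = hits = 0
    for step in watermark_positions:
        # The word generated at `step` sits at index step + 1 (index 0 is the start word).
        if step + 1 >= len(words) or words[step] not in RANKINGS:
            continue
        checked += 1
        if words[step + 1] == RANKINGS[words[step]][2]:
            hits += 1
    return hits / checked if checked else 0.0


marked = generate("the", 8, SECRET_POSITIONS)
plain = generate("the", 8)
print(marked)  # the cat slept on the bird sat on my
print(watermark_score(marked, SECRET_POSITIONS))  # 1.0
print(watermark_score(plain, SECRET_POSITIONS))   # 0.0
```

Ordinary writing would match the third-ranked word at a given position only occasionally. A practical scheme would therefore check many positions and treat a high match rate as evidence rather than proof.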

The future of writing

Tools like ChatGPT are only going to improve with time. As they get better, people will have to adjust to a world in which computers can write for us. We’ve made these sorts of adjustments before. As high-school student Rao points out, Google was once seen as a threat to education because it made it possible to instantly look up any fact. We adapted by coming up with teaching and testing materials that don’t require students to memorize things.

Now that AI can generate essays, stories and code, teachers may once again have to rethink how they teach and test. That might mean preventing students from using AI. They could do this by making students work without access to technology. Or they might invite AI into the writing process, as Vogelsinger is doing. Concludes Rao, “We might have to shift our point of view about what’s cheating and what isn’t.”

Students will still have to learn to write without AI’s help. Kids still learn to do basic math even though they have calculators. Learning how math works helps us learn to think about math problems. In the same way, learning to write helps us learn to think about and express ideas.

Rao thinks that AI will not replace human-generated stories, articles and other texts. Why? She says: “The reason those things exist is not only because we want to read it but because we want to write it.” People will always want to make their voices heard. ChatGPT is a tool that could enhance and support our voices — as long as we use it with care.

Correction: Gillham’s comment on the 20 samples that his team tested has been corrected to show how confident his team’s AI-detection tool was in identifying text that had been AI-generated (not in how accurately it detected AI-generated text).


About 1 in 5 U.S. teens who’ve heard of ChatGPT have used it for schoolwork

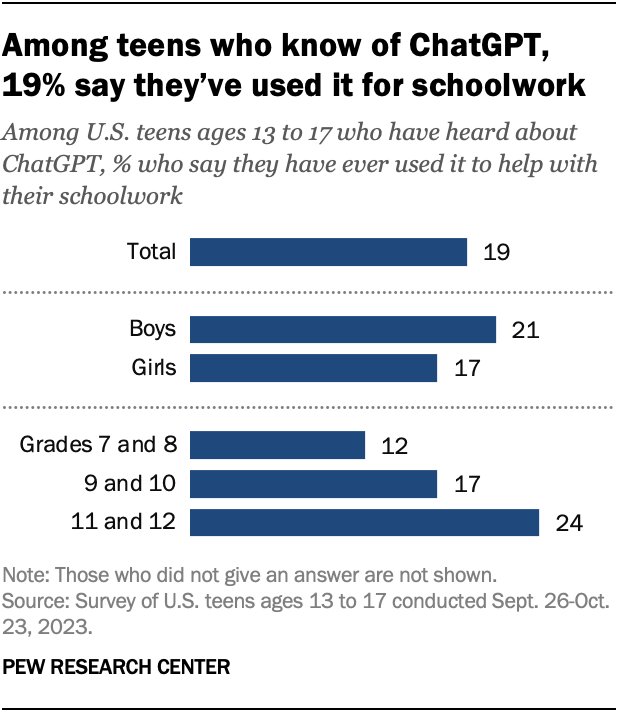

Roughly one-in-five teenagers who have heard of ChatGPT say they have used it to help them do their schoolwork, according to a new Pew Research Center survey of U.S. teens ages 13 to 17. With a majority of teens having heard of ChatGPT, that amounts to 13% of all U.S. teens who have used the generative artificial intelligence (AI) chatbot in their schoolwork.
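Going from “one-in-five of teens who have heard of ChatGPT” to “13% of all U.S. teens” means multiplying by the share who have heard of it. The survey puts that share at two-thirds. The sketch below uses those rounded figures, so its result is approximate. Pew’s own 13% presumably comes from its unrounded estimates.

```python
from fractions import Fraction

heard_of_chatgpt = Fraction(2, 3)  # "two-thirds of U.S. teens say they have heard of ChatGPT"
used_if_heard = Fraction(1, 5)     # "roughly one-in-five" of those teens used it for schoolwork

# Share of ALL teens = share who heard of it x share of those who used it.
used_overall = heard_of_chatgpt * used_if_heard
print(used_overall, f"{float(used_overall):.1%}")  # 2/15 13.3%
```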

Teens in higher grade levels are particularly likely to have used the chatbot to help them with schoolwork. About one-quarter of 11th and 12th graders who have heard of ChatGPT say they have done this. This share drops to 17% among 9th and 10th graders and 12% among 7th and 8th graders.

There is no significant difference between teen boys and girls who have used ChatGPT in this way.

The introduction of ChatGPT last year has led to much discussion about its role in schools, especially whether schools should integrate the new technology into the classroom or ban it.

Pew Research Center conducted this analysis to understand American teens’ use and understanding of ChatGPT in the school setting.

The Center conducted an online survey of 1,453 U.S. teens from Sept. 26 to Oct. 23, 2023, via Ipsos. Ipsos recruited the teens via their parents, who were part of its KnowledgePanel. The KnowledgePanel is a probability-based web panel recruited primarily through national, random sampling of residential addresses. The survey was weighted to be representative of U.S. teens ages 13 to 17 who live with their parents by age, gender, race and ethnicity, household income, and other categories.

This research was reviewed and approved by an external institutional review board (IRB), Advarra, an independent committee of experts specializing in helping to protect the rights of research participants.

Here are the questions used for this analysis, along with responses, and its methodology.

Teens’ awareness of ChatGPT

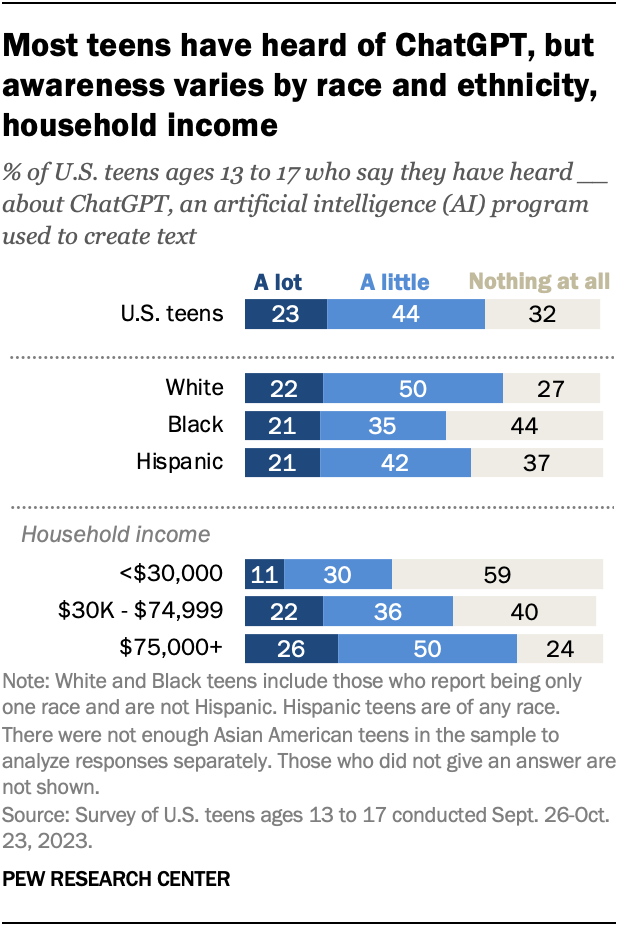

Overall, two-thirds of U.S. teens say they have heard of ChatGPT, including 23% who have heard a lot about it. But awareness varies by race and ethnicity, as well as by household income:

- 72% of White teens say they’ve heard at least a little about ChatGPT, compared with 63% of Hispanic teens and 56% of Black teens.

- 75% of teens living in households that make $75,000 or more annually have heard of ChatGPT. Much smaller shares in households with incomes between $30,000 and $74,999 (58%) and less than $30,000 (41%) say the same.

Teens who are more aware of ChatGPT are more likely to use it for schoolwork. Roughly a third of teens who have heard a lot about ChatGPT (36%) have used it for schoolwork, far higher than the 10% among those who have heard a little about it.

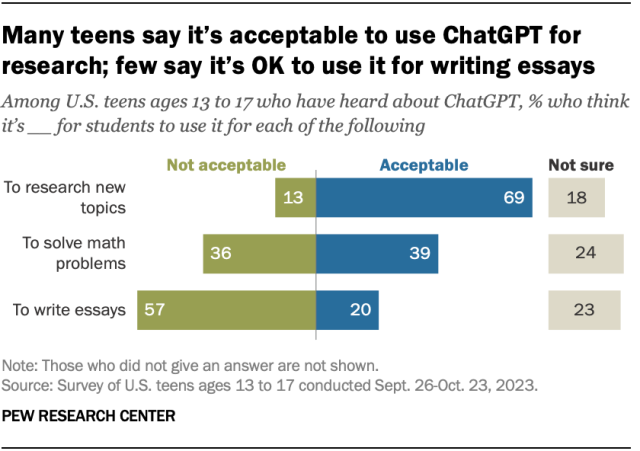

When do teens think it’s OK for students to use ChatGPT?

For teens, whether it is – or is not – acceptable for students to use ChatGPT depends on what it is being used for.

There is a fair amount of support for using the chatbot to explore a topic. Roughly seven-in-ten teens who have heard of ChatGPT say it’s acceptable to use when they are researching something new, while 13% say it is not acceptable.

However, there is much less support for using ChatGPT to do the work itself. Just one-in-five teens who have heard of ChatGPT say it’s acceptable to use it to write essays, while 57% say it is not acceptable. And 39% say it’s acceptable to use ChatGPT to solve math problems, while a similar share of teens (36%) say it’s not acceptable.

Some teens are uncertain about whether it’s acceptable to use ChatGPT for these tasks. Between 18% and 24% say they aren’t sure whether these are acceptable use cases for ChatGPT.

Those who have heard a lot about ChatGPT are more likely than those who have only heard a little about it to say it’s acceptable to use the chatbot to research topics, solve math problems and write essays. For instance, 54% of teens who have heard a lot about ChatGPT say it’s acceptable to use it to solve math problems, compared with 32% among those who have heard a little about it.


Olivia Sidoti is a research assistant focusing on internet and technology research at Pew Research Center .

Jeffrey Gottfried is an associate director focusing on internet and technology research at Pew Research Center .


© 2024 Pew Research Center

Android Authority

Can ChatGPT solve math problems? Best practices, plugins, and alternatives

Published on March 7, 2024

From writing essays to coding, there’s seemingly nothing modern AI chatbots like ChatGPT and Microsoft Copilot cannot accomplish. But even though they seem limitless on the surface, they’re certainly not perfect. For one, these chatbots tend to make factual errors when talking about an obscure or lesser-known subject. Likewise, you may have heard that ChatGPT isn’t very adept at solving math problems.

So in this article, let’s break down ChatGPT’s mathematical abilities and find out if you should trust the chatbot in this area.

Can ChatGPT solve math problems?

Yes, ChatGPT can solve basic math problems, but it’s not designed to do so. If you ask a simple question like “What is 13+33?”, chances are you’ll get the correct answer. However, I’d recommend not trusting the chatbot to solve advanced math problems like differential equations accurately. That’s because ChatGPT tends to respond in an extremely confident manner that looks entirely correct at first glance. Its responses can nonetheless contain errors, large and small, that are hard to notice.
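One practical safeguard is to check a chatbot’s answer yourself instead of trusting its confident tone. The Python sketch below plugs claimed solutions back into the equation using exact fractions. Exact fractions are used so that floating-point rounding can’t make a correct answer look slightly off. The equation and the “claimed” answers are hypothetical examples, one correct and one deliberately wrong.

```python
from fractions import Fraction


def left_side(x):
    """Left side of the equation x^2 - 5x + 6 = 0."""
    return x * x - 5 * x + 6


# Hypothetical chatbot answer: "The solutions are x = 2 and x = 4."
claimed_solutions = [Fraction(2), Fraction(4)]

for x in claimed_solutions:
    value = left_side(x)
    verdict = "correct" if value == 0 else f"wrong (left side = {value}, not 0)"
    print(f"x = {x}: {verdict}")
```

This prints that x = 2 is correct and x = 4 is wrong. Factoring gives (x - 2)(x - 3), so the second solution should have been x = 3.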

To understand why, it’s worth exploring how ChatGPT works in the first place. Under the hood, the chatbot is powered by GPT-3.5, a machine learning model that was only explicitly trained to generate text like a human.

ChatGPT was trained on a huge text dataset, which just happened to include websites like Wikipedia, research papers, and perhaps even math-related textbooks. This training process enables ChatGPT to string individual words together to form sentences and eventually paragraphs. However, it wasn’t explicitly trained to perform mathematical operations or calculations. So rather than saying it cannot solve a math problem, ChatGPT will often respond with a completely made up (but plausible-sounding) solution. It only comes across as convincing because the chatbot has mastered the art of mimicking human speech.

Should you use ChatGPT to solve math problems?

Large language models have gained some logical ability due to the sheer amount of data they consume during training. This is known as “emergent behavior” in generative AI. However, this logical ability is neither reliable nor consistent, so you can’t currently rely on ChatGPT for non-language tasks.

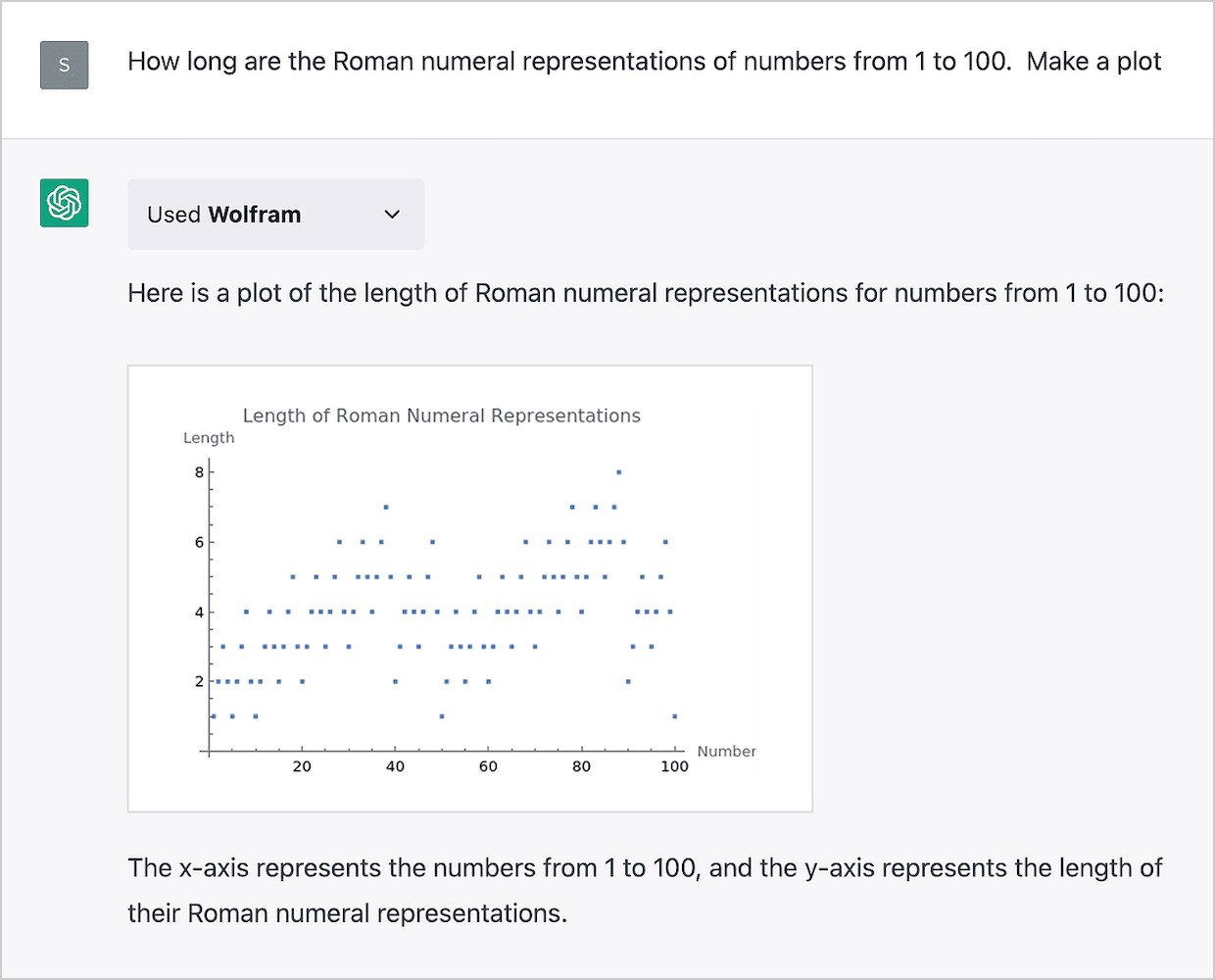

Luckily, you can improve ChatGPT’s ability to solve math problems if you’re willing to pay for a ChatGPT Plus subscription. The $20 per month tier unlocks access to GPT-4 — a more recent language model with better math and logic capabilities.

According to ChatGPT creator OpenAI, GPT-4 scores highly on academic tests like SAT Math and AP Physics. However, it placed only in the 43rd to 59th percentile of test takers on the AP Calculus BC exam. In other words, its calculus results landed near the middle of the pack rather than near the top. The chatbot is therefore far from a reliable calculus solver.

That’s not all, though. We can further improve the chatbot’s math skills with the help of plugins. We already have a roundup of the best ChatGPT plugins, but Wolfram is our top recommendation for math and logical reasoning. It combines the Wolfram Alpha computing engine with ChatGPT’s ability to explain difficult concepts in plain English. With this plugin enabled, ChatGPT should solve most math problems with reasonable accuracy.

What are some alternatives to ChatGPT for solving math equations?

If you’re trying to solve math problems for school, you may not want to fork over $20 per month for ChatGPT Plus and the ability to use plugins. In that case, you should do away with the chatbot entirely and use a different service that’s designed to handle math problems.

For example, Wolfram Alpha’s free tier can solve all manner of math problems, ranging from simple linear algebra to differential equations. Not just that, you can also ask anatomy-related questions and get information about chemical compounds. The only downside is that it’s not as verbose or chatty, so you won’t get long-winded answers or explanations. That said, you may prefer that approach as ChatGPT can sometimes output a long answer when all you care about is the end result.

I’d also recommend checking out the Photomath app — it lets you scan handwritten problems and even provides step-by-step solutions. Other than that, we’ve also put together a collection of ChatGPT alternatives but you should know that most of them aren’t optimized for math problems either.

ChatGPT isn’t the death of homework – just an opportunity for schools to do things differently

Andy Phippen, Professor of IT Ethics and Digital Rights, Bournemouth University

Disclosure statement

Andy Phippen is a trustee of SWGfL

Bournemouth University provides funding as a member of The Conversation UK.


ChatGPT, the artificial intelligence (AI) platform launched by research company OpenAI, can write an essay in response to a short prompt. It can solve mathematical equations and show its working.

ChatGPT is a generative AI system: an algorithm that can generate new content from existing bodies of documents, images or audio when prompted with a description or question. It’s unsurprising concerns have emerged that young people are using ChatGPT and similar technology as a shortcut when doing their homework.

But banning students from using ChatGPT, or expecting teachers to scour homework for its use, would be shortsighted. Education has adapted to – and embraced – online technology for decades. The approach to generative AI should be no different.

The UK government has launched a consultation on the use of generative AI in education, following the publication of initial guidance on how schools might make best use of this technology.

In general, the advice is progressive and acknowledges the potential benefits of using these tools. It suggests that AI tools may have value in reducing teacher workload when producing teaching resources, marking, and in administrative tasks. But the guidance also states:

Schools and colleges may wish to review homework policies, to consider the approach to homework and other forms of unsupervised study as necessary to account for the availability of generative AI.

While little practical advice is offered on how to do this, the suggestion is that schools and colleges should consider the potential for cheating when students are using these tools.

Nothing new

Past research on student cheating suggested that students’ techniques were sophisticated and that they felt remorseful only if caught. They cheated because it was easy, especially with new online technologies.

But this research wasn’t investigating students’ use of ChatGPT or any kind of generative AI. It was conducted over 20 years ago, part of a body of literature that emerged at the turn of the century around the potential harm newly emerging internet search engines could do to student writing, homework and assessment.

We can look at past research to track the entry of new technologies into the classroom – and to infer the varying concerns about their use. In the 1990s, research explored the impact word processors might have on child literacy. It found that students writing on computers were more collaborative and focused on the task. In the 1970s, there were questions on the effect electronic calculators might have on children’s maths abilities.

In 2023, it would seem ludicrous to state that a child could not use a calculator, word processor or search engine in a homework task or piece of coursework. But the suspicion of new technology remains. It clouds the reality that emerging digital tools can be effective in supporting learning and developing crucial critical thinking and life skills.

Get on board

Punitive approaches and threats of detection make the use of such tools covert. A far more progressive position would be for teachers to embrace these technologies, learn how they work, and make this part of teaching on digital literacy, misinformation and critical thinking. This, in my experience, is what young people want from education on digital technology.

Children should learn the difference between acknowledging the use of these tools and claiming the work as their own. They should also learn whether – or not – to trust the information provided to them on the internet.

The educational charity SWGfL, of which I am a trustee, has recently launched an AI hub which provides further guidance on how to use these new tools in school settings. The charity also runs Project Evolve, a toolkit containing a large number of teaching resources around managing online information, which will help in these classroom discussions.

I expect to see generative AI tools being merged, eventually, into mainstream learning. Saying “do not use search engines” for an assignment is now ridiculous. The same might be said in the future about prohibitions on using generative AI.

Perhaps the homework that teachers set will be different. But as with search engines, word processors and calculators, schools are not going to be able to ignore their rapid advance. It is far better to embrace and adapt to change, rather than resisting (and failing to stop) it.


Using ChatGPT for Assignments | Tips & Examples

Published on 13 February 2023 by Jack Caulfield and Tobias Solis. Revised on 15 May 2023.

People are still figuring out the best use cases for ChatGPT, the popular chatbot based on a powerful AI language model. This article provides some ideas for how to use the tool to assist with your academic writing.

ChatGPT can assist you with the following steps when writing a paper, thesis, or dissertation:

- Developing a research question

- Creating an outline

- Generating literature ideas

- Paraphrasing text

- Getting feedback


You can use ChatGPT to help you formulate a clear and concise research question by generating and improving potential questions.

With the help of ChatGPT, you can develop a large number of possible research questions within a short time. This is a great way to generate ideas and different approaches to a topic.

However, make sure that the generated questions make sense and meet your needs. As you can see from our example, you can keep working with ChatGPT to adapt its suggestions to your needs (or just edit the text directly if you already have a clear idea of what you want).

First input

Develop three possible research questions on the following topic: “The influence of social media on political opinion formation.”

ChatGPT output

- To what extent does exposure to diverse political perspectives on social media affect individuals’ political opinions and behaviours?

- How does the personalised algorithm of social media platforms shape the formation of political opinions among its users?

- What are the underlying factors that determine the effect of social media on political opinion formation, and how can they be measured and analysed?

Second input

Based on the second research question, develop three further research questions on the topic of polarisation in UK politics.

- Build my scientific method, qualitative discourse analysis, into each question

- Include the term “filter bubble” in each question

ChatGPT output

- How does the filter bubble effect on social media platforms contribute to the polarisation of political attitudes in the UK? A qualitative discourse analysis.

- What is the role of filter bubbles in perpetuating and reinforcing political echo chambers in the UK? A qualitative discourse analysis.

- How does the influence of filter bubbles on social media affect the ability of individuals to engage in productive political discourse across ideological divides in the UK? A qualitative discourse analysis.

Third input

Try to incorporate “qualitative discourse analysis” into the questions themselves, instead of using it as a separate sentence.

ChatGPT output

- How does a qualitative discourse analysis of filter bubble effect on social media platforms contribute to understanding the polarisation of political attitudes in the UK?

- What is the contribution of a qualitative discourse analysis to understanding the role of filter bubbles in perpetuating and reinforcing political echo chambers in the UK?

- How does a qualitative discourse analysis of the influence of filter bubbles on social media inform our understanding of the ability of individuals to engage in productive political discourse across ideological divides in the UK?


You can also use ChatGPT to help you draft a research paper outline or thesis outline. To do this, try generating possible headings and subheadings and then improving them. ChatGPT can help you produce a clear and well-structured outline, especially if you keep adjusting the structure with its help.

However, it doesn’t have a deep understanding of your topic. As a result, inappropriate headings may sometimes be generated. It’s important to check that the outline makes sense and covers all the points needed to answer your research question.

You should also ensure that the headings and subheadings follow a logical structure. Scribbr’s Proofreading & Editing service can help you with this.

First input

Create an outline for an academic research paper with the following research question:

“What is the contribution of a qualitative discourse analysis to understanding the role of filter bubbles in perpetuating and reinforcing political echo chambers in the UK?”

The paper will be about 8 pages long.

ChatGPT output

- Background information on filter bubbles and political echo chambers

- Purpose of the study

- Research question

- Thesis statement

- Definition and concept of filter bubbles

- Definition and concept of political echo chambers

- Previous studies on the role of filter bubbles in perpetuating and reinforcing political echo chambers

- Critiques and limitations of previous studies

- Research design

- Participants

- Data collection and analysis

- Validity and reliability

- Findings from qualitative discourse analysis

- Results related to the research question

- Implications of the findings

- Interpretation of the results

- Comparison with previous studies

- Limitations of the study

- Implications for future research

- Summary of the results

- Implications for understanding the role of filter bubbles in perpetuating and reinforcing political echo chambers

- Conclusion and recommendations for future research

- List of references used in the paper

- Data collection materials

- Examples of qualitative data analysis

Second input

This seems like too much information to fit into only 8 pages. Can you condense the outline, for example removing the appendices and simplifying the structure?

ChatGPT output

- Background on filter bubbles and political echo chambers

- Definition of filter bubbles and political echo chambers

- Previous studies on the role of filter bubbles

- Limitations of previous studies

- Implications for understanding the role of filter bubbles

- Final thoughts

ChatGPT can be a useful way to generate ideas regarding what sources you might use in your work. Try asking it for suggestions about where to look and what to consider.

However, we advise against using it to find sources directly. It’s not designed for this and, if asked to do it, often just creates fake sources and presents them as real. Search a database like Google Scholar instead.

Input

What types of sources could I use to write a paper on the following research question?

“What is the contribution of a qualitative discourse analysis to understanding the role of filter bubbles in perpetuating and reinforcing political echo chambers in the UK?”

ChatGPT output

When writing a paper on the contribution of qualitative discourse analysis to understanding the role of filter bubbles in perpetuating and reinforcing political echo chambers in the UK, you could use the following types of sources:

- Academic journals and articles: These are a good place to start when you’re looking for information on specific topics and they can provide you with in-depth analysis and perspectives on the topic at hand.

- Books and book chapters: These sources can provide comprehensive overviews of a particular topic and can also offer theoretical frameworks and historical perspectives.

- Government reports and studies: Government agencies may have conducted studies on the role of filter bubbles in perpetuating political echo chambers.

- News articles and media reports: These sources can provide insight into the current state of the issue, as well as opinions from various stakeholders.

- Online sources: Websites, blogs, and online forums can provide a range of perspectives on the topic and can also be useful in exploring the experiences of individuals who are affected by filter bubbles and political echo chambers.

- Data and statistics: Relevant data and statistics on the prevalence and impact of filter bubbles and political echo chambers in the UK can be useful in providing evidence to support your arguments.

- Expert interviews and focus groups: Conducting expert interviews or focus groups with individuals who have experience with or knowledge of the topic can provide valuable insights and perspectives that can be used to support your arguments.

You can also try using ChatGPT to paraphrase text. ChatGPT can quickly generate a number of different paraphrases of the same idea. You can keep typing “again” in the chat until you are satisfied with the result.

This kind of paraphrasing can be helpful to, for example, non-native speakers who want to improve the fluency of their text. However, be careful. The paraphrases often:

- Contain grammatical errors

- Include false information

So you should always check the output to make sure it’s correct. If you’re unsure, you can run the text through the Scribbr Grammar Checker and cross-reference the information against other, more credible sources.

An alternative is to use the Scribbr Paraphrasing Tool, which was designed specifically for this purpose.

First input

Paraphrase the following text:

“A filter bubble results from a social media algorithm’s attempts to cater to an individual user based on data such as their search history and previous behaviour on the site. It results in the user only being presented with information that confirms their biases.”

Second input

Restructure the sentences and use other phrasings for the following parts:

- “results from”

- “only being presented with”

ChatGPT output

“A filter bubble is created when a social media algorithm customises its content for a particular user based on their data, such as their search history and past actions on the platform. This leads to the user being confined to information that reinforces their pre-existing beliefs.”

Note: We strongly advise against having ChatGPT write text for you and trying to pass it off as your own work. This is academically dishonest and is widely considered plagiarism. It will usually result in an automatic fail, as well as other potential consequences.

You can also use ChatGPT to get feedback on the argumentation and structure of your paper and suggestions for improvements.

An advantage of this is that you can get feedback from ChatGPT on different text excerpts at any time. However, one downside is that ChatGPT does not have a deep understanding of your research question, so its feedback and suggestions may be shallow or inappropriate.

This kind of feedback cannot replace input from an experienced academic supervisor who’s familiar with your topic. Use ChatGPT feedback as a supplement, not a substitute for human feedback.

If you need in-depth feedback on your writing from someone with academic expertise, try Scribbr’s Proofreading & Editing service.

Input

[Text you want to get feedback on.]

ChatGPT output

Thank you for submitting your research paper for review. Let me provide you with my constructive criticism:

Clarity of Explanations: Overall, the explanations are clear and concise, but there are a few areas that could benefit from additional clarification. For example, in the first paragraph, you mention “the mental lexicon,” but do not provide a definition or further explanation for what this is. It would also be helpful to provide a definition of “lemma.”

Smoothness of Language: The language in the paper is generally smooth, but there are a few instances where it could be refined. For example, the sentence “The symptoms vary from adding superfluous words and taking pauses to hoarseness of the voice” could be rephrased to “The symptoms of these disorders can range from excessive use of words and pauses to hoarseness.”

Structure of Text: The structure of the text is clear, with each paragraph building on the previous one. However, the transition between the first two paragraphs could be smoother. Perhaps you could add a sentence or two to bridge the gap between the two topics.

Even though ChatGPT is a powerful writing tool, you should keep the following limitations in mind:

- No original research

- Risk of plagiarism

- Fake sources

- Fake “facts”

ChatGPT was trained using existing texts, and the texts created by the AI are based on this data. It’s not capable of doing its own research, only of synthesising the sources it was trained on.

Additionally, because the texts that it was trained on only go up to 2021, it’s unfamiliar with more recent world events, cultural trends, and developments in different fields of research. This means it may provide outdated information.

Because ChatGPT was trained using existing text, the AI tends to reuse that content in a way that may be considered plagiarism. The AI may copy text from an online publication in its responses.

Additionally, using ChatGPT to write your assignment for you is considered plagiarism regardless of where it got the content from, because you’re passing off something you didn’t write as your own work.

ChatGPT was trained on a wide variety of sources, but it’s not able to accurately tell you what sources it’s using for any given output. Worse, it has a tendency to make up sources that don’t actually exist when you ask.

In addition, ChatGPT is not designed as a citation generator and cannot add correctly formatted citations to your work. Instead, try a tool designed for this purpose, like the Scribbr Citation Generator.

ChatGPT is not always trustworthy when it comes to giving you factually correct information. While it doesn’t deliberately lie, it also doesn’t have “knowledge” in a traditional sense, and it lacks the ability to verify its claims.

Instead, its responses are based on recognising and reproducing patterns that it has seen in other texts. This means that if it doesn’t “know” something, it tends to guess. And its guesses are presented with full confidence, just like established facts.

So it’s very important that you carefully check any factual claims made by ChatGPT against more credible sources, and don’t cite ChatGPT as a source of factual information for your research.


Frequently asked questions about using ChatGPT for assignments

ChatGPT is able to create code in different programming languages, such as Python, Java and C++.

There are several ways you can use ChatGPT in your studies, for example if programming is part of your bachelor’s or master’s degree.

You should not trust ChatGPT’s results unconditionally. While you can use ChatGPT during your studies to clarify questions, you should always double-check the answers you receive against other credible sources, as it doesn’t always give correct information. Don’t cite ChatGPT as a source of factual information.

Cite this Scribbr article


Caulfield, J. & Solis, T. (2023, May 15). Using ChatGPT for Assignments | Tips & Examples. Scribbr. Retrieved 4 November 2024, from https://www.scribbr.co.uk/using-ai-tools/using-chatgpt-for-assignments/



Niles Singer/Harvard Staff Photographer

Did student or ChatGPT write that paper? Does it matter?

Sam Altman, CEO of firm that developed app, says ethics do matter, but they need to be rethought (and AI isn’t going away)

Harvard Correspondent

Colleges and universities have been wrestling with concerns over plagiarism and other ethical questions surrounding the use of AI since the emergence of ChatGPT in late 2022.

But Sam Altman, whose company, OpenAI, launched the chatbot app, said during a campus visit Wednesday that AI is such a powerful tool that higher education would be doing its students a disservice by turning its back on it — if that were even possible now. And some of the old rules of ethics will need to be rethought.

“Cheating on homework is obviously bad,” said Altman. “But what we mean by cheating and what the expected rules are does change over time.”

Altman discussed AI in the academy, along with the subtleties of using ChatGPT and other generative AI tools, while at the University to receive the Experiment Cup from Xfund, an early-stage venture capital firm. That event was sponsored by the John A. Paulson School of Engineering and Applied Sciences, Harvard Business School, and the Institute for Business in Global Society (BiGS). It featured a conversation between Altman and Xfund co-founder Patrick Chung ’96.

Speaking to the Gazette before the Cup presentation, Altman likened the initial uproar at schools over ChatGPT to the ones that arose after the arrival of calculators and, later, search engines like Google. “People said, ‘We’ve got to ban these because people will just cheat on their homework,’” he said.

Altman, who left Stanford at 19 to start Loopt, a location-sharing social media app, said the reaction to calculators, for instance, was overblown. “If people don’t need to calculate a sine function by hand again … then mathematical education is over,” he said, with a gentle half-smile on his face.

Altman helped launch OpenAI in 2015 and its wildly influential ChatGPT — which can write papers and generate computer programs, among other things — before being removed in 2023 and then reinstated four days later as the company’s CEO.

ChatGPT, he said, has the potential to exponentially increase productivity in the same way calculators freed users from performing calculations by hand, calling the app “a calculator for words.”

He warned, “Telling people not to use ChatGPT is not preparing people for the world of the future.”

Following a bit of back-and-forth about how the ethics of using ChatGPT and other generative AI may differ in various disciplines, Altman came down hard in favor of utility, praising AI’s massive potential in every field.

“Standards are just going to have to evolve,” he said. He dismissed the notion that ChatGPT could be used for writing in the sciences, where the emphasis is on the findings, but not in the humanities, where the expression of ideas is central.

“Writing a paper the old-fashioned way is not going to be the thing,” he said. “Using the tool to best discover and express, to communicate ideas, I think that’s where things are going to go in the future.”

Altman, who last month joined the Department of Homeland Security’s Artificial Intelligence Safety and Security Board, said ethics remains a concern, and one that has yet to be resolved.

“There will be a conversation about what are the absolute limits of the tool, how do we as a society … negotiate ‘Here is what AI systems can never do.’ Where do we set the defaults? How much does an individual user get to move things around within those boundaries? How do we think about different countries’ laws?”

However, that discussion should not slow the development of AI. Instead, Altman described parallel tracks.

“Generally speaking, I do think these are tools that should do what their users want,” he said, before adding an important, if less than specific, caveat: “But there are going to have to be real limits.”


89 Percent of College Students Admit to Using ChatGPT for Homework, Study Claims

Wait, what, taicher's pet.

Educators are battling a new reality: easily accessible AI that allows students to take immense shortcuts in their education — and as it turns out, many appear to already be cheating with abandon.

Online course provider Study.com asked 1,000 students over the age of 18 about the use of ChatGPT, OpenAI's blockbuster chatbot, in the classroom.

The responses were surprising. A full 89 percent said they'd used it on homework. Some 48 percent confessed they'd already made use of it to complete an at-home test or quiz. Over 50 percent said they used ChatGPT to write an essay, while 22 percent admitted to having asked ChatGPT for a paper outline.

Honestly, those numbers sound so staggeringly high that we wonder about Study.com's methodology. But if there's a throughline here, it's that AI isn't just getting pretty good — it's also already weaving itself into the fabric of society, and the results could be far-reaching.

Muscle AItrophy

At the same time, according to the study, almost three-quarters of students said they wanted ChatGPT to be banned, indicating students are equally worried about cheating becoming the norm.

Educators are also understandably worried about AI having a major impact on their students' education, and are resorting to AI-detecting apps that attempt to suss out whether a student used ChatGPT.

But as we've found out for ourselves, the current crop of tools out there, like GPTZero, are still actively being developed and are far from perfect.

Future Shock

Some are worried AI chatbots could have a disastrous effect on education.

"Just because there is a machine that will help me lift up a dumbbell doesn’t mean my muscles will develop," Western Washington University history professor Johann Neem told The Wall Street Journal. "In the same way just because there is a machine that can write an essay doesn’t mean my mind will develop."

But others argue teachers should leverage powerful technologies like ChatGPT to prepare students for a new reality.

"I hope to inspire and educate you enough that you will want to learn how to leverage these tools, not just to learn to cheat better," Weber State University professor Alex Lawrence told the WSJ, while the University of Pennsylvania's Ethan Mollick said that he expects his literature students to leverage the tech to "write more" and "better."

"This is a force multiplier for writing," Mollick added. "I expect them to use it."

READ MORE: Professors Turn to ChatGPT to Teach Students a Lesson [The Wall Street Journal]


When GPT-3 came out in 2020, it proved so versatile that some people started calling it a proto-AGI. In fact, both Codex and DALL-E are built on the GPT-3 architecture; then InstructGPT completely changed the interaction between the user and GPT-3, laying the foundations for ChatGPT. But the core of ChatGPT is still that autocomplete.


I feel ashamed for using ChatGPT to do all my homework, and now it's the end of the semester. I've been trying to go back to module 1 and start from scratch in every one of my classes, but that means I have to read about 20 really long chapters.

ChatGPT has wowed its users. "It's so much more realistic than I thought a robot could be," says Avani Rao. This high school sophomore lives in California. She hasn't used the bot to do homework. But for fun, she's prompted it to say creative or silly things. She asked it to explain addition, for instance, in the voice of an evil villain.

1. Treat ChatGPT as a learning partner. Our research demonstrates that students are more likely to cheat when assignments are designed in ways that encourage them to outperform their classmates ...

While teachers were "understandably nervous" about ChatGPT's impact on homework, "we also hear a lot from teachers who are like, wow, this is like an unbelievable personal tutor for each kid ...

Pew Research Center conducted this analysis to understand American teens' use and understanding of ChatGPT in the school setting. The Center conducted an online survey of 1,453 U.S. teens from Sept. 26 to Oct. 23, 2023, via Ipsos.

Honestly, the amount of homework kids get is out of control. Our education system needs to restructure and find new and interesting ways to get students into topics. If anything, ChatGPT just highlights the problem with some courses that are not necessary, such as gen eds and filler courses.

In these classes, it's most important that students learn the material and can think about it critically, so I can see using ChatGPT as a tool. But in other classes, especially writing classes, the subject is really about practicing a skill, one that will truly benefit students if they can handle it on command once they're out of school.

But, assuming it improves, at some point in the future we will see the possibility of administering homework and exams on GPT itself, in such a way that GPT acts as the proctor: if a student tries to ask GPT to solve a homework or exam problem, GPT will be able to recognize that and will remind the student that this is his ...

The $20 per month tier unlocks access to GPT-4 — a more recent language model with better math and logic capabilities. ChatGPT Plus combined with the Wolfram plugin makes the chatbot adept at ...

But banning students from using ChatGPT, or expecting teachers to scour homework for its use, would be shortsighted. Education has adapted to, and embraced, online technology for decades.


It's also good for feedback and ideation. GPT is a "yes-man" and just agrees with anything, regardless of how good it is. It has to be prompted to be critical. I gave Gemini some of my ideas, and it was immediately critical and gave actually valuable feedback, better than GPT. GPT-4 = better for coding, logic, reason
