- Open access

- Published: 11 November 2022

Error, reproducibility and uncertainty in experiments for electrochemical energy technologies

- Graham Smith ORCID: orcid.org/0000-0003-0713-2893 1 &

- Edmund J. F. Dickinson ORCID: orcid.org/0000-0003-2137-3327 1

Nature Communications volume 13, Article number: 6832 (2022)

- Electrocatalysis

- Electrochemistry

- Materials for energy and catalysis

The authors provide a metrology-led perspective on best practice for the electrochemical characterisation of materials for electrochemical energy technologies. Such electrochemical experiments are highly sensitive, and their results are, in practice, often of uncertain quality and challenging to reproduce quantitatively.

A critical aspect of research on electrochemical energy devices, such as batteries, fuel cells and electrolysers, is the evaluation of new materials, components, or processes in electrochemical cells, either ex situ, in situ or in operation. For such experiments, rigorous experimental control and standardised methods are required to achieve reproducibility, even on standard or idealised systems such as single crystal platinum 1 . Data reported for novel materials often exhibit high (or unstated) uncertainty and often prove challenging to reproduce quantitatively. This situation is exacerbated by a lack of formally standardised methods, and practitioners with less formal training in electrochemistry being unaware of best practices. This limits trust in published metrics, with discussions on novel electrochemical systems frequently focusing on a single series of experiments performed by one researcher in one laboratory, comparing the relative performance of the novel material against a claimed state-of-the-art.

Much has been written about the broader reproducibility/replication crisis 2 and those reading the electrochemical literature will be familiar with weakly underpinned claims of “outstanding” performance, while being aware that comparisons may be invalidated by measurement errors introduced by experimental procedures which violate best practice; such issues frequently mar otherwise exciting science in this area. The degree of concern over the quality of reported results is evidenced by the recent decision of several journals to publish explicit experimental best practices 3 , 4 , 5 , reporting guidelines or checklists 6 , 7 , 8 , 9 , 10 and commentary 11 , 12 , 13 aiming to improve the situation, including for parallel theoretical work 14 .

We write as two electrochemists who, working in a national metrology institute, have enjoyed recent exposure to metrology: the science of measurement. Metrology provides the vocabulary 15 and mathematical tools 16 to express confidence in measurements and the comparisons made between them. Metrological systems and frameworks for quantification underpin consistency and assurance in all measurement fields and formal metrology is an everyday consideration for practical and academic work in fields where accurate measurements are crucial; we have found it a useful framework within which to evaluate our own electrochemical work. Here, rather than pen another best practice guide, we aim, with focus on three-electrode electrochemical measurements for energy material characterisation, to summarise some advice that we hope helps those performing electrochemical experiments to:

- avoid mistakes and minimise error

- report in a manner that facilitates reproducibility

- consider and quantify uncertainty

Minimising mistakes and error

Metrology dispenses with nebulous concepts such as performance and instead requires scientists to define a specific measurand (“the quantity intended to be measured”) along with a measurement model (“the mathematical relation among all quantities known to be involved in a measurement”), which converts the experimental indicators into the measurand 15 . Error is the difference between the reported value of this measurand and its unknowable true value. (Note that this is not the formal definition, and the formal concepts of error and true value are not fully compatible with the measurement concepts discussed in this article, but we retain it here, as is common in metrology tuition delivered by national metrology institutes, for pedagogical purposes 15 .)

Mistakes (or gross errors) are those things which prevent measurements from working as intended. In electrochemistry the primary experimental indicator is often current or voltage, while the measurand might be something simple, like device voltage for a given current density, or more complex, like a catalyst’s turnover frequency. Both of these are examples of ‘method-defined measurands’, where the results need to be defined in reference to the method of measurement 17 , 18 (for example, to take account of operating conditions). Robust experimental design and execution are vital to understand, quantify and minimise sources of error, and to prevent mistakes.

Contemporary electrochemical instrumentation can routinely offer a current resolution and accuracy on the order of femtoamps; however, one electron looks much like another to a potentiostat. Consequently, the practical limit on measurements of current is the scientist’s ability to unambiguously determine what causes the observed current. Crucially, they must exclude interfering processes such as modified/poisoned catalyst sites or competing reactions due to impurities.

As electrolytes are conventionally in enormous excess compared to the active heterogeneous interface, electrolyte purity requirements are very high. Note, for example, that a perfectly smooth 1 cm² polycrystalline platinum electrode has on the order of 2 nmol of atoms exposed to the electrolyte, so that irreversibly adsorbing impurities present at the part per billion level (nmol mol⁻¹) in the electrolyte may substantially alter the surface of the electrode. Sources of impurities at such low concentration are innumerable and must be carefully considered for each experiment; impurity origins for kinetic studies in aqueous solution have been considered broadly in the historical literature, alongside a review of standard mitigation methods 19 . Most commercial electrolytes contain impurities and the specific ‘grade’ chosen may have a large effect; for example, one study showed a three-fold decrease in the specific activity of oxygen reduction catalysts when preparing electrolytes with American Chemical Society (ACS) grade acid rather than a higher purity grade 20 . Likewise, even 99.999% pure hydrogen gas, frequently used for sparging, may contain more than the 0.2 μmol mol⁻¹ of carbon monoxide permitted for fuel cell use 21 .
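The scale argument in this paragraph can be checked with a few lines of arithmetic. The sketch below is illustrative only. It assumes the common convention of 210 μC cm⁻² for a hydrogen monolayer on polycrystalline platinum (one electron per surface atom) to estimate the number of surface atoms, a hypothetical electrolyte volume of 100 mL, and a dilute aqueous electrolyte approximated as pure water (about 55.5 mol per litre). None of these three values comes from the article.

```python
# Rough comparison: Pt surface atoms on 1 cm^2 vs. impurity molecules in the electrolyte.
AVOGADRO = 6.02214076e23             # mol^-1
ELEMENTARY_CHARGE = 1.602176634e-19  # C

# Assumption: 210 uC cm^-2 hydrogen monolayer charge, one electron per surface Pt atom.
H_MONOLAYER_CHARGE_C_PER_CM2 = 210e-6


def surface_atoms_nmol(area_cm2: float) -> float:
    """Amount of surface atoms (nmol) on a perfectly smooth electrode."""
    atoms = H_MONOLAYER_CHARGE_C_PER_CM2 * area_cm2 / ELEMENTARY_CHARGE
    return atoms / AVOGADRO * 1e9


def impurity_nmol(volume_ml: float, fraction_nmol_per_mol: float,
                  solvent_mol_per_l: float = 55.5) -> float:
    """Amount of impurity (nmol) in the electrolyte at a given amount fraction."""
    solvent_mol = solvent_mol_per_l * volume_ml / 1000.0
    return solvent_mol * fraction_nmol_per_mol


pt_surface_nmol = surface_atoms_nmol(1.0)              # 1 cm^2 electrode
impurity_nmol_total = impurity_nmol(100.0, 1.0)        # hypothetical 100 mL at 1 nmol/mol

# Hydrogen purity: 99.999 % leaves 10 umol/mol for all impurities combined.
h2_total_impurity_umol_per_mol = (1.0 - 0.99999) * 1e6
CO_LIMIT_UMOL_PER_MOL = 0.2                            # limit quoted in the text

print(f"Pt surface atoms: {pt_surface_nmol:.2f} nmol")
print(f"Impurity in electrolyte: {impurity_nmol_total:.2f} nmol")
print(f"H2 impurity budget / CO limit: "
      f"{h2_total_impurity_umol_per_mol / CO_LIMIT_UMOL_PER_MOL:.0f}x")
```

Under these assumptions, an impurity at only 1 nmol mol⁻¹ is present in an amount larger than the whole electrode surface, which is why irreversible adsorption at this level matters.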

The most insidious impurities are those generated in situ. The use of reference electrodes with chloride-containing filling solutions should be avoided where chloride may poison catalysts 22 or accelerate dissolution. Similarly, reactions at the counter electrode, including dissolution of the electrode itself, may result in impurities. This is sometimes overlooked when platinum counter electrodes are used to assess ‘platinum-free’ electrocatalysts, accidentally resulting in performance-enhancing contamination 23 , 24 ; a critical discussion on this topic has recently been published 25 . Other trace impurity sources include plasticisers present in cells and gaskets, or silicates from the inappropriate use of glass when working with alkaline electrolytes 26 . To mitigate sensitivity to impurities from the environment, cleaning protocols for cells and components must be robust 27 . The use of piranha solution or similarly oxidising solution followed by boiling in Type 1 water is typical when performing aqueous electrochemistry 20 . Cleaned glassware and electrodes are also routinely stored underwater to prevent recontamination from airborne impurities.

The behaviour of electronic hardware used for electrochemical experiments should be understood and considered carefully in interpreting data 28 , recognising that the built-in complexity of commercially available digital potentiostats (otherwise advantageous!) is capable of introducing measurement artefacts or ambiguity 29 , 30 . While contemporary electrochemical instrumentation may have a voltage resolution of ~1 μV, its voltage measurement uncertainty is limited by other factors, and is typically on the order of 1 mV. As passing current through an electrode changes its potential, a dedicated reference electrode is often incorporated into both ex situ and, increasingly, in situ experiments to provide a stable, well-defined reference. Reference electrodes are typically selected from a range of well-known standardised electrode–electrolyte interfaces at which a characteristic and kinetically rapid reversible faradaic process occurs. The choice of reference electrode should be made carefully in consideration of chemical compatibility with the measurement environment 31 , 32 , 33 , 34 . In combination with an electronic blocking resistance, the potential of the electrode should be stable and reproducible. Unfortunately, deviation from the ideal behaviour frequently occurs. While this can often be overlooked when comparing results from identical cells, more attention is required when reporting values for comparison.

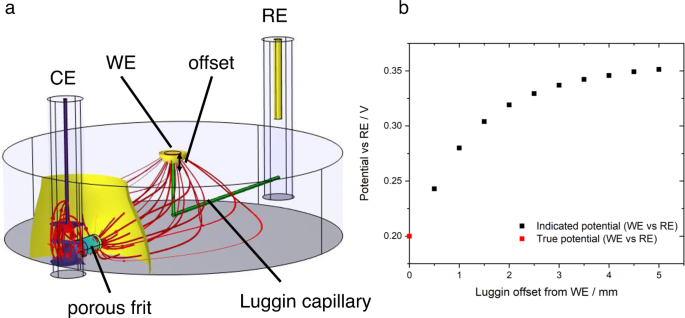

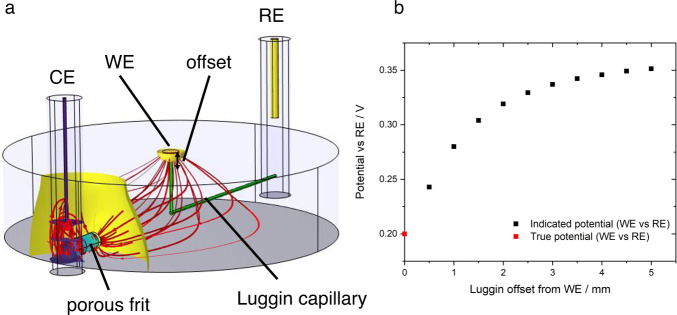

In all cases where conversion between different electrolyte–reference electrode systems is required, junction potentials should be considered. These arise whenever there are different chemical conditions in the electrolyte at the working electrode and reference electrode interfaces. Except in highly dilute solutions, or where there are large activity differences for a reactant/product of the electrode reaction (e.g. pH for hydrogen reactions), liquid junction potentials for conventional aqueous ions have been estimated to be below 50 mV 33 . Such a deviation may nonetheless be significant when onset potentials or activities at specific potentials are being reported. The measured potential difference between the working and reference electrode also depends strongly on the geometry of the cell, so cell design is critical. Fig. 1 shows the influence of cell design on potential profiles. Ideally the reference electrode should therefore be placed close to the working electrode (noting that macroscopic electrodes may have inhomogeneous potentials). To minimise shielding of the electric field between counter and working electrode and interruption of mass transport processes, a thin Luggin-Haber capillary is often used and a small separation maintained. Understanding of shielding and edge effects is vital when reference electrodes are introduced in situ. This is especially applicable for analysis of energy devices for which constraints on cell design, due to the need to minimise electrolyte resistance and seal the cell, preclude optimal reference electrode positioning 32 , 35 , 36 .

Fig. 1: (a) Illustration (simulated data) of primary (resistive) current and potential distribution in a typical three-electrode cell. The main compartment is cylindrical (4 cm diameter, 1 cm height), filled with electrolyte with conductivity 1.28 S m⁻¹ (0.1 M KCl(aq)). The working electrode (WE) is a 2 mm diameter disc drawing 1 mA (≈ 32 mA cm⁻²) from a faradaic process with infinitely fast kinetics and redox potential 0.2 V vs the reference electrode (RE). The counter electrode (CE) is connected to the main compartment by a porous frit; the RE is connected by a Luggin capillary (green cylinders) whose tip position is offset from the WE by a variable distance. Red lines indicate prevailing current paths; coloured surfaces indicate isopotential contours normal to the current density. (b) Plot of indicated WE vs RE potential (simulated data). As the Luggin tip is moved away from the WE surface, ohmic losses due to the WE-CE current distribution lead to variation in the indicated WE-RE potential. Appreciable error may arise on an offset length scale comparable to the WE radius.
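The length scale quoted in the caption can be illustrated with a textbook idealisation rather than the finite-element simulation behind the figure. The sketch below assumes a disc electrode embedded in an infinite insulating plane with a distant counter electrode, for which the primary-distribution resistance is 1/(4κa) and the on-axis potential falls off as 1 − (2/π)·arctan(z/a) (Newman's classical result). It ignores the finite cell walls, the frit, and any shielding by the capillary tip, so the numbers are indicative only. The function names are hypothetical; the conductivity, disc radius and current are taken from the caption.

```python
import math


def disc_primary_resistance(conductivity_s_per_m: float, radius_m: float) -> float:
    """Ohmic resistance (ohm) from a disc electrode to infinity, primary distribution."""
    return 1.0 / (4.0 * conductivity_s_per_m * radius_m)


def ohmic_drop_to_tip(current_a: float, conductivity_s_per_m: float,
                      radius_m: float, offset_m: float) -> float:
    """Ohmic drop (V) between the disc surface and a point on its axis at offset_m.

    Idealised: disc in an infinite insulating plane, counter electrode far away.
    """
    total_drop = current_a * disc_primary_resistance(conductivity_s_per_m, radius_m)
    return total_drop * (2.0 / math.pi) * math.atan(offset_m / radius_m)


KAPPA = 1.28      # S/m, 0.1 M KCl(aq), from the caption
RADIUS = 1.0e-3   # m, 2 mm diameter disc
CURRENT = 1.0e-3  # A

for offset_mm in (0.1, 0.5, 1.0, 2.0, 5.0):
    drop_mv = 1e3 * ohmic_drop_to_tip(CURRENT, KAPPA, RADIUS, offset_mm * 1e-3)
    print(f"tip offset {offset_mm:4.1f} mm -> uncompensated drop {drop_mv:6.1f} mV")
```

In this idealisation, half of the total ohmic drop is already included in the measurement when the tip sits one electrode radius from the surface, which is consistent with the caption's remark about offsets comparable to the WE radius.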

Quantitative statements about fundamental electrochemical processes based on measured values of current and voltage inevitably rely on models of the system. Such models have assumptions that may be routinely overlooked when following experimental and analysis methods, and that may restrict their application to real-world systems. It is quite possible to make highly precise but meaningless measurements! An often-assumed condition for electrocatalyst analysis is the absence of mass transport limitation. For some reactions, such as the acidic hydrogen oxidation and hydrogen evolution reactions, this state is arguably so challenging to reach at representative conditions that it is impossible to measure true catalyst activity 11 . For example, ex situ thin-film rotating disk electrode measurements routinely fail to predict correct trends in catalyst performance in morphologically complex catalyst layers as relevant operating conditions (e.g. meaningful current densities) are theoretically inaccessible. This topic has been extensively discussed with some authors directly criticising this technique and exploring alternatives 37 , 38 , and others defending the technique’s applicability for ranking catalysts if scrupulous attention is paid to experimental details 39 ; yet, many reports continue to use this measurement technique blindly with no regard for its applicability. We therefore strongly urge those planning measurements to consider whether their chosen technique is capable of providing sufficient evidence to disprove their hypothesis, even if it has been widely used for similar experiments.

The correct choice of technique should be dependent upon the measurand being probed rather than simply following previous reports. The case of iR correction, where a measurement of the uncompensated resistance is used to correct the applied voltage, is a good example. When the measurand is a material property, such as intrinsic catalyst activity, the uncompensated resistance is a source of error introduced by the experimental method and it should carefully be corrected out (Fig. 1 ). In the case that the uncompensated resistance is intrinsic to the measurand—for instance the operating voltage of an electrolyser cell—iR compensation is inappropriate and only serves to obfuscate. Another example is the choice of ex situ (outside the operating environment), in situ (in the operating environment), and operando (during operation) measurements. While in situ or operando testing allows characterisation under conditions that are more representative of real-world use, it may also yield measurements with increased uncertainty due to the decreased possibility for fine experimental control. Depending on the intended use of the measurement, an informed compromise must be sought between how relevant and how uncertain the resulting measurement will be.
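The iR example can be written as a one-line measurement model. The sketch below is a minimal illustration, not a prescribed procedure. It assumes the sign convention that a positive (anodic) current raises the measured potential above the interfacial value, and that the uncompensated resistance has already been determined separately. The function names and numerical values are hypothetical.

```python
def ir_corrected_potential(e_measured_v: float, current_a: float,
                           r_uncompensated_ohm: float) -> float:
    """Remove the ohmic drop I*R_u from a measured potential (anodic current positive)."""
    return e_measured_v - current_a * r_uncompensated_ohm


def reported_potential(e_measured_v: float, current_a: float,
                       r_uncompensated_ohm: float,
                       measurand_is_material_property: bool) -> float:
    """Apply iR correction only when the resistance is an artefact of the method.

    For a device-level measurand (e.g. the operating voltage of an electrolyser
    cell) the resistance is part of what is being measured, so the value is
    returned uncorrected.
    """
    if measurand_is_material_property:
        return ir_corrected_potential(e_measured_v, current_a, r_uncompensated_ohm)
    return e_measured_v


# Hypothetical values: 1.60 V measured at 10 mA with 5 ohm uncompensated resistance.
catalyst_value = reported_potential(1.60, 0.010, 5.0, measurand_is_material_property=True)
device_value = reported_potential(1.60, 0.010, 5.0, measurand_is_material_property=False)
print(f"catalyst activity potential: {catalyst_value:.3f} V")
print(f"device operating voltage:    {device_value:.3f} V")
```

Making the choice an explicit argument forces the decision to follow from the definition of the measurand rather than from habit.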

Maximising reproducibility

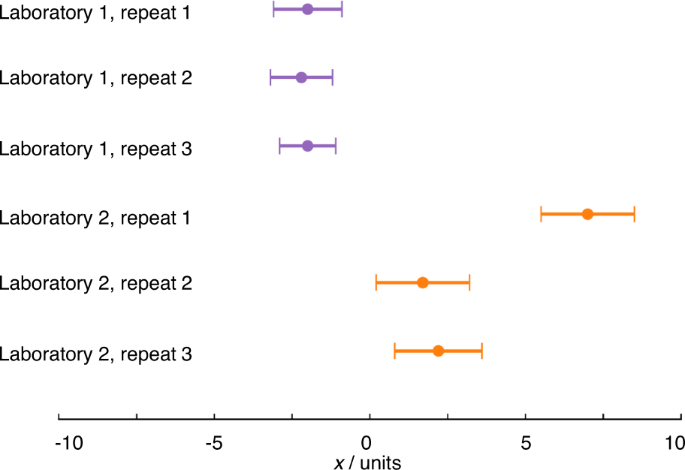

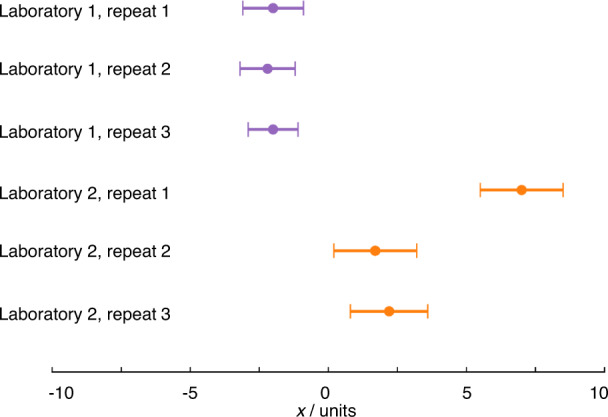

Most electrochemists assess the repeatability of measurements, performing the same measurement themselves several times. Repeats, where all steps (including sample preparation, where relevant) of a measurement are carried out multiple times, are absolutely crucial for highlighting one-off mistakes (Fig. 2 ). Reproducibility, however, is assessed when comparing results reported by different laboratories. Many readers will be familiar with the variability in key properties reported for various systems e.g. variability in the reported electrochemically active surface area (ECSA) of commercial catalysts, which might reasonably be expected to be constant, suggesting that, in practice, the reproducibility of results cannot be taken for granted. As electrochemistry deals mostly with method-defined measurands, the measurement procedure must be standardised for results to be comparable. Variation in results therefore strongly suggests that measurements are not being performed consistently and that the information typically supplied when publishing experimental methods is insufficient to facilitate reproducibility of electrochemical measurements. Quantitative electrochemical measurements require control over a large range of parameters, many of which are easily overlooked or specified imprecisely when reporting data. An understanding of the crucial parameters and methods for their control is often institutional knowledge, held by expert electrochemists, but infrequently formalised and communicated e.g. through publication of widely adopted standards. This creates challenges to both reproducibility and the corresponding assessment of experimental quality by reviewers. The reporting standards established by various publishers (see Introduction) offer a practical response, but it is still unclear whether these will contain sufficiently granular detail to improve the situation.

Fig. 2: The measurements from laboratory 1 show a high degree of repeatability, while the measurements from laboratory 2 do not. Apparently, a mistake has been made in repeat 1, which will need to be excluded from any analysis and any uncertainty analysis, and/or suggests further repeat measurements should be conducted. The error bars represent an uncertainty interval with a coverage probability of ~95% (see below), so the results from the two laboratories are different, i.e. show poor reproducibility. This may indicate differing experimental practice or that some as yet unidentified parameter is influencing the results.

Besides information typically supplied in the description of experimental methods for publication, which, at a minimum, must detail the materials, equipment and measurement methods used to generate the results, we suggest that a much more comprehensive description is often required, especially where measurements have historically poor reproducibility or the presented results differ from earlier reports. Such an expanded ‘supplementary experimental’ section would additionally include any details that could impact the results: for example, material pre-treatment, detailed electrode preparation steps, cleaning procedures, expected electrolyte and gas impurities, electrode preconditioning processes, cell geometry including electrode positions, detail of junctions between electrodes, and any other fine experimental details which might be institutional knowledge but unknown to the (now wide) readership of the electrochemical literature. In all cases any corrections and calculations used should be specified precisely and clearly justified; these may include determinations of properties of the studied system, such as ECSA, or of the environment, such as air pressure. We highlight that knowledge of the ECSA is crucial for conventional reporting of intrinsic electrocatalyst activity, but is often very challenging to measure in a reproducible manner 40 , 41 .

To aid reproducibility we recommend regularly calibrating experimental equipment and doing so in a way that is traceable to primary standards realising the International System of Units (SI) base units. The SI system ensures that measurement units (such as the volt) are uniform globally and invariant over time. Calibration applies to direct experimental indicators, e.g. loads and potentiostats, but equally to supporting tools such as temperature probes, balances, and flow meters. Calibration of reference electrodes is often overlooked even though variations from ideal behaviour can be significant 42 and, as discussed above, are often the limit of accuracy on potential measurement. Sometimes reports will specify internal calibration against a known reaction (such as the onset of the hydrogen evolution reaction), but rarely detail regular comparisons to a local master electrode artefact such as a reference hydrogen electrode or explain how that artefact is traceable, e.g. through control of the filling solution concentration and measurement conditions. If reference is made to a standardised material (e.g. commercial Pt/C) the specified material should be both widely available and the results obtained should be consistent with prior reports.

Beyond calibration and reporting, the best test of reproducibility is to perform intercomparisons between laboratories, either by comparing results to identical experiments reported in the literature or, more robustly, through participation in planned intercomparisons (for example ‘round-robin’ exercises); we highlight a recent study applied to solid electrolyte characterisation as a topical example 43 . Intercomparisons are excellent at establishing the key features of an experimental method and the comparability of results obtained from different methods; moreover they provide a consensus against which other laboratories may compare themselves. However, performing repeat measurements for assessing repeatability and reproducibility cannot estimate uncertainty comprehensively, as it excludes systematic sources of uncertainty.

Assessing measurement uncertainty

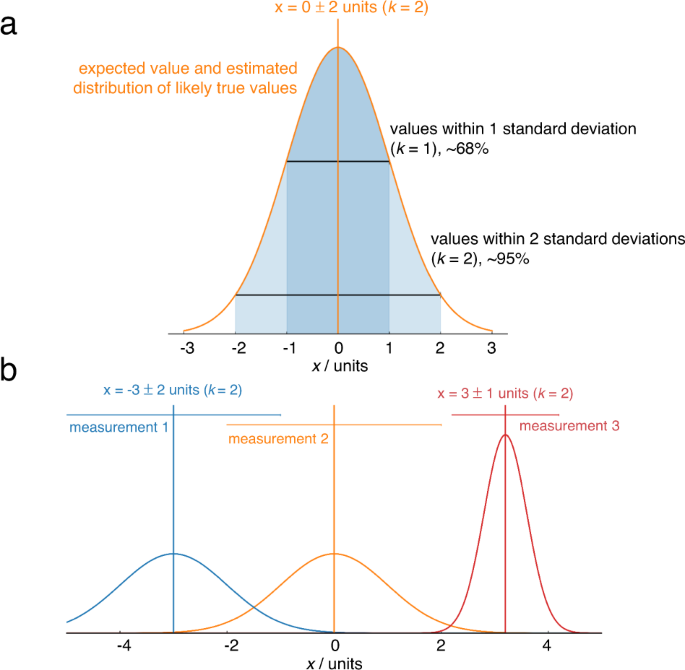

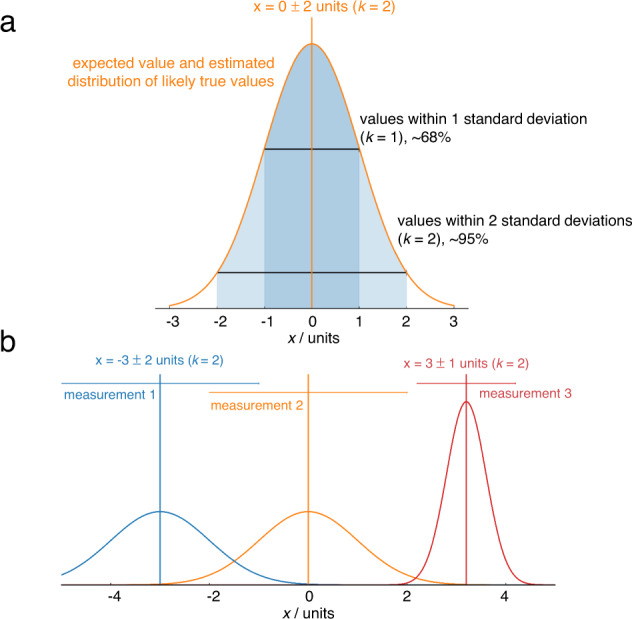

Formal uncertainty evaluation is an alien concept to most electrochemists; even the best papers (as well as our own!) typically report only the standard deviation between a few repeats. Metrological best practice dictates that reported values are stated as the combination of a best estimate of the measurand, an interval, and a coverage factor (k) which sets the probability of the true value being within that interval. For example, “the turnover frequency of the electrocatalyst is 1.0 ± 0.2 s⁻¹ (k = 2)” 16 means that the scientist (having assumed normally distributed error) is 95% confident that the turnover frequency lies in the range 0.8–1.2 s⁻¹. Reporting results in such a way makes it immediately clear whether the measurements reliably support the stated conclusions, and enables meaningful comparisons between independent results even if their uncertainties differ (Fig. 3 ). It also encourages honesty and self-reflection about the shortcomings of results, encouraging the development of improved experimental techniques.

Fig. 3: (a) Complete reporting of a measurement includes the best estimate of the measurand, an uncertainty, and the probability that the true value falls within the reported uncertainty. Here, the percentages indicate that a normal distribution has been assumed. (b) Horizontal bars indicate 95% confidence intervals from uncertainty analysis. The confidence intervals of measurements 1 and 2 overlap when using k = 2, so it is not possible to say with 95% confidence that the result of measurement 2 is higher than that of measurement 1, but it is possible to say this with 68% confidence, i.e. k = 1. Measurement 3 has a lower uncertainty, so it is possible to say with 95% confidence that its value is higher than that of measurement 2.
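The interval comparison described in the caption can be sketched in code. The example below is a minimal illustration that assumes normally distributed error (so that k = 1 and k = 2 correspond to roughly 68% and 95% coverage) and uses the simple overlap criterion from the caption. The class name, function name, and the three numerical results are hypothetical and are not read from the figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    """A reported value with its standard uncertainty (k = 1)."""
    value: float
    standard_uncertainty: float

    def interval(self, k: float) -> tuple[float, float]:
        """Coverage interval: value +/- k * standard uncertainty."""
        half_width = k * self.standard_uncertainty
        return (self.value - half_width, self.value + half_width)


def intervals_overlap(a: Result, b: Result, k: float) -> bool:
    """True if the k-expanded intervals of the two results share any value."""
    low_a, high_a = a.interval(k)
    low_b, high_b = b.interval(k)
    return low_a <= high_b and low_b <= high_a


# Hypothetical results chosen to mimic the pattern described in the caption.
m1 = Result(1.00, 0.10)   # e.g. "1.0 +/- 0.2 (k = 2)"
m2 = Result(1.25, 0.10)
m3 = Result(1.60, 0.03)

for k in (1, 2):
    print(f"k = {k}: m1/m2 overlap = {intervals_overlap(m1, m2, k)}, "
          f"m2/m3 overlap = {intervals_overlap(m2, m3, k)}")
```

Storing the standard uncertainty and applying k only when an interval is needed keeps the comparison explicit about the confidence level being claimed.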

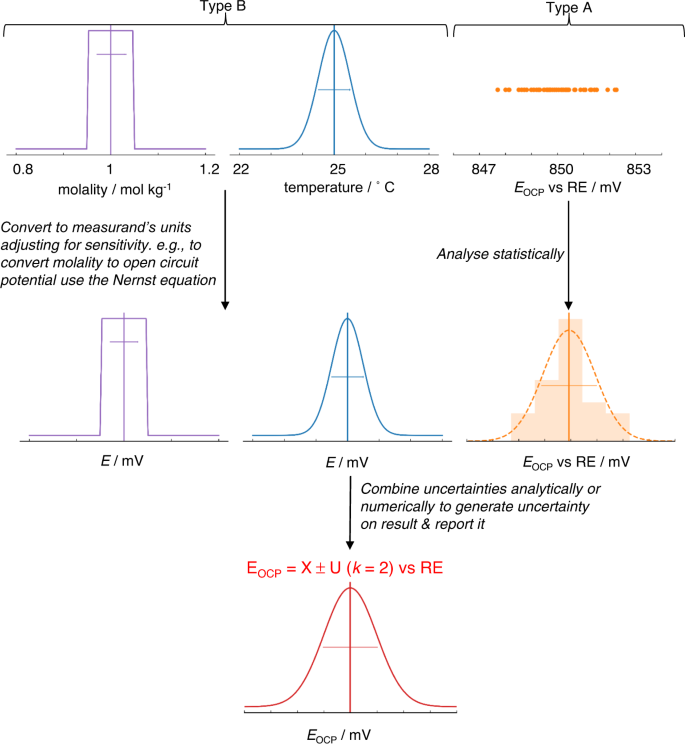

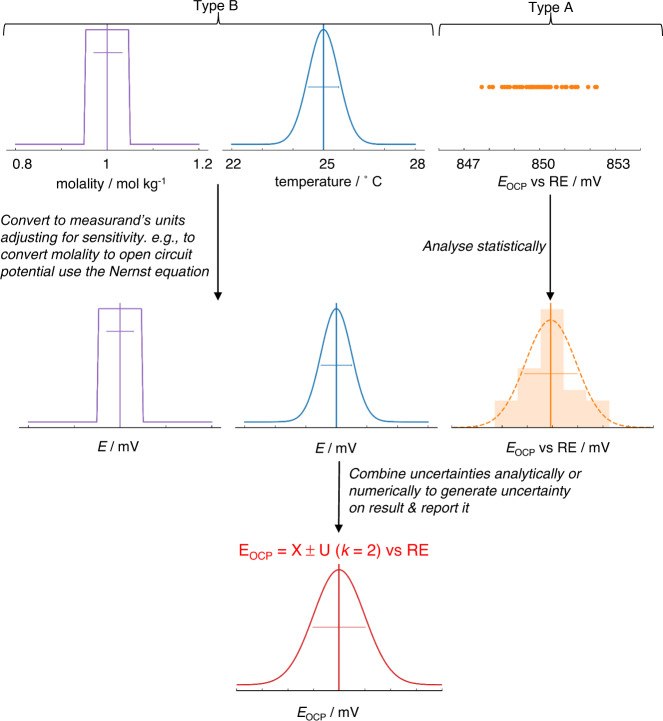

Constructing such a statement and performing the underlying calculations often appears daunting, not least as there are very few examples for electrochemical systems, with pH measurements being one example to have been treated thoroughly 44 . However, a standard process for uncertainty analysis exists, as briefly outlined graphically in Fig. 4 . We refer the interested reader to both accessible introductory texts 45 and detailed step-by-step guides 16 , 46 . The first steps in the process are to state precisely what is being measured (the measurand) and identify likely sources of uncertainty. Even this qualitative effort is often revealing. Precision in the definition of the measurand (and how it is determined from experimental indicators) clarifies the selection of measurement technique and helps to assess its appropriateness; for example, where the measurand relates only to an instantaneous property of a specific physical object, e.g. the current density of a specific fuel cell at 0.65 V following a standardised protocol, we ignore all variability in construction, device history etc. and no error is introduced by the sample. By contrast, when the measurand is a material property, such as turnover frequency of a catalyst material with a defined chemistry and preparation method, variability related to the material itself and sample preparation will often introduce substantial uncertainty in the final result. In electrochemical measurements, errors may arise from a range of sources including the measurement equipment, fluctuations in operating conditions, or variability in materials and samples. Identifying these sources leads to the design of better-quality experiments. In essence, the subsequent steps in the calculation of uncertainty quantify the uncertainty introduced by each source of error and, by using a measurement model or a sensitivity analysis (i.e. an assessment of how the results are sensitive to variability in input parameters), propagate these to arrive at a final uncertainty on the reported result.

Fig. 4: Possible sources of uncertainty are identified, and their standard uncertainty or probability distribution is determined by statistical analysis of repeat measurements (Type A uncertainties) or other evidence (Type B uncertainties). If required, uncertainties are then converted into the same unit as the measurand and adjusted for sensitivity, using a measurement model. Uncertainties are then combined either analytically using a standard approach or numerically to generate an overall estimate of uncertainty for the measurand (as indicated in Fig. 3a ).
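The process in the caption can be sketched for a deliberately simple measurand. The example below assumes a measurement model j = I / A (current density from current and electrode area), uncorrelated inputs, and first-order analytical propagation in which each standard uncertainty is scaled by a sensitivity coefficient and the contributions are added in quadrature. The Type B contributions are modelled as rectangular distributions (half-width divided by √3). All numerical values, names, and the choice of measurand are hypothetical illustrations rather than a worked budget from the article.

```python
import math
import statistics


def type_a_uncertainty(repeats: list[float]) -> float:
    """Standard uncertainty of the mean from repeat measurements (Type A)."""
    return statistics.stdev(repeats) / math.sqrt(len(repeats))


def type_b_rectangular(half_width: float) -> float:
    """Standard uncertainty for a quantity known only to lie within +/- half_width."""
    return half_width / math.sqrt(3)


def combined_uncertainty(contributions: list[tuple[float, float]]) -> float:
    """Combine (sensitivity coefficient, standard uncertainty) pairs in quadrature."""
    return math.sqrt(sum((c * u) ** 2 for c, u in contributions))


# Hypothetical inputs.
currents_ma = [10.1, 9.9, 10.0, 10.2, 9.8]   # repeat current readings, mA
area_cm2 = 0.196                              # geometric electrode area, cm^2
instrument_half_width_ma = 0.05               # assumed potentiostat accuracy limit
area_half_width_cm2 = 0.005                   # assumed tolerance on the area

mean_current = statistics.mean(currents_ma)
j = mean_current / area_cm2                   # measurand, mA cm^-2

# Sensitivity coefficients: partial derivatives of j = I / A.
c_current = 1.0 / area_cm2
c_area = -mean_current / area_cm2 ** 2

u_c = combined_uncertainty([
    (c_current, type_a_uncertainty(currents_ma)),                # repeatability
    (c_current, type_b_rectangular(instrument_half_width_ma)),   # instrument
    (c_area, type_b_rectangular(area_half_width_cm2)),           # electrode area
])
expanded = 2.0 * u_c                          # k = 2, roughly 95 % if normal

print(f"j = {j:.1f} +/- {expanded:.1f} mA cm^-2 (k = 2)")
```

In this made-up budget the area tolerance contributes more than the scatter between repeats, which is the kind of systematic contribution that a standard deviation alone would not reveal.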

Generally, given the historically poor understanding of uncertainty in electrochemistry, we promote increased awareness of uncertainty reporting standards and a focus on reporting measurement uncertainty with a level of detail that is appropriate to the claim made, or the scientific utilisation of the data. For example, where the primary conclusion of a paper relies on demonstrating that a material has the ‘highest ever X’ or ‘X is bigger than Y’ it is reasonable for reviewers to ask authors to quantify how confident they are in their measurement and statement. Additionally, where uncertainties are reported, even with error bars in numerical or graphical data, the method by which the uncertainty was determined should be stated, even if the method is consciously simple (e.g. “error bars indicate the sample standard deviation of n = 3 measurements carried out on independent electrodes”). Unfortunately, we are aware of only sporadic and incomplete efforts to create formal uncertainty budgets for electrochemical measurements of energy technologies or materials, though work is underway in our group to construct these for some exemplar systems.
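The "consciously simple" statement quoted above can be made slightly more informative at little cost. The sketch below assumes only repeatability is being assessed (a Type A evaluation, with no systematic contributions) and normally distributed scatter. It shows that for n = 3 the coverage factor needed for about 95% coverage comes from the Student t-distribution and is well above 2. The data values are hypothetical, and the t-values are standard two-sided 95% table entries.

```python
import math
import statistics

# Two-sided 95 % Student t-values indexed by degrees of freedom (n - 1).
T_95 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571}


def summarise_repeats(values: list[float]) -> dict[str, float]:
    """Mean, sample standard deviation, standard uncertainty of the mean,
    and an approximately 95 % expanded uncertainty based on Student's t."""
    n = len(values)
    s = statistics.stdev(values)
    u_mean = s / math.sqrt(n)
    k = T_95[n - 1]
    return {
        "n": n,
        "mean": statistics.mean(values),
        "sample_std": s,
        "u_mean": u_mean,
        "k_95": k,
        "expanded_95": k * u_mean,
    }


# Hypothetical turnover frequencies (s^-1) from three independent electrodes.
summary = summarise_repeats([0.95, 1.00, 1.08])
print(f"{summary['mean']:.2f} +/- {summary['expanded_95']:.2f} s^-1 "
      f"(k = {summary['k_95']}, n = {summary['n']}, repeatability only)")
```

Stating n, the statistic used, and the coverage factor alongside the value lets a reader judge how much weight three repeats can bear.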

Electrochemistry has undoubtedly thrived without significant interaction with formal metrology; we do not urge an abrupt revolution whereby rigorous measurements become devalued if they lack additional arcane formality. Rather, we recommend using the well-honed principles of metrology to illuminate best practice and increase transparency about the strengths and shortcomings of reported experiments. From rethinking experimental design, to participating in laboratory intercomparisons and estimating the uncertainty on key results, the application of metrological principles to electrochemistry will result in more robust science.

Climent, V. & Feliu, J. M. Thirty years of platinum single crystal electrochemistry. J. Solid State Electrochem . https://doi.org/10.1007/s10008-011-1372-1 (2011).

Nature Editors and Contributors. Challenges in irreproducible research collection. Nature https://www.nature.com/collections/prbfkwmwvz/ (2018).

Chen, J. G., Jones, C. W., Linic, S. & Stamenkovic, V. R. Best practices in pursuit of topics in heterogeneous electrocatalysis. ACS Catal. 7 , 6392–6393 (2017).

Article CAS Google Scholar

Voiry, D. et al. Best practices for reporting electrocatalytic performance of nanomaterials. ACS Nano 12 , 9635–9638 (2018).

Article CAS PubMed Google Scholar

Wei, C. et al. Recommended practices and benchmark activity for hydrogen and oxygen electrocatalysis in water splitting and fuel cells. Adv. Mater. 31 , 1806296 (2019).

Article Google Scholar

Chem Catalysis Editorial Team. Chem Catalysis Checklists Revision 1.1 . https://info.cell.com/introducing-our-checklists-learn-more (2021).

Chatenet, M. et al. Good practice guide for papers on fuel cells and electrolysis cells for the Journal of Power Sources. J. Power Sources 451 , 227635 (2020).

Sun, Y. K. An experimental checklist for reporting battery performances. ACS Energy Lett. 6 , 2187–2189 (2021).

Li, J. et al. Good practice guide for papers on batteries for the Journal of Power Sources. J. Power Sources 452 , 227824 (2020).

Arbizzani, C. et al. Good practice guide for papers on supercapacitors and related hybrid capacitors for the Journal of Power Sources. J. Power Sources 450 , 227636 (2020).

Hansen, J. N. et al. Is there anything better than Pt for HER? ACS Energy Lett. 6 , 1175–1180 (2021).

Article CAS PubMed PubMed Central Google Scholar

Xu, K. Navigating the minefield of battery literature. Commun. Mater. 3 , 1–7 (2022).

Dix, S. T., Lu, S. & Linic, S. Critical practices in rigorously assessing the inherent activity of nanoparticle electrocatalysts. ACS Catal. 10 , 10735–10741 (2020).

Mistry, A. et al. A minimal information set to enable verifiable theoretical battery research. ACS Energy Lett. 6 , 3831–3835 (2021).

Joint Committee for Guides in Metrology: Working Group 2. International Vocabulary of Metrology—Basic and General Concepts and Associated Terms . (2012).

Joint Committee for Guides in Metrology: Working Group 1. Evaluation of measurement data—Guide to the expression of uncertainty in measurement . (2008).

International Organization for Standardization: Committee on Conformity Assessment. ISO 17034:2016 General requirements for the competence of reference material producers . (2016).

Brown, R. J. C. & Andres, H. How should metrology bodies treat method-defined measurands? Accredit. Qual. Assur . https://doi.org/10.1007/s00769-020-01424-w (2020).

Angerstein-Kozlowska, H. Surfaces, Cells, and Solutions for Kinetics Studies . Comprehensive Treatise of Electrochemistry vol. 9: Electrodics: Experimental Techniques (Plenum Press, 1984).

Shinozaki, K., Zack, J. W., Richards, R. M., Pivovar, B. S. & Kocha, S. S. Oxygen reduction reaction measurements on platinum electrocatalysts utilizing rotating disk electrode technique. J. Electrochem. Soc. 162 , F1144–F1158 (2015).

International Organization for Standardization: Technical Committee ISO/TC 197. ISO 14687:2019(E)—Hydrogen fuel quality—Product specification . (2019).

Schmidt, T. J., Paulus, U. A., Gasteiger, H. A. & Behm, R. J. The oxygen reduction reaction on a Pt/carbon fuel cell catalyst in the presence of chloride anions. J. Electroanal. Chem. 508 , 41–47 (2001).

Chen, R. et al. Use of platinum as the counter electrode to study the activity of nonprecious metal catalysts for the hydrogen evolution reaction. ACS Energy Lett. 2 , 1070–1075 (2017).

Ji, S. G., Kim, H., Choi, H., Lee, S. & Choi, C. H. Overestimation of photoelectrochemical hydrogen evolution reactivity induced by noble metal impurities dissolved from counter/reference electrodes. ACS Catal. 10 , 3381–3389 (2020).

Jerkiewicz, G. Applicability of platinum as a counter-electrode material in electrocatalysis research. ACS Catal. 12 , 2661–2670 (2022).

Guo, J., Hsu, A., Chu, D. & Chen, R. Improving oxygen reduction reaction activities on carbon-supported ag nanoparticles in alkaline solutions. J. Phys. Chem. C. 114 , 4324–4330 (2010).

Arulmozhi, N., Esau, D., van Drunen, J. & Jerkiewicz, G. Design and development of instrumentations for the preparation of platinum single crystals for electrochemistry and electrocatalysis research Part 3: Final treatment, electrochemical measurements, and recommended laboratory practices. Electrocatal 9 , 113–123 (2017).

Colburn, A. W., Levey, K. J., O’Hare, D. & Macpherson, J. V. Lifting the lid on the potentiostat: a beginner’s guide to understanding electrochemical circuitry and practical operation. Phys. Chem. Chem. Phys. 23 , 8100–8117 (2021).

Ban, Z., Kätelhön, E. & Compton, R. G. Voltammetry of porous layers: staircase vs analog voltammetry. J. Electroanal. Chem. 776 , 25–33 (2016).

McMath, A. A., Van Drunen, J., Kim, J. & Jerkiewicz, G. Identification and analysis of electrochemical instrumentation limitations through the study of platinum surface oxide formation and reduction. Anal. Chem. 88 , 3136–3143 (2016).

Jerkiewicz, G. Standard and reversible hydrogen electrodes: theory, design, operation, and applications. ACS Catal. 10 , 8409–8417 (2020).

Ives, D. J. G. & Janz, G. J. Reference Electrodes, Theory and Practice (Academic Press, 1961).

Newman, J. & Balsara, N. P. Electrochemical Systems (Wiley, 2021).

Inzelt, G., Lewenstam, A. & Scholz, F. Handbook of Reference Electrodes (Springer Berlin, 2013).

Cimenti, M., Co, A. C., Birss, V. I. & Hill, J. M. Distortions in electrochemical impedance spectroscopy measurements using 3-electrode methods in SOFC. I—effect of cell geometry. Fuel Cells 7 , 364–376 (2007).

Hoshi, Y. et al. Optimization of reference electrode position in a three-electrode cell for impedance measurements in lithium-ion rechargeable battery by finite element method. J. Power Sources 288 , 168–175 (2015).

Jackson, C., Lin, X., Levecque, P. B. J. & Kucernak, A. R. J. Toward understanding the utilization of oxygen reduction electrocatalysts under high mass transport conditions and high overpotentials. ACS Catal. 12 , 200–211 (2022).

Masa, J., Batchelor-McAuley, C., Schuhmann, W. & Compton, R. G. Koutecky-Levich analysis applied to nanoparticle modified rotating disk electrodes: electrocatalysis or misinterpretation. Nano Res. 7 , 71–78 (2014).

Martens, S. et al. A comparison of rotating disc electrode, floating electrode technique and membrane electrode assembly measurements for catalyst testing. J. Power Sources 392 , 274–284 (2018).

Wei, C. et al. Approaches for measuring the surface areas of metal oxide electrocatalysts for determining their intrinsic electrocatalytic activity. Chem. Soc. Rev. 48 , 2518–2534 (2019).

Lukaszewski, M., Soszko, M. & Czerwiński, A. Electrochemical methods of real surface area determination of noble metal electrodes—an overview. Int. J. Electrochem. Sci. https://doi.org/10.20964/2016.06.71 (2016).

Niu, S., Li, S., Du, Y., Han, X. & Xu, P. How to reliably report the overpotential of an electrocatalyst. ACS Energy Lett. 5 , 1083–1087 (2020).

Ohno, S. et al. How certain are the reported ionic conductivities of thiophosphate-based solid electrolytes? An interlaboratory study. ACS Energy Lett. 5 , 910–915 (2020).

Buck, R. P. et al. Measurement of pH. Definition, standards, and procedures (IUPAC Recommendations 2002). Pure Appl. Chem. https://doi.org/10.1351/pac200274112169 (2003).

Bell, S. Good Practice Guide No. 11. The Beginner’s Guide to Uncertainty of Measurement. (Issue 2). (National Physical Laboratory, 2001).

United Kingdom Accreditation Service. M3003 The Expression of Uncertainty and Confidence in Measurement 4th edn. (United Kingdom Accreditation Service, 2019).

Acknowledgements

This work was supported by the National Measurement System of the UK Department of Business, Energy & Industrial Strategy. Andy Wain, Richard Brown and Gareth Hinds (National Physical Laboratory, Teddington, UK) provided insightful comments on the text.

Author information

Authors and affiliations.

National Physical Laboratory, Hampton Road, Teddington, TW11 0LW, UK

Graham Smith & Edmund J. F. Dickinson

Contributions

G.S. originated the concept for the article. G.S. and E.J.F.D. contributed to drafting, editing and revision of the manuscript.

Corresponding author

Correspondence to Graham Smith .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Peer review

Peer review information.

Nature Communications thanks Gregory Jerkiewicz for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article.

Smith, G., Dickinson, E.J.F. Error, reproducibility and uncertainty in experiments for electrochemical energy technologies. Nat Commun 13 , 6832 (2022). https://doi.org/10.1038/s41467-022-34594-x

Received : 29 July 2022

Accepted : 31 October 2022

Published : 11 November 2022

DOI : https://doi.org/10.1038/s41467-022-34594-x

The authors provide a metrology-led perspective on best practice for the electrochemical characterisation of materials for electrochemical energy technologies. Such electrochemical experiments are highly sensitive, and their results are, in practice, often of uncertain quality and challenging to reproduce quantitatively.

Subject terms: Electrocatalysis, Materials for energy and catalysis, Electrochemistry, Fuel cells, Batteries

A critical aspect of research on electrochemical energy devices, such as batteries, fuel cells and electrolysers, is the evaluation of new materials, components, or processes in electrochemical cells, either ex situ, in situ or in operation. For such experiments, rigorous experimental control and standardised methods are required to achieve reproducibility, even on standard or idealised systems such as single crystal platinum 1 . Data reported for novel materials often exhibit high (or unstated) uncertainty and often prove challenging to reproduce quantitatively. This situation is exacerbated by a lack of formally standardised methods, and practitioners with less formal training in electrochemistry being unaware of best practices. This limits trust in published metrics, with discussions on novel electrochemical systems frequently focusing on a single series of experiments performed by one researcher in one laboratory, comparing the relative performance of the novel material against a claimed state-of-the-art.

Much has been written about the broader reproducibility/replication crisis 2 and those reading the electrochemical literature will be familiar with weakly underpinned claims of “outstanding” performance, while being aware that comparisons may be invalidated by measurement errors introduced by experimental procedures which violate best practice; such issues frequently mar otherwise exciting science in this area. The degree of concern over the quality of reported results is evidenced by the recent decision of several journals to publish explicit experimental best practices 3 – 5 , reporting guidelines or checklists 6 – 10 and commentary 11 – 13 aiming to improve the situation, including for parallel theoretical work 14 .

We write as two electrochemists who, working in a national metrology institute, have enjoyed recent exposure to metrology: the science of measurement. Metrology provides the vocabulary 15 and mathematical tools 16 to express confidence in measurements and the comparisons made between them. Metrological systems and frameworks for quantification underpin consistency and assurance in all measurement fields and formal metrology is an everyday consideration for practical and academic work in fields where accurate measurements are crucial; we have found it a useful framework within which to evaluate our own electrochemical work. Here, rather than pen another best practice guide, we aim, with focus on three-electrode electrochemical measurements for energy material characterisation, to summarise some advice that we hope helps those performing electrochemical experiments to:

- avoid mistakes and minimise error

- report in a manner that facilitates reproducibility

- consider and quantify uncertainty

Minimising mistakes and error

Metrology dispenses with nebulous concepts such as performance and instead requires scientists to define a specific measurand (“the quantity intended to be measured”) along with a measurement model (“the mathematical relation among all quantities known to be involved in a measurement”), which converts the experimental indicators into the measurand 15 . Error is the difference between the reported value of this measurand and its unknowable true value. (Note this is not the formal definition, and the formal concepts of error and true value are not fully compatible with measurement concepts discussed in this article, but we retain it here, as is common in metrology tuition delivered by national metrology institutes, for pedagogical purposes 15 .)

Mistakes (or gross errors) are those things which prevent measurements from working as intended. In electrochemistry the primary experimental indicator is often current or voltage, while the measurand might be something simple, like device voltage for a given current density, or more complex, like a catalyst’s turnover frequency. Both of these are examples of ‘method-defined measurands’, where the results need to be defined in reference to the method of measurement 17 , 18 (for example, to take account of operating conditions). Robust experimental design and execution are vital to understand, quantify and minimise sources of error, and to prevent mistakes.

Contemporary electrochemical instrumentation can routinely offer a current resolution and accuracy on the order of femtoamps; however, one electron looks much like another to a potentiostat. Consequently, the practical limit on measurements of current is the scientist’s ability to unambiguously determine what causes the observed current. Crucially, they must exclude interfering processes such as modified/poisoned catalyst sites or competing reactions due to impurities.

As electrolytes are conventionally in enormous excess compared to the active heterogeneous interface, electrolyte purity requirements are very high. Note, for example, that a perfectly smooth 1 cm² polycrystalline platinum electrode has on the order of 2 nmol of atoms exposed to the electrolyte, so that irreversibly adsorbing impurities present at the part per billion level (nmol mol⁻¹) in the electrolyte may substantially alter the surface of the electrode. Sources of impurities at such low concentration are innumerable and must be carefully considered for each experiment; impurity origins for kinetic studies in aqueous solution have been considered broadly in the historical literature, alongside a review of standard mitigation methods 19 . Most commercial electrolytes contain impurities and the specific ‘grade’ chosen may have a large effect; for example, one study showed a three-fold decrease in the specific activity of oxygen reduction catalysts when preparing electrolytes with American Chemical Society (ACS) grade acid rather than a higher purity grade 20 . Likewise, even 99.999% pure hydrogen gas, frequently used for sparging, may contain more than the 0.2 μmol mol⁻¹ of carbon monoxide permitted for fuel cell use 21 .
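The order-of-magnitude argument above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the surface atom density of about 1.3 × 10¹⁵ atoms cm⁻² for polycrystalline platinum and the 100 g of water standing in for the electrolyte are assumptions introduced here, not values taken from the article.

```python
AVOGADRO = 6.02214076e23  # mol^-1 (exact SI value)

# Assumption: ~1.3e15 atoms per cm^2 on a smooth polycrystalline Pt surface.
PT_SURFACE_ATOMS_PER_CM2 = 1.3e15
WATER_MOLAR_MASS_G_PER_MOL = 18.015


def surface_atoms_nmol(area_cm2, atoms_per_cm2=PT_SURFACE_ATOMS_PER_CM2):
    """Amount of surface atoms (nmol) exposed by a smooth electrode."""
    return area_cm2 * atoms_per_cm2 / AVOGADRO * 1e9


def impurity_nmol(solvent_mass_g, impurity_nmol_per_mol,
                  molar_mass_g_per_mol=WATER_MOLAR_MASS_G_PER_MOL):
    """Amount of impurity (nmol) in a solvent at a given amount fraction."""
    solvent_mol = solvent_mass_g / molar_mass_g_per_mol
    return solvent_mol * impurity_nmol_per_mol


surface = surface_atoms_nmol(1.0)      # 1 cm^2 electrode
impurity = impurity_nmol(100.0, 1.0)   # 100 g water (hypothetical), 1 nmol/mol

print(f"surface atoms: {surface:.2f} nmol")
print(f"impurity available: {impurity:.2f} nmol")
print(f"impurity / surface ratio: {impurity / surface:.1f}")
```

Under these assumptions, a part-per-billion impurity in a modest electrolyte volume already exceeds the amount of exposed surface atoms, which is the point the paragraph makes.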

The most insidious impurities are those generated in situ. The use of reference electrodes with chloride-containing filling solutions should be avoided where chloride may poison catalysts 22 or accelerate dissolution. Similarly, reactions at the counter electrode, including dissolution of the electrode itself, may result in impurities. This is sometimes overlooked when platinum counter electrodes are used to assess ‘platinum-free’ electrocatalysts, accidentally resulting in performance-enhancing contamination 23 , 24 ; a critical discussion on this topic has recently been published 25 . Other trace impurity sources include plasticisers present in cells and gaskets, or silicates from the inappropriate use of glass when working with alkaline electrolytes 26 . To mitigate sensitivity to impurities from the environment, cleaning protocols for cells and components must be robust 27 . The use of piranha solution or similarly oxidising solution followed by boiling in Type 1 water is typical when performing aqueous electrochemistry 20 . Cleaned glassware and electrodes are also routinely stored underwater to prevent recontamination from airborne impurities.

The behaviour of electronic hardware used for electrochemical experiments should be understood and considered carefully in interpreting data 28 , recognising that the built-in complexity of commercially available digital potentiostats (otherwise advantageous!) is capable of introducing measurement artefacts or ambiguity 29 , 30 . While contemporary electrochemical instrumentation may have a voltage resolution of ~1 μV, its voltage measurement uncertainty is limited by other factors, and is typically on the order of 1 mV. As passing current through an electrode changes its potential, a dedicated reference electrode is often incorporated into both ex situ and, increasingly, in situ experiments to provide a stable, well-defined reference. Reference electrodes are typically selected from a range of well-known standardised electrode–electrolyte interfaces at which a characteristic and kinetically rapid reversible faradaic process occurs. The choice of reference electrode should be made carefully in consideration of chemical compatibility with the measurement environment 31 – 34 . In combination with an electronic blocking resistance, the potential of the electrode should be stable and reproducible. Unfortunately, deviation from the ideal behaviour frequently occurs. While this can often be overlooked when comparing results from identical cells, more attention is required when reporting values for comparison.

In all cases where conversion between different electrolyte–reference electrode systems is required, junction potentials should be considered. These arise whenever there are different chemical conditions in the electrolyte at the working electrode and reference electrode interfaces. Outside highly dilute solutions, or where there are large activity differences for a reactant/product of the electrode reaction (e.g. pH for hydrogen reactions), liquid junction potentials for conventional aqueous ions have been estimated in the range <50 mV 33 . Such a deviation may nonetheless be significant when onset potentials or activities at specific potentials are being reported. The measured potential difference between the working and reference electrode also depends strongly on the geometry of the cell, so cell design is critical. Fig. 1 shows the influence of cell design on potential profiles. Ideally the reference electrode should therefore be placed close to the working electrode (noting that macroscopic electrodes may have inhomogeneous potentials). To minimise shielding of the electric field between counter and working electrode and interruption of mass transport processes, a thin Luggin-Haber capillary is often used and a small separation maintained. Understanding of shielding and edge effects is vital when reference electrodes are introduced in situ. This is especially applicable for analysis of energy devices for which constraints on cell design, due to the need to minimise electrolyte resistance and seal the cell, preclude optimal reference electrode positioning 32 , 35 , 36 .

Fig. 1. Example of error introduced by cell geometry.

(a) Illustration (simulated data) of primary (resistive) current and potential distribution in a typical three-electrode cell. The main compartment is cylindrical (4 cm diameter, 1 cm height), filled with electrolyte with conductivity 1.28 S m⁻¹ (0.1 M KCl(aq)). The working electrode (WE) is a 2 mm diameter disc drawing 1 mA (≈ 32 mA cm⁻²) from a faradaic process with infinitely fast kinetics and redox potential 0.2 V vs the reference electrode (RE). The counter electrode (CE) is connected to the main compartment by a porous frit; the RE is connected by a Luggin capillary (green cylinders) whose tip position is offset from the WE by a variable distance. Red lines indicate prevailing current paths; coloured surfaces indicate isopotential contours normal to the current density. (b) Plot of indicated WE vs RE potential (simulated data). As the Luggin tip is moved away from the WE surface, ohmic losses due to the WE-CE current distribution lead to variation in the indicated WE-RE potential. Appreciable error may arise on an offset length scale comparable to the WE radius.
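A rough feel for the size of this effect can be had from the classical analytical result for the primary current distribution at a disc electrode embedded in an insulating plane and facing a semi-infinite electrolyte. The sketch below uses that idealisation, evaluated on the disc axis, with the conductivity, disc radius and current quoted in the caption. It is not the simulation behind the figure: it ignores the cell walls, the counter electrode position and shielding by the capillary, and the function name is hypothetical.

```python
import math


def disc_ohmic_drop_v(current_a, conductivity_s_per_m, radius_m, offset_m):
    """Ohmic drop (V) between a disc electrode and an on-axis sensing point.

    Idealised primary current distribution for a disc in an insulating plane
    with a distant counter electrode: the total resistance is 1 / (4 * kappa * a)
    and the fraction sensed at axial distance z is (2 / pi) * atan(z / a).
    """
    total_resistance_ohm = 1.0 / (4.0 * conductivity_s_per_m * radius_m)
    sensed_fraction = (2.0 / math.pi) * math.atan(offset_m / radius_m)
    return current_a * total_resistance_ohm * sensed_fraction


CURRENT_A = 1e-3        # 1 mA, as in the caption
CONDUCTIVITY = 1.28     # S/m, 0.1 M KCl(aq), as in the caption
RADIUS_M = 1e-3         # 2 mm diameter disc

for offset_mm in (0.1, 0.5, 1.0, 2.0, 5.0):
    drop_mv = 1e3 * disc_ohmic_drop_v(CURRENT_A, CONDUCTIVITY, RADIUS_M,
                                      offset_mm * 1e-3)
    print(f"tip offset {offset_mm:4.1f} mm -> ohmic error {drop_mv:6.1f} mV")
```

In this idealisation, half of the total ohmic drop is already included when the tip sits one electrode radius from the surface, consistent with the caption's remark that appreciable error arises on a length scale comparable to the WE radius.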

Quantitative statements about fundamental electrochemical processes based on measured values of current and voltage inevitably rely on models of the system. Such models have assumptions that may be routinely overlooked when following experimental and analysis methods, and that may restrict their application to real-world systems. It is quite possible to make highly precise but meaningless measurements! An often-assumed condition for electrocatalyst analysis is the absence of mass transport limitation. For some reactions, such as the acidic hydrogen oxidation and hydrogen evolution reactions, this state is arguably so challenging to reach at representative conditions that it is impossible to measure true catalyst activity 11 . For example, ex situ thin-film rotating disk electrode measurements routinely fail to predict correct trends in catalyst performance in morphologically complex catalyst layers as relevant operating conditions (e.g. meaningful current densities) are theoretically inaccessible. This topic has been extensively discussed with some authors directly criticising this technique and exploring alternatives 37 , 38 , and others defending the technique’s applicability for ranking catalysts if scrupulous attention is paid to experimental details 39 ; yet, many reports continue to use this measurement technique blindly with no regard for its applicability. We therefore strongly urge those planning measurements to consider whether their chosen technique is capable of providing sufficient evidence to disprove their hypothesis, even if it has been widely used for similar experiments.

The correct choice of technique should be dependent upon the measurand being probed rather than simply following previous reports. The case of iR correction, where a measurement of the uncompensated resistance is used to correct the applied voltage, is a good example. When the measurand is a material property, such as intrinsic catalyst activity, the uncompensated resistance is a source of error introduced by the experimental method and it should carefully be corrected out (Fig. 1 ). In the case that the uncompensated resistance is intrinsic to the measurand—for instance the operating voltage of an electrolyser cell—iR compensation is inappropriate and only serves to obfuscate. Another example is the choice of ex situ (outside the operating environment), in situ (in the operating environment), and operando (during operation) measurements. While in situ or operando testing allows characterisation under conditions that are more representative of real-world use, it may also yield measurements with increased uncertainty due to the decreased possibility for fine experimental control. Depending on the intended use of the measurement, an informed compromise must be sought between how relevant and how uncertain the resulting measurement will be.
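The iR correction described above amounts to subtracting the product of current and uncompensated resistance from the measured potential, and the decision whether to apply it follows from the definition of the measurand. A minimal sketch, assuming anodic current is taken as positive; the function names and numerical values are hypothetical.

```python
def ir_corrected_potential(measured_potential_v, current_a,
                           uncompensated_resistance_ohm):
    """Remove the ohmic drop I * R_u from a measured potential.

    Sign convention (an assumption of this sketch): anodic current is positive.
    """
    return measured_potential_v - current_a * uncompensated_resistance_ohm


def reported_potential(measured_potential_v, current_a,
                       uncompensated_resistance_ohm,
                       resistance_is_part_of_measurand):
    """Apply iR correction only when the resistance is external to the measurand."""
    if resistance_is_part_of_measurand:
        # e.g. the operating voltage of an electrolyser cell: report as measured.
        return measured_potential_v
    # e.g. intrinsic catalyst activity: the ohmic drop is an experimental error.
    return ir_corrected_potential(measured_potential_v, current_a,
                                  uncompensated_resistance_ohm)


# Hypothetical values: 1.60 V measured at 10 mA with R_u = 5 ohm.
print(reported_potential(1.60, 0.010, 5.0, resistance_is_part_of_measurand=False))
print(reported_potential(1.60, 0.010, 5.0, resistance_is_part_of_measurand=True))
```

Whichever branch is used, the value of the uncompensated resistance and how it was measured should be reported alongside the result, since its own uncertainty propagates into the corrected potential.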

Maximising reproducibility

Most electrochemists assess the repeatability of measurements, performing the same measurement themselves several times. Repeats, where all steps (including sample preparation, where relevant) of a measurement are carried out multiple times, are absolutely crucial for highlighting one-off mistakes (Fig. 2 ). Reproducibility, however, is assessed when comparing results reported by different laboratories. Many readers will be familiar with the variability in key properties reported for various systems e.g. variability in the reported electrochemically active surface area (ECSA) of commercial catalysts, which might reasonably be expected to be constant, suggesting that, in practice, the reproducibility of results cannot be taken for granted. As electrochemistry deals mostly with method-defined measurands, the measurement procedure must be standardised for results to be comparable. Variation in results therefore strongly suggests that measurements are not being performed consistently and that the information typically supplied when publishing experimental methods is insufficient to facilitate reproducibility of electrochemical measurements. Quantitative electrochemical measurements require control over a large range of parameters, many of which are easily overlooked or specified imprecisely when reporting data. An understanding of the crucial parameters and methods for their control is often institutional knowledge, held by expert electrochemists, but infrequently formalised and communicated e.g. through publication of widely adopted standards. This creates challenges to both reproducibility and the corresponding assessment of experimental quality by reviewers. The reporting standards established by various publishers (see Introduction) offer a practical response, but it is still unclear whether these will contain sufficiently granular detail to improve the situation.

Fig. 2. Illustration of reproducibility, repeatability and gross error.

The measurements from laboratory 1 show a high degree of repeatability, while the measurements from laboratory 2 do not. Apparently, a mistake has been made in repeat 1; this result will need to be excluded from any analysis, including any uncertainty analysis, and/or suggests that further repeat measurements should be conducted. The error bars are based on an uncertainty with a coverage probability of ~95% (see below), so the results from the two laboratories are different, i.e. show poor reproducibility. This may indicate differing experimental practice or that some as yet unidentified parameter is influencing the results.

Besides information typically supplied in the description of experimental methods for publication, which, at a minimum, must detail the materials, equipment and measurement methods used to generate the results, we suggest that a much more comprehensive description is often required, especially where measurements have historically poor reproducibility or the presented results differ from earlier reports. Such an expanded ‘supplementary experimental’ section would additionally include any details that could impact the results: for example, material pre-treatment, detailed electrode preparation steps, cleaning procedures, expected electrolyte and gas impurities, electrode preconditioning processes, cell geometry including electrode positions, detail of junctions between electrodes, and any other fine experimental details which might be institutional knowledge but unknown to the (now wide) readership of the electrochemical literature. In all cases any corrections and calculations used should be specified precisely and clearly justified; these may include determinations of properties of the studied system, such as ECSA, or of the environment, such as air pressure. We highlight that knowledge of the ECSA is crucial for conventional reporting of intrinsic electrocatalyst activity, but is often very challenging to measure in a reproducible manner 40 , 41 .

To aid reproducibility we recommend regularly calibrating experimental equipment and doing so in a way that is traceable to primary standards realising the International System of Units (SI) base units. The SI system ensures that measurement units (such as the volt) are uniform globally and invariant over time. Calibration applies to direct experimental indicators, e.g. loads and potentiostats, but equally to supporting tools such as temperature probes, balances, and flow meters. Calibration of reference electrodes is often overlooked even though variations from ideal behaviour can be significant 42 and, as discussed above, are often the limit of accuracy on potential measurement. Sometimes reports will specify internal calibration against a known reaction (such as the onset of the hydrogen evolution reaction), but rarely detail regular comparisons to a local master electrode artefact such as a reference hydrogen electrode or explain how that artefact is traceable, e.g. through control of the filling solution concentration and measurement conditions. If reference is made to a standardised material (e.g. commercial Pt/C) the specified material should be both widely available and the results obtained should be consistent with prior reports.

Beyond calibration and reporting, the best test of reproducibility is to perform intercomparisons between laboratories, either by comparing results to identical experiments reported in the literature or, more robustly, through participation in planned intercomparisons (for example ‘round-robin’ exercises); we highlight a recent study applied to solid electrolyte characterisation as a topical example 43 . Intercomparisons are excellent at establishing the key features of an experimental method and the comparability of results obtained from different methods; moreover they provide a consensus against which other laboratories may compare themselves. However, performing repeat measurements for assessing repeatability and reproducibility cannot estimate uncertainty comprehensively, as it excludes systematic sources of uncertainty.

Assessing measurement uncertainty

Formal uncertainty evaluation is an alien concept to most electrochemists; even the best papers (as well as our own!) typically report only the standard deviation between a few repeats. Metrological best practice dictates that reported values are stated as the combination of a best estimate of the measurand, an interval, and a coverage factor ( k ) which indicates the probability of the true value being within that interval. For example, “the turnover frequency of the electrocatalyst is 1.0 ± 0.2 s⁻¹ ( k = 2)” 16 means that the scientist (having assumed normally distributed error) is 95% confident that the turnover frequency lies in the range 0.8–1.2 s⁻¹. Reporting results in such a way makes it immediately clear whether the measurements reliably support the stated conclusions, and enables meaningful comparisons between independent results even if their uncertainties differ (Fig. 3 ). It also encourages honesty and self-reflection about the shortcomings of results, encouraging the development of improved experimental techniques.
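The link between the coverage factor and the stated confidence can be made explicit for the normally distributed case assumed in the example. The sketch below reproduces the turnover frequency statement, taking the standard uncertainty to be 0.1 s⁻¹ so that k = 2 gives the quoted ±0.2 s⁻¹; the function names are hypothetical.

```python
import math


def coverage_probability(k):
    """Probability that a normally distributed quantity lies within +/- k sigma."""
    return math.erf(k / math.sqrt(2.0))


def expanded_interval(best_estimate, standard_uncertainty, k=2.0):
    """Return (low, high) for best_estimate +/- k * standard_uncertainty."""
    half_width = k * standard_uncertainty
    return best_estimate - half_width, best_estimate + half_width


low, high = expanded_interval(1.0, 0.1, k=2.0)
print(f"turnover frequency: {low:.1f} to {high:.1f} s^-1 (k = 2)")
for k in (1.0, 2.0, 3.0):
    print(f"k = {k:.0f}: coverage probability {100 * coverage_probability(k):.1f}%")
```

For a normal distribution, k = 2 corresponds to about 95.4% coverage, which is conventionally quoted as 95%; other distributions, or small numbers of repeats, would need a different relation between k and the coverage probability.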

Fig. 3. Illustrating the role of uncertainty in deciding which result is higher.

(a) Complete reporting of a measurement includes the best estimate of the measurand, an uncertainty, and the probability that the true value falls within the uncertainty reported. Here, the percentages indicate that a normal distribution has been assumed. (b) Horizontal bars indicate 95% confidence intervals from uncertainty analysis. The confidence intervals of measurements 1 and 2 overlap when using k = 2, so it is not possible to say with 95% confidence that the result of measurement 2 is higher than that of measurement 1, but it is possible to say this with 68% confidence, i.e. k = 1. Measurement 3 has a lower uncertainty, so it is possible to say with 95% confidence that its value is higher than that of measurement 2.
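The comparison described in the caption can be sketched as a simple interval overlap test. The three measurements below are hypothetical values chosen to mimic the situation in the figure, not data from the article, and the overlap criterion is the one used in the caption rather than a formal significance test.

```python
def interval(value, standard_uncertainty, k):
    """Return (low, high) bounds of value +/- k * standard_uncertainty."""
    return value - k * standard_uncertainty, value + k * standard_uncertainty


def is_clearly_higher(candidate, reference, k):
    """True if the candidate's interval lies wholly above the reference's.

    Each measurement is a (value, standard_uncertainty) tuple.
    """
    candidate_low, _ = interval(candidate[0], candidate[1], k)
    _, reference_high = interval(reference[0], reference[1], k)
    return candidate_low > reference_high


# Hypothetical (value, standard uncertainty) pairs in arbitrary units.
measurement_1 = (1.00, 0.10)
measurement_2 = (1.25, 0.10)
measurement_3 = (1.60, 0.03)

print(is_clearly_higher(measurement_2, measurement_1, k=2))  # intervals overlap
print(is_clearly_higher(measurement_2, measurement_1, k=1))  # separated at k = 1
print(is_clearly_higher(measurement_3, measurement_2, k=2))  # separated at k = 2
```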

Constructing such a statement and performing the underlying calculations often appears daunting, not least because there are very few examples for electrochemical systems, with pH measurements being one example to have been treated thoroughly 44. However, a standard process for uncertainty analysis exists, as briefly outlined graphically in Fig. 4. We refer the interested reader to both accessible introductory texts 45 and detailed step-by-step guides 16, 46. The first steps in the process are to state precisely what is being measured (the measurand) and to identify likely sources of uncertainty. Even this qualitative effort is often revealing. Precision in the definition of the measurand (and how it is determined from experimental indicators) clarifies the selection of measurement technique and helps to assess its appropriateness. For example, where the measurand relates only to an instantaneous property of a specific physical object, e.g. the current density of a specific fuel cell at 0.65 V following a standardised protocol, we ignore all variability in construction, device history etc., and no error is introduced by the sample. By contrast, when the measurand is a material property, such as the turnover frequency of a catalyst material with a defined chemistry and preparation method, variability related to the material itself and to sample preparation will often introduce substantial uncertainty in the final result. In electrochemical measurements, errors may arise from a range of sources including the measurement equipment, fluctuations in operating conditions, or variability in materials and samples. Identifying these sources leads to the design of better-quality experiments. In essence, the subsequent steps in the calculation of uncertainty quantify the uncertainty introduced by each source of error and, by using a measurement model or a sensitivity analysis (i.e. an assessment of how sensitive the results are to variability in input parameters), propagate these to arrive at a final uncertainty on the reported result.
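The propagation step can be illustrated with a short Python sketch. It assumes a deliberately simple, hypothetical measurement model (current density as current divided by electrode area), uncorrelated inputs, and made-up values; the sensitivity coefficients are estimated numerically by finite differences and combined by root-sum-square, which is one standard way of carrying out such a calculation rather than a prescription for any particular experiment.

```python
import math


def current_density(current_a, area_cm2):
    """Hypothetical measurement model: j = I / A, in A cm^-2."""
    return current_a / area_cm2


def sensitivity(model, inputs, name, rel_step=1e-6):
    """Central finite-difference estimate of d(model)/d(input `name`)."""
    step = abs(inputs[name]) * rel_step
    upper = dict(inputs, **{name: inputs[name] + step})
    lower = dict(inputs, **{name: inputs[name] - step})
    return (model(**upper) - model(**lower)) / (2 * step)


def combined_standard_uncertainty(model, inputs, uncertainties):
    """Root-sum-square of (sensitivity x standard uncertainty), uncorrelated inputs."""
    contributions = {
        name: sensitivity(model, inputs, name) * u
        for name, u in uncertainties.items()
    }
    total = math.sqrt(sum(c ** 2 for c in contributions.values()))
    return total, contributions


# Hypothetical best estimates and standard uncertainties (same units as inputs).
inputs = {"current_a": 0.500, "area_cm2": 5.00}
uncertainties = {"current_a": 0.002, "area_cm2": 0.10}

j = current_density(**inputs)
u_j, contributions = combined_standard_uncertainty(current_density, inputs, uncertainties)
print(f"j = {j:.4f} A cm^-2, u(j) = {u_j:.4f} A cm^-2")
for name, c in contributions.items():
    print(f"  contribution from {name}: {c:+.5f} A cm^-2")
```

In this assumed example the electrode area dominates the combined uncertainty, which is the kind of insight that indicates where experimental effort is best directed.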

Fig. 4. Illustration of simplified and exaggerated uncertainty evaluation on open circuit potential vs a reference electrode (RE).

Possible sources of uncertainty are identified, and their standard uncertainty or probability distribution is determined by statistical analysis of repeat measurements (Type A uncertainties) or other evidence (Type B uncertainties). If required, uncertainties are then converted into the same unit as the measurand and adjusted for sensitivity, using a measurement model. Uncertainties are then combined, either analytically using a standard approach or numerically, to generate an overall estimate of uncertainty for the measurand (as indicated in Fig. 3a).
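The workflow in the caption can be sketched in Python for an open circuit potential measurement. All numbers and source names below are hypothetical. The sketch assumes that the Type B contributions are known only as limits and are therefore treated as rectangular distributions (half-width divided by √3), that all sources are uncorrelated and already expressed in volts with unit sensitivity, and that a coverage factor k = 2 is adequate, which strictly requires an approximately normal combined distribution with sufficient degrees of freedom.

```python
import math
import statistics

# Hypothetical repeat readings of open circuit potential vs RE, in volts.
readings_v = [0.9512, 0.9508, 0.9515, 0.9510, 0.9511]

# Type A: standard uncertainty of the mean from repeat measurements.
mean_v = statistics.mean(readings_v)
u_type_a = statistics.stdev(readings_v) / math.sqrt(len(readings_v))

# Type B: hypothetical limits treated as rectangular distributions,
# so the standard uncertainty is the half-width divided by sqrt(3).
type_b_half_widths_v = {
    "reference electrode drift": 0.0010,
    "voltmeter accuracy specification": 0.0002,
}
u_type_b = {name: a / math.sqrt(3) for name, a in type_b_half_widths_v.items()}

# Combine in quadrature, assuming uncorrelated sources with unit sensitivity.
u_combined = math.sqrt(u_type_a ** 2 + sum(u ** 2 for u in u_type_b.values()))

k = 2  # coverage factor for the expanded uncertainty
print(f"E = {mean_v:.4f} V +/- {k * u_combined:.4f} V (k = {k})")
for name, u in u_type_b.items():
    print(f"  Type B, {name}: {u * 1e3:.3f} mV")
print(f"  Type A, repeatability: {u_type_a * 1e3:.3f} mV")
```

Listing the individual contributions alongside the combined value gives a rudimentary uncertainty budget and shows, for these assumed inputs, that the reference electrode term outweighs the scatter of the repeat readings.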

Generally, given the historically poor understanding of uncertainty in electrochemistry, we promote increased awareness of uncertainty reporting standards and a focus on reporting measurement uncertainty with a level of detail that is appropriate to the claim made, or the scientific utilisation of the data. For example, where the primary conclusion of a paper relies on demonstrating that a material has the ‘highest ever X’ or ‘X is bigger than Y’ it is reasonable for reviewers to ask authors to quantify how confident they are in their measurement and statement. Additionally, where uncertainties are reported, even with error bars in numerical or graphical data, the method by which the uncertainty was determined should be stated, even if the method is consciously simple (e.g. “error bars indicate the sample standard deviation of n = 3 measurements carried out on independent electrodes”). Unfortunately, we are aware of only sporadic and incomplete efforts to create formal uncertainty budgets for electrochemical measurements of energy technologies or materials, though work is underway in our group to construct these for some exemplar systems.
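Even the consciously simple statement quoted above can be generated reproducibly. The following Python sketch uses hypothetical values and a hypothetical helper, `error_bar_statement`; it reports the sample standard deviation (n − 1 denominator) and states n explicitly, so that readers can tell it apart from a standard error of the mean.

```python
import statistics


def error_bar_statement(values, unit):
    """Summarise independent repeat results as mean +/- sample standard deviation."""
    n = len(values)
    mean = statistics.mean(values)
    s = statistics.stdev(values)  # sample standard deviation, n - 1 denominator
    return (f"{mean:.0f} +/- {s:.0f} {unit} "
            f"(mean +/- sample standard deviation, n = {n} independent electrodes)")


# Hypothetical mass activities measured on three independent electrodes.
mass_activity = [412.0, 398.0, 425.0]
print(error_bar_statement(mass_activity, "A g^-1"))
```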

Electrochemistry has undoubtedly thrived without significant interaction with formal metrology; we do not urge an abrupt revolution whereby rigorous measurements become devalued if they lack additional arcane formality. Rather, we recommend using the well-honed principles of metrology to illuminate best practice and increase transparency about the strengths and shortcomings of reported experiments. From rethinking experimental design, to participating in laboratory intercomparisons and estimating the uncertainty on key results, the application of metrological principles to electrochemistry will result in more robust science.

Acknowledgements

This work was supported by the National Measurement System of the UK Department of Business, Energy & Industrial Strategy. Andy Wain, Richard Brown and Gareth Hinds (National Physical Laboratory, Teddington, UK) provided insightful comments on the text.

Author contributions

G.S. originated the concept for the article. G.S. and E.J.F.D. contributed to drafting, editing and revision of the manuscript.

Peer review

Peer review information.

Nature Communications thanks Gregory Jerkiewicz for their contribution to the peer review of this work.

Competing interests

The authors declare no competing interests.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

- 1. Climent, V. & Feliu, J. M. Thirty years of platinum single crystal electrochemistry. J. Solid State Electrochem . 10.1007/s10008-011-1372-1 (2011).

- 2. Nature Editors and Contributors. Challenges in irreproducible research collection. Nature https://www.nature.com/collections/prbfkwmwvz/ (2018).

- 3. Chen JG, Jones CW, Linic S, Stamenkovic VR. Best practices in pursuit of topics in heterogeneous electrocatalysis. ACS Catal. 2017;7:6392–6393. doi: 10.1021/acscatal.7b02839.

- 4. Voiry D, et al. Best practices for reporting electrocatalytic performance of nanomaterials. ACS Nano. 2018;12:9635–9638. doi: 10.1021/acsnano.8b07700.

- 5. Wei C, et al. Recommended practices and benchmark activity for hydrogen and oxygen electrocatalysis in water splitting and fuel cells. Adv. Mater. 2019;31:1806296. doi: 10.1002/adma.201806296.

- 6. Chem Catalysis Editorial Team. Chem Catalysis Checklists Revision 1.1 . https://info.cell.com/introducing-our-checklists-learn-more (2021).

- 7. Chatenet M, et al. Good practice guide for papers on fuel cells and electrolysis cells for the Journal of Power Sources. J. Power Sources. 2020;451:227635. doi: 10.1016/j.jpowsour.2019.227635.

- 8. Sun YK. An experimental checklist for reporting battery performances. ACS Energy Lett. 2021;6:2187–2189. doi: 10.1021/acsenergylett.1c00870.

- 9. Li J, et al. Good practice guide for papers on batteries for the Journal of Power Sources. J. Power Sources. 2020;452:227824. doi: 10.1016/j.jpowsour.2020.227824.

- 10. Arbizzani C, et al. Good practice guide for papers on supercapacitors and related hybrid capacitors for the Journal of Power Sources. J. Power Sources. 2020;450:227636. doi: 10.1016/j.jpowsour.2019.227636.

- 11. Hansen JN, et al. Is there anything better than Pt for HER? ACS Energy Lett. 2021;6:1175–1180. doi: 10.1021/acsenergylett.1c00246.

- 12. Xu K. Navigating the minefield of battery literature. Commun. Mater. 2022;3:1–7. doi: 10.1038/s43246-022-00251-5.

- 13. Dix ST, Lu S, Linic S. Critical practices in rigorously assessing the inherent activity of nanoparticle electrocatalysts. ACS Catal. 2020;10:10735–10741. doi: 10.1021/acscatal.0c03028.

- 14. Mistry A, et al. A minimal information set to enable verifiable theoretical battery research. ACS Energy Lett. 2021;6:3831–3835. doi: 10.1021/acsenergylett.1c01710.

- 15. Joint Committee for Guides in Metrology: Working Group 2. International Vocabulary of Metrology—Basic and General Concepts and Associated Terms . (2012).

- 16. Joint Committee for Guides in Metrology: Working Group 1. Evaluation of measurement data—Guide to the expression of uncertainty in measurement . (2008).

- 17. International Organization for Standardization: Committee on Conformity Assessment. ISO 17034:2016 General requirements for the competence of reference material producers . (2016).

- 18. Brown, R. J. C. & Andres, H. How should metrology bodies treat method-defined measurands? Accredit. Qual. Assur . 10.1007/s00769-020-01424-w (2020).

- 19. Angerstein-Kozlowska, H. Surfaces, Cells, and Solutions for Kinetics Studies . Comprehensive Treatise of Electrochemistry vol. 9: Electrodics: Experimental Techniques (Plenum Press, 1984).

- 20. Shinozaki K, Zack JW, Richards RM, Pivovar BS, Kocha SS. Oxygen reduction reaction measurements on platinum electrocatalysts utilizing rotating disk electrode technique. J. Electrochem. Soc. 2015;162:F1144–F1158. doi: 10.1149/2.1071509jes.

- 21. International Organization for Standardization: Technical Committee ISO/TC 197. ISO 14687:2019(E)—Hydrogen fuel quality—Product specification . (2019).

- 22. Schmidt TJ, Paulus UA, Gasteiger HA, Behm RJ. The oxygen reduction reaction on a Pt/carbon fuel cell catalyst in the presence of chloride anions. J. Electroanal. Chem. 2001;508:41–47. doi: 10.1016/S0022-0728(01)00499-5.

- 23. Chen R, et al. Use of platinum as the counter electrode to study the activity of nonprecious metal catalysts for the hydrogen evolution reaction. ACS Energy Lett. 2017;2:1070–1075. doi: 10.1021/acsenergylett.7b00219.

- 24. Ji SG, Kim H, Choi H, Lee S, Choi CH. Overestimation of photoelectrochemical hydrogen evolution reactivity induced by noble metal impurities dissolved from counter/reference electrodes. ACS Catal. 2020;10:3381–3389. doi: 10.1021/acscatal.9b04229.

- 25. Jerkiewicz G. Applicability of platinum as a counter-electrode material in electrocatalysis research. ACS Catal. 2022;12:2661–2670. doi: 10.1021/acscatal.1c06040.

- 26. Guo J, Hsu A, Chu D, Chen R. Improving oxygen reduction reaction activities on carbon-supported Ag nanoparticles in alkaline solutions. J. Phys. Chem. C. 2010;114:4324–4330. doi: 10.1021/jp910790u.

- 27. Arulmozhi N, Esau D, van Drunen J, Jerkiewicz G. Design and development of instrumentations for the preparation of platinum single crystals for electrochemistry and electrocatalysis research Part 3: Final treatment, electrochemical measurements, and recommended laboratory practices. Electrocatal. 2017;9:113–123. doi: 10.1007/s12678-017-0426-2.

- 28. Colburn AW, Levey KJ, O’Hare D, Macpherson JV. Lifting the lid on the potentiostat: a beginner’s guide to understanding electrochemical circuitry and practical operation. Phys. Chem. Chem. Phys. 2021;23:8100–8117. doi: 10.1039/D1CP00661D.

- 29. Ban Z, Kätelhön E, Compton RG. Voltammetry of porous layers: staircase vs analog voltammetry. J. Electroanal. Chem. 2016;776:25–33. doi: 10.1016/j.jelechem.2016.06.003.

- 30. McMath AA, Van Drunen J, Kim J, Jerkiewicz G. Identification and analysis of electrochemical instrumentation limitations through the study of platinum surface oxide formation and reduction. Anal. Chem. 2016;88:3136–3143. doi: 10.1021/acs.analchem.5b04239.

- 31. Jerkiewicz G. Standard and reversible hydrogen electrodes: theory, design, operation, and applications. ACS Catal. 2020;10:8409–8417. doi: 10.1021/acscatal.0c02046.

- 32. Ives, D. J. G. & Janz, G. J. Reference Electrodes, Theory and Practice (Academic Press, 1961).

- 33. Newman, J. & Balsara, N. P. Electrochemical Systems (Wiley, 2021).

- 34. Inzelt, G., Lewenstam, A. & Scholz, F. Handbook of Reference Electrodes (Springer Berlin, 2013).

- 35. Cimenti M, Co AC, Birss VI, Hill JM. Distortions in electrochemical impedance spectroscopy measurements using 3-electrode methods in SOFC. I—effect of cell geometry. Fuel Cells. 2007;7:364–376. doi: 10.1002/fuce.200700019.

- 36. Hoshi Y, et al. Optimization of reference electrode position in a three-electrode cell for impedance measurements in lithium-ion rechargeable battery by finite element method. J. Power Sources. 2015;288:168–175. doi: 10.1016/j.jpowsour.2015.04.065.

- 37. Jackson C, Lin X, Levecque PBJ, Kucernak ARJ. Toward understanding the utilization of oxygen reduction electrocatalysts under high mass transport conditions and high overpotentials. ACS Catal. 2022;12:200–211. doi: 10.1021/acscatal.1c03908.

- 38. Masa J, Batchelor-McAuley C, Schuhmann W, Compton RG. Koutecky-Levich analysis applied to nanoparticle modified rotating disk electrodes: electrocatalysis or misinterpretation. Nano Res. 2014;7:71–78. doi: 10.1007/s12274-013-0372-0.

- 39. Martens S, et al. A comparison of rotating disc electrode, floating electrode technique and membrane electrode assembly measurements for catalyst testing. J. Power Sources. 2018;392:274–284. doi: 10.1016/j.jpowsour.2018.04.084.

- 40. Wei C, et al. Approaches for measuring the surface areas of metal oxide electrocatalysts for determining their intrinsic electrocatalytic activity. Chem. Soc. Rev. 2019;48:2518–2534. doi: 10.1039/C8CS00848E.

- 41. Lukaszewski, M., Soszko, M. & Czerwiński, A. Electrochemical methods of real surface area determination of noble metal electrodes—an overview. Int. J. Electrochem. Sci. 10.20964/2016.06.71 (2016).

- 42. Niu S, Li S, Du Y, Han X, Xu P. How to reliably report the overpotential of an electrocatalyst. ACS Energy Lett. 2020;5:1083–1087. doi: 10.1021/acsenergylett.0c00321.

- 43. Ohno S, et al. How certain are the reported ionic conductivities of thiophosphate-based solid electrolytes? An interlaboratory study. ACS Energy Lett. 2020;5:910–915. doi: 10.1021/acsenergylett.9b02764.

- 44. Buck, R. P. et al. Measurement of pH. Definition, standards, and procedures (IUPAC Recommendations 2002). Pure Appl. Chem. 10.1351/pac200274112169 (2003).

- 45. Bell, S. Good Practice Guide No. 11. The Beginner’s Guide to Uncertainty of Measurement. (Issue 2). (National Physical Laboratory, 2001).

- 46. United Kingdom Accreditation Service. M3003 The Expression of Uncertainty and Confidence in Measurement 4th edn. (United Kingdom Accreditation Service, 2019).


- Uncertainty, Accuracy, and Precision

Sources of Uncertainty in Measurements in the Lab

When taking a measurement or performing an experiment, there are many ways in which uncertainty can appear, even if the procedure is performed exactly as indicated. Each experiment and measurement needs to be considered carefully to identify potential limitations or tricky procedural spots.

When considering sources of error for a lab report, be sure to consult your lab manual and/or TA, as each course has different expectations about which sources of error or uncertainty should be discussed.

Types of Uncertainty or Error

While these are not sources of error, knowing the two main ways we classify uncertainty or error in a measurement may help you when considering your own experiments.

Systematic Error